Professional Documents

Culture Documents

Low Resolution Face Recognition Using Mixture of Experts

Uploaded by

TALLA_LOKESHOriginal Description:

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Low Resolution Face Recognition Using Mixture of Experts

Uploaded by

TALLA_LOKESHCopyright:

Available Formats

World Academy of Science, Engineering and Technology 80 2011

Low Resolution Face Recognition Using Mixture of Experts

Fatemeh Behjati Ardakani, Fatemeh Khademian, Abbas Nowzari Dalini, and Reza Ebrahimpour

AbstractHuman activity is a major concern in a wide variety of applications, such as video surveillance, human computer interface and face image database management. Detecting and recognizing faces is a crucial step in these applications. Furthermore, major advancements and initiatives in security applications in the past years have propelled face recognition technology into the spotlight. The performance of existing face recognition systems declines signicantly if the resolution of the face image falls below a certain level. This is especially critical in surveillance imagery where often, due to many reasons, only low-resolution video of faces is available. If these low-resolution images are passed to a face recognition system, the performance is usually unacceptable. Hence, resolution plays a key role in face recognition systems. In this paper we introduce a new low resolution face recognition system based on mixture of expert neural networks. In order to produce the low resolution input images we down-sampled the 48 48 ORL images to 12 12 ones using the nearest neighbor interpolation method and after that applying the bicubic interpolation method yields enhanced images which is given to the Principal Component Analysis feature extractor system. Comparison with some of the most related methods indicates that the proposed novel model yields excellent recognition rate in low resolution face recognition that is the recognition rate of 100% for the training set and 96.5% for the test set. KeywordsLow resolution face recognition, Multilayered neural network, Mixture of experts neural network, Principal component analysis, Bicubic interpolation, Nearest neighbor interpolation.

I. I NTRODUCTION

S multimedia applications become ubiquitous, increasing processor speeds face recognition algorithms are being extended and rewritten to take advantage of video and other sensor modalities that produce a continuous stream of frames instead of a single image [9]. Video based sensors can provide important visual information in a number of applications. For example, at an airport gate entrance, video cameras are being used instead of still image digital cameras. Cellphones are equipped now with cameras capable of capturing a sequence of frames instead of a single image. Camcorders are everywhere, and the need to parse video digital libraries to extract specic content (such as faces) is soon to become a daily activity of search engines. The applications of face recognition using multiple still images are not limited to entertainment, education, or surveillance. In video surveillance, the faces of interest are often of small size because of the great distance between the camera and the objects which leads to work with low resolution images. Image resolution is a potential factor

affecting face recognition performance. In the low-resolution face images, many detailed facial features are lost and faces are indiscernible to human. We also notice that in many automatic face recognition systems, the size of face images are reduced, and also achieve satised performance. But how will the image resolution affect recognition accuracy is still open to discussion. Several algorithms have been proposed to render a superresolution face image from the low-resolution one such as super-resolution algorithms [3], [4], [7], and [14] that use some interpolation techniques to enhance the image quality. Actually, these algorithms preprocess the low resolution image and pass it to the next phase which is face recognition. In the recognition phase some classication algorithms are required in order to distinguish and identify the faces from each other. Algorithms such as k-nearest neighbor [5], articial neural networks [13], local visual primitives [13] and coupled locality preserving mapping [12] have yet been deployed for the purpose of face recognition. In this paper, we proposed a method that accomplishes the face recognition by using combined neural classiers especially mixture of expert neural networks [1], [6] and also the Principal Component Analysis technique as a feature extraction tool [8]. The process in the whole is as follows: i) the rst step is enhancing the given image quality by using the bicubic interpolation method, ii) the second one is extracting eigenvalue and eigenvector from the enhanced image using PCA, iii) and nally, feed these eigenvectors to the trained network and get the nal result. In order to train the mixture of expert network, we produced an articial dataset from the ORL [8] dataset by reducing the size of its images using k-nearest interpolation algorithm. The rest of the paper is organized as follows. In Section 2 our proposed model is introduced. It is followed by the experimental results in Section 3. Finally, Section 4 draws conclusion and summarizes the paper. II. BASIC C ONCEPTS In this section we provide some basic information that are essential to understand our proposed method, including interpolation techniques, feature extraction and mixture of expert neural networks. A. Interpolation Interpolation works by using known data to estimate values at unknown points. Common interpolation algorithms can be grouped into two categories: adaptive and non-adaptive [10].

Fatemeh Behjati Ardakani, Fatemeh Khademian and Abbas Nowzari-Dalini are with the Center of Excellence in Biomathematics, School of Mathematics, Statistics and Computer Science, University of Tehran, Tehran, Iran, email addresses: (f.behjati@ut.ac.ir, khademian@ut.ac.ir, nowzari@ut.ac.ir) and Reza Ebrahimpour is with the Department of Electrical Engineering, Shahid Rajaee University, Tehran, Iran, email address: (ebrahimpour@ipm.ir).

879

World Academy of Science, Engineering and Technology 80 2011

Adaptive methods change depending on what they are interpolating (sharp edges vs. smooth texture), whereas non-adaptive methods treat all pixels equally. Since we have used the nonadaptive interpolation methods in our algorithm it is worth to introduce some techniques in this category. 1) Nearest neighbor interpolation: Nearest neighbor is the most basic and requires the least processing time of all the interpolation algorithms because it only considers one pixel; the closest one to the interpolated point. This has the effect of simply making each pixel bigger. Since this method does not conserve the image quality we apply it in order to produce low resolution images. In other words, to produce the input images we down-sampled the 48 48 ORL images to 12 12 ones using this interpolation method. 2) Bilinear Interpolation: Bilinear interpolation considers the closest 2 2 neighborhood of known pixel values surrounding the unknown pixel. It then takes a weighted average of these 4 pixels to arrive at its nal interpolated value. The bilinear interpolation results are much smoother looking images than nearest neighbor interpolation. 3) Bicubic interpolation: Bicubic goes one step beyond bilinear by considering the closest 4 4 neighborhood of known pixels, for a total of 16 pixels. Since these pixels are at various distances from the unknown pixel, closer pixels are given a higher weighting in the calculation. Bicubic produces noticeably sharper images than the previous two methods, and is perhaps the ideal combination of processing time and output quality. For this reason it is a standard in many image editing programs (including Adobe Photoshop), printer drivers and in-camera interpolation. In this work we use this interpolation method to enhance the given image quality and up-sample the 12 12 input images to 24 24 ones. B. Principal Component Analysis Principal Component Analysis (PCA) is a way of identifying patterns in data, and expressing the data in such a way as to highlight their similarities and differences [11]. Since patterns in data can be hard to nd in data of high dimension, where the luxury of graphical representation is not available, PCA is a powerful tool for analyzing data. The other main advantage of PCA is that once you have found these patterns in the data, and you compress the data, i.e. by reducing the number of dimensions, without much loss of information. This technique includes the following 5 main steps Step 1. Subtract the mean of the input data: For PCA to work properly, we have to subtract the mean from each of the data dimensions. The mean subtracted is the average across each dimension. Step 2. Calculate the covariance matrix: Recall that covariance is always measured between 2 dimensions. If we have a data set with more than 2 dimensions, there is more than one covariance measurement that can be calculated. Step 3. Calculate the eigenvectors and eigenvalues of the covariance matrix: Since the covariance matrix is square, we can calculate the eigenvectors and eigenvalues for this matrix. These are rather important, as they tell us useful information about our data.

Step 4. Choosing components and forming a feature vector: In general, once eigenvectors are found from the covariance matrix, the next step is to sort the eigenvalues in decreasing order. This gives you the components in order of signicance. Step 5. Deriving the new data set: This is the nal step in PCA, and is also the easiest. Once we have chosen the components (eigenvectors) that we wish to keep in our data and formed a feature vector, we simply take the transpose of the vector and multiply it on the left of the transpose of the original data set.

C. Mixture of Experts Expert combination is a classic strategy that has been widely used in various problem solving tasks. A team of individuals with diverse and complementary skills tackle a task jointly such that a performance better than any single individual can make is achieved via integrating the strengths of individuals [6]. The mixture of experts (ME) architecture is composed of N local experts and there is a gating network whose outputs dene the expert weights conditioned on the input (Figure 1). In our proposed method, each expert i is a multi layer perceptron (MLP) neural network with one hidden layer that computes an output Oi as a function of the input stimuli vector x, and a set of weights of hidden and output layers, and a sigmoid activation function. We assume that each expert specializes in a different area of the input space. The gating network assigns a weight gi to each of the experts output, Oi . The gating network determines the g = {g1 , g2 , , gN } as a function of the input vector x and a set of parameters such as weights of its hidden and output layers and a sigmoid activation function. Each element gi of g can be interpreted as estimates of the prior probability that expert i can generate the desired output y. The gating network is composed of two layers: the rst layer is an MLP neural network, and the second layer is a softmax nonlinear operator. Thus the gating network computes = {1 , 2 , , N }, which is the output vector of the MLP layer of the gating network, then applies the softmax function to get: gi = exp(i )

N j=1

exp(j )

i = 1, 2, , N,

Fig. 1.

The structure of mixture of experts neural network.

880

World Academy of Science, Engineering and Technology 80 2011

where N is the number of expert networks. So the gi s are nonnegative and sum to 1. The nal mixed output of the entire network is: T =

i

B. Enhancing the quality of input images As it is stated in previous sections, in most of the face recognition systems there are two distinct phases. The rst phase works on the given low resolution image and enhances its quality and the second phase works on the output of the enhanced image coming from the rst phase and deploys some classication algorithms to match the input against the dataset and get the identication task done. We used the bicubic interpolation algorithm for the above mentioned rst phase and improved the image size, reaching the 24 24 dimension. Figure 2 depicts the two samples of ORL dataset that have been modied in favor of producing articial input images. In the preprocessing phase the quality of input images is improved and their size is 24 24, which means that topology of the network needs 576 nodes in the input layer. This would make the network too complicated since there are too many free parameters and the convergence of the network might not be possible. Hence, we are going to use the feature extraction technique that returns only the informative features of the image. Among the related work in this eld we inferred that PCA feature extraction is much more convenient method for low resolution face recognition problem [2], [12], and [13]. Practical experiments show that the rst 50-th components are sufcient for this task. We should mention that these rst 50th components are sorted in descending order, so in this way we obtain the most informative PCA components. C. Face recognition Once the data got ready, it is time to give them to the neural network for the recognition purpose. As it is mentioned earlier, the designed neural network consists of several MLP neural networks that play the experts role and they are combined through the mixture of experts approach. The training set includes the eigenvectors of the 5 images of each individual which is 200 images over the total of 400 (5 40), and the other 200 images are left for the testing set. Since the input data are in the 50 dimensional space the topology of the designed network would have 50 nodes in the input layer and also because the number of subjects is 40 then the number of the nodes used in the output layer must be 40 (each node represents one subject). Therefore, the topologies

Oi gi

i = 1, 2, , N.

The weights of MLPs are learned using the error backpropagation (BP) algorithm. For each expert i and the gating network, the weights are updated according to the following equations:

T wi = e hi (y Oi )(Oi (1 Oi ))i , T i = e hi wi (y Oi )(Oi (1 Oi ))(i (1 i ))x,

= g (h g)( (1 ))T , = g T (h g)( (1 ))(1 )x, where e and g are learning rates for the expert and the gating networks, respectively. and w are the weight matrices of input to hidden, and hidden to output layer, respectively, for experts and and are the weight matrices of input to hidden and hidden to output layer, respectively, for the gating network. T i and T are the transpose of i and , the output matrices of the hidden layer of expert and gating networks, respectively. In the above formulas h = {h1 , h2 , , hN } is a vector such that each hi is an estimate of the posterior probability that expert i can generate the desired output y, and is computed as follows: hi = gi exp( 1 (y Oi )T (y Oi )) 2 . 1 T j gj exp( 2 (y Oj ) (y Oj ))

As mentioned, in Figure 1 the structure of mixture of experts method is illustrated. The following section explains our algorithm based on the preceding concepts. III. P ROPOSED

METHOD

To accomplish this work we used the ORL dataset which contains a set of grey level 4848 face images. In this dataset there are ten different images of each of 40 distinct subjects. For some subjects, the images were taken at different times, varying the lighting, facial expressions (open / closed eyes, smiling / not smiling) and facial details (glasses / no glasses). The steps of our method are as follows. A. Set up the proper dataset Since we are about to work on the low resolution images, we must down-sample the ORL dataset images to get lower quality ones. For this, we used the k-nearest neighbor interpolation algorithm and decreased the dimension of the original images by 4 which results 12 12 low resolution images. In other words, we suppose that sizes of the actual input images are 12 12. It should be noted that, in the real applications the original images are low and this step is obviously omitted.

Fig. 2. left: the original ORL dataset samples, middle: down sampled by nearest neighbor interpolation, right: enhanced images by bicubic interpolation.

881

World Academy of Science, Engineering and Technology 80 2011

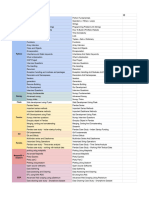

of the designed neural networks in this project differ only in the number of the hidden layer nodes. We have examined different network congurations by changing the complexity of the experts or the number of experts used in designing the combined neural network and also assigning different values to the used parameters. These experimental results are provided in the following section. A schematic representation of our proposed method is depicted in Figure 3. IV. E XPERIMENTAL RESULTS This section presents the experimental results of the proposed method. In all the experiment the gating learning rate was set to 0.5 and the number of its hidden nodes was 60 nodes, the expert learning rate was 0.8, and the network trained by 300 epochs. We ran our algorithm on different number of hidden nodes of experts and the results are provided in Table I. As it is shown in the Table I, the recognition rate for the systems containing 10 nodes in their hidden layer is relatively low. By increasing the number of hidden nodes from 10 to 20 the recognition rate improves signicantly, and in the 2experts system with 30 hidden nodes the recognition rate of

TABLE I T HE RECOGNITION RATES BASED ON DIFFERENT NUMBER OF HIDDEN NODES AND EXPERTS . 2 experts 78 % 88% 93.5% 90.5% 3 experts 75 % 90.5% 96.5% 96% 4 experts 70.5 % 92.5% 93.5% 92.5%

10 20 30 40

nodes nodes nodes nodes

Fig. 3.

The overview of our approach.

93.5% shows that the number of experts was insufcient that could not divide the input space properly. In the 4-experts system with the same number of hidden nodes comparing to the system with 3-experts there are too many free parameters that makes the network too complex to get a better result than 3-experts. So the network with 3-experts and 30 numbers of hidden nodes divides the input space in the best way and establishes a balance of the number of experts and hidden nodes. Finally increasing the number of hidden nodes to 40 incurs a loss of recognition rate comparing to 30 nodes which is because of the high complexity of the networks. We compared our method with some other related works, and the obtained results are as follows. 1) Kernel correlation lter (KCF) [2] method uses a set of MACE [2] lters to extract features from the generic training set. For every subject in the generic training set, this method builds a MACE lter. Ending up with 222 different lters, which is the total number of individuals in the generic training set. Thus the dimensionality of this feature space is 222. Each lter takes as input all 12, 776 generic training face images available. For the authentic class to whom the MACE lter belongs, the parameter of MACE lter values of all images belonging to this authentic class are set to 1. For all other images belonging to the remaining 221 impostor classes, KCF sets the corresponding parameter of MACE lter values to 0. This ensures that the lter exhibits no correlation between different subjects. The best reported recognition rate of this method is 92.31%. 2) The Hallucinating Facial Images and Features (HFIF) [13] proposed a method for simultaneous image and feature hallucination based on neighbor embedding. In HFIF method they make use of local visual primitives (LVPs) [13] in feature representation and propose local constrained neighbor selection in image/feature reconstruction. This method achieved 96% recognition rate. 3) Coupled Locality Preserving mappings (CLP) [12] is based on coupled mappings (CMs), projects the face images with different resolutions into a unied feature space which favors the task of classication. These CMs are learned through optimizing the objective function to minimize the difference between the correspondences. The best achieved recognition rate in this method is 90.1%. Face recognition is one of the most common tasks that involves the neural network system of human brain at any time. Hence, we inspired our proposed method based on this fact and also in order to improve its efciency we decided to combine various neural networks by the help of mixture of experts method. Figure 4 illustrates that our proposed method (ME) has better recognition rate comparing to the above mentioned

882

World Academy of Science, Engineering and Technology 80 2011

[10] A.N. Htwe, Image Interpolation framework using non-adaptive approach and NL means, International Journal of Network and Mobile Technologies, 1, 2010, 2832. [11] I.T. Jolliffe, Principal Component Analysis, Springer-Verlag, New York, NY, USA, 2002. [12] B. Li, H. Chang, S. Shan, and X. Chen, Low-resolution face recognition via coupled locality preserving mappings, IEEE Signal Processing Letters, 17, 2010, 2023. [13] B. Li, H. Chang, S. Shan, X. Chen, and W. Gao, Hallucinating facial images and features, in Proceedings of 19th International Conference on Pattern Recognition, IEEE Press, New York, NY, USA, pp. 14, 2008. [14] C. Liu, H. Shum, and C. Zhang, A two-step approach to hallucinating faces: Global parametric model and local nonparametric model, in Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, IEEE Press, Los Alamitos, CA, USA, pp. 192198, 2001. Fig. 4. Comparing the recognition rate of the known face recognition systems with our proposed method (ME)

methods. As we can see, the best performance is obtained with 3 experts and 30 number of hidden nodes. In this case the ability of generalization of patterns has been increased and it is much more stable comparing to the other cases. V. C ONCLUSION The basic idea of this work is that in the networks learning process, the expert networks compete for each input pattern, while the gate network rewards the winner of each competition with stronger error feedback signals. Thus, over time, the gate partitions the input space in response to the experts performance. Consequently this approach leads us to achieve 100% recognition rate for training set and 96.5% recognition rate for the testing set that by comparing with the other face recognition systems it demonstrates that although this methods computational effort is low, it gets improved results. R EFERENCES

[1] A. Iranzad, S. Masoudnia, F. Cheraghchi, A. Nowzari-Dalini, R. Ebrahimpour, in Proceedings of International Conference on Soft Computing and Pattern Recognition, IEEE Press, Paris, France, pp. 309313, 2010. [2] R. Abiantun, M. Savvides, and B. V. K. Vijaya Kumar, How low can you go? low resolution face recognition study using kernel correlation feature analysis on the FRGCv2 dataset, in Special Session on Research at the Biometric Consortium Conference, IEEE Press, New York, NY, USA, pp. 16, 2006 [3] S. Baker and T. Kanade, Hallucinating faces, in Proceedings of 14th IEEE Conference on Automatic Face and Gesture Recognition, IEEE Press, Los Alamitos, CA, USA, pp. 8388, 2000. [4] H. Chang, D. Yeung, and Y. Xiong, Super-resolution through neighbor embedding, in Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, IEEE Press, Los Alamitos, CA, USA, pp. 275282, 2004. [5] C. Conde, A. Ruiz, and E. Cabello, PCA vs low resolution images in face verication, in Proceedings of 12th International Conference on Image Analysis and Processing, IEEE Press, Los Alamitos, CA, USA, pp. 6367, 2003. [6] R. Ebrahimpour, E. Kabir, and M.R Youse, Teacher-directed learning in view-independent face recognition with mixture of experts using overlapping eigenspaces, Computer Vision and Image Understanding, 111, 2008, 195206. [7] W. Freeman, E. Pasztor, and O. Carmichael, Learning low-level vision, International Journal of Computer Vision, 40, 2000, 2547. [8] S. Haykin, Neural Networks: A Comprehensive Foundation, Prentice Hall, Ontario, Canada, 1999. [9] P.H. Hennings-Yeomans, S. Baker, and B.V.K. Vijaya Kumar, Recognition of low-resolution faces using multiple still images and multiple cameras, in Proceeding of 2th IEEE Conference on Biometrics: Theory, Applications and Systems, IEEE Press, New York, NY, USA, pp. 16, 2008.

883

You might also like

- Contextual Image Classification: Understanding Visual Data for Effective ClassificationFrom EverandContextual Image Classification: Understanding Visual Data for Effective ClassificationNo ratings yet

- Face Recognition Using Pca and Neural Network Model: Submitted by Aadarsh Kumar (15MIS0374Document11 pagesFace Recognition Using Pca and Neural Network Model: Submitted by Aadarsh Kumar (15MIS0374KrajadarshNo ratings yet

- Vision-Face Recognition Attendance Monitoring System For Surveillance Using Deep Learning Technology and Computer VisionDocument5 pagesVision-Face Recognition Attendance Monitoring System For Surveillance Using Deep Learning Technology and Computer VisionShanthi GanesanNo ratings yet

- TS - Facial RecognitionDocument10 pagesTS - Facial RecognitionYash KulkarniNo ratings yet

- Face Recognition Using Principal Component Analysis and Artificial Neural Network of Facial Images Datasets in Soft ComputingDocument7 pagesFace Recognition Using Principal Component Analysis and Artificial Neural Network of Facial Images Datasets in Soft ComputingInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Review and Comparison of Face Detection Algorithms: Kirti Dang Shanu SharmaDocument5 pagesReview and Comparison of Face Detection Algorithms: Kirti Dang Shanu SharmaKevin EnriquezNo ratings yet

- IJAREEIE Paper TemplateDocument8 pagesIJAREEIE Paper TemplateAntriksh BorkarNo ratings yet

- Face Detection Using Feed Forward Neural Network in MatlabDocument3 pagesFace Detection Using Feed Forward Neural Network in MatlabInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Face Recognition Using Back Propagation Neural Network: Siddharth RawatDocument8 pagesFace Recognition Using Back Propagation Neural Network: Siddharth RawatMayank OnkarNo ratings yet

- Face Identification AbstractDocument5 pagesFace Identification AbstractTelika RamuNo ratings yet

- 3606-Article Text-6772-1-10-20210422Document14 pages3606-Article Text-6772-1-10-20210422hello smiNo ratings yet

- Correlation Method Based PCA Subspace Using Accelerated Binary Particle Swarm Optimization For Enhanced Face RecognitionDocument4 pagesCorrelation Method Based PCA Subspace Using Accelerated Binary Particle Swarm Optimization For Enhanced Face RecognitionEditor IJRITCCNo ratings yet

- Ieee Conference Paper TemplateDocument4 pagesIeee Conference Paper TemplateMati ur rahman khanNo ratings yet

- Image ProcessingDocument33 pagesImage ProcessingTejaswini ReddyNo ratings yet

- Face Detection Tracking OpencvDocument6 pagesFace Detection Tracking OpencvMohamed ShameerNo ratings yet

- Project PPT FinalDocument19 pagesProject PPT FinalRohit GhoshNo ratings yet

- Face recognition-CS 303Document8 pagesFace recognition-CS 303Venkata hemanth ReddyNo ratings yet

- Indreni College Institute of Science and Technology: A Project Report On "Face Detection"Document10 pagesIndreni College Institute of Science and Technology: A Project Report On "Face Detection"Jeevan PandeyNo ratings yet

- Alphanumeric Recognition Using Hand Gestures: Shashank Krishna Naik, Mihir Singh, Pratik Goswami, Mahadeva Swamy GNDocument7 pagesAlphanumeric Recognition Using Hand Gestures: Shashank Krishna Naik, Mihir Singh, Pratik Goswami, Mahadeva Swamy GNShashank Krishna NaikNo ratings yet

- Image Recognition Using CNNDocument12 pagesImage Recognition Using CNNshilpaNo ratings yet

- A Face Recognition System On Embedded DeviceDocument8 pagesA Face Recognition System On Embedded DeviceChiranjib PatraNo ratings yet

- Department of Masters of Comp. ApplicationsDocument10 pagesDepartment of Masters of Comp. ApplicationsJani MuzaffarNo ratings yet

- Thesis On Face Recognition Using PcaDocument8 pagesThesis On Face Recognition Using Pcabsqbr7px100% (2)

- Face Recognition Using Artificial Neural Networks: By, Bharath Bhatt C R USN: 4JN10TE007 8 Sem TCE DeptDocument25 pagesFace Recognition Using Artificial Neural Networks: By, Bharath Bhatt C R USN: 4JN10TE007 8 Sem TCE DeptNag BhushanNo ratings yet

- Application Research of BP Neural Network in Face RecognitionDocument4 pagesApplication Research of BP Neural Network in Face Recognitionnikky234No ratings yet

- Blur and Motion Blur Influence On Face Recognition PerformanceDocument5 pagesBlur and Motion Blur Influence On Face Recognition PerformanceDhanya BNo ratings yet

- Report - EC390Document7 pagesReport - EC390Shivam PrajapatiNo ratings yet

- Attendance Marking System Using Image Recognition: Professor: Sanjay SrivastavaDocument15 pagesAttendance Marking System Using Image Recognition: Professor: Sanjay SrivastavaGhanshyam s.nairNo ratings yet

- Face RecognitionDocument23 pagesFace RecognitionAbdelfattah Al ZaqqaNo ratings yet

- Facial Recognition Using Machine LearningDocument21 pagesFacial Recognition Using Machine LearningNithishNo ratings yet

- Brain Tumor Pattern Recognition Using Correlation FilterDocument5 pagesBrain Tumor Pattern Recognition Using Correlation FilteresatjournalsNo ratings yet

- Research Article Image Processing Design and Algorithm Research Based On Cloud ComputingDocument10 pagesResearch Article Image Processing Design and Algorithm Research Based On Cloud ComputingKiruthiga PrabakaranNo ratings yet

- Face Recognition Using Gabor Filters: Muhammad SHARIF, Adeel KHALID, Mudassar RAZA, Sajjad MOHSINDocument5 pagesFace Recognition Using Gabor Filters: Muhammad SHARIF, Adeel KHALID, Mudassar RAZA, Sajjad MOHSINYuri BrasilkaNo ratings yet

- Survey FinalDocument6 pagesSurvey FinalEPIC BASS DROPSNo ratings yet

- Image PruningDocument69 pagesImage Pruningnarendran kNo ratings yet

- Face Detection in Python Using OpenCVDocument17 pagesFace Detection in Python Using OpenCVKaruna SriNo ratings yet

- Geometric Feature Learning: Unlocking Visual Insights through Geometric Feature LearningFrom EverandGeometric Feature Learning: Unlocking Visual Insights through Geometric Feature LearningNo ratings yet

- Project Report Format For CollegeDocument13 pagesProject Report Format For CollegeTik GamingNo ratings yet

- Irjet V4i5112 PDFDocument5 pagesIrjet V4i5112 PDFAnonymous plQ7aHUNo ratings yet

- B16 Paper IEEEDocument6 pagesB16 Paper IEEEAman PrasadNo ratings yet

- Study On Advancement in Face Recognition Technology: Arpit Arora, Abhishek KR - Rai, DR - Leena AryaDocument4 pagesStudy On Advancement in Face Recognition Technology: Arpit Arora, Abhishek KR - Rai, DR - Leena AryaRavindra KumarNo ratings yet

- Development of An Efficient Face Recognition System Based On Linear and Nonlinear AlgorithmsDocument9 pagesDevelopment of An Efficient Face Recognition System Based On Linear and Nonlinear AlgorithmsIAES IJAINo ratings yet

- A MATLAB Based Face Recognition Using PCA With Back Propagation Neural Network - Research and Reviews - International Journals PDFDocument6 pagesA MATLAB Based Face Recognition Using PCA With Back Propagation Neural Network - Research and Reviews - International Journals PDFDeepak MakkarNo ratings yet

- ECE885 Computer Vision: Prof. Bhupinder VermaDocument59 pagesECE885 Computer Vision: Prof. Bhupinder VermaHarpuneet SinghNo ratings yet

- Research Article: Applying Artificial Neural Networks For Face RecognitionDocument17 pagesResearch Article: Applying Artificial Neural Networks For Face Recognitionsalman1992No ratings yet

- REPORTDocument82 pagesREPORTTamizh KdzNo ratings yet

- Sign Language Recognition From Digital Videos Using Feature Pyramid Network With Detection TransformerDocument13 pagesSign Language Recognition From Digital Videos Using Feature Pyramid Network With Detection TransformerMiral ElnakibNo ratings yet

- Application of The OpenCV-Python For Personal Identifier StatementDocument4 pagesApplication of The OpenCV-Python For Personal Identifier StatementMuzammil NaeemNo ratings yet

- Assignment 1 NFDocument6 pagesAssignment 1 NFKhondoker Abu NaimNo ratings yet

- Ivanna K. Timotius 2010Document9 pagesIvanna K. Timotius 2010Neeraj Kannaugiya0% (1)

- Title Automated Student Attendance Management System Using Face Recognition Domain: Image Processing, Deep Learning ObjectiveDocument8 pagesTitle Automated Student Attendance Management System Using Face Recognition Domain: Image Processing, Deep Learning ObjectiveRizaur RehamanNo ratings yet

- DATA MINING and MACHINE LEARNING. PREDICTIVE TECHNIQUES: ENSEMBLE METHODS, BOOSTING, BAGGING, RANDOM FOREST, DECISION TREES and REGRESSION TREES.: Examples with MATLABFrom EverandDATA MINING and MACHINE LEARNING. PREDICTIVE TECHNIQUES: ENSEMBLE METHODS, BOOSTING, BAGGING, RANDOM FOREST, DECISION TREES and REGRESSION TREES.: Examples with MATLABNo ratings yet

- Project Report: Topic: Real Time Facial Expression RecognitionDocument25 pagesProject Report: Topic: Real Time Facial Expression RecognitionHarsih KangaNo ratings yet

- Liveness Detection in Face Recognition Using Deep LearningDocument4 pagesLiveness Detection in Face Recognition Using Deep LearningIvan FadillahNo ratings yet

- Project Report: Topic: Real Time Facial Expression RecognitionDocument24 pagesProject Report: Topic: Real Time Facial Expression RecognitionHarsih KangaNo ratings yet

- ControlDocument9 pagesControlmohammed al jbobyNo ratings yet

- Preprocessing Techniques To Improve CNNDocument20 pagesPreprocessing Techniques To Improve CNNgrazzymaya02No ratings yet

- Mpginmc 1079Document7 pagesMpginmc 1079Duong DangNo ratings yet

- BATCH - 6 Mini Project FinalDocument39 pagesBATCH - 6 Mini Project FinalBanthalapati S Lavakumar RajuNo ratings yet

- Unit 2 - Machine Learning - WWW - Rgpvnotes.inDocument21 pagesUnit 2 - Machine Learning - WWW - Rgpvnotes.inluckyaliss786No ratings yet

- CIS 520, Machine Learning, Fall 2015: Assignment 2 Due: Friday, September 18th, 11:59pm (Via Turnin)Document3 pagesCIS 520, Machine Learning, Fall 2015: Assignment 2 Due: Friday, September 18th, 11:59pm (Via Turnin)DiDo Mohammed AbdullaNo ratings yet

- Machine Learning Techniques For Anomaly Detection: An OverviewDocument10 pagesMachine Learning Techniques For Anomaly Detection: An OverviewYin ChuangNo ratings yet

- Entropy: A Labeling Method For Financial Time Series Prediction Based On TrendsDocument27 pagesEntropy: A Labeling Method For Financial Time Series Prediction Based On TrendsPhillipe S. ScofieldNo ratings yet

- Lazy vs. Eager LearningDocument6 pagesLazy vs. Eager Learningakshayhazari8281No ratings yet

- Topic 4 MLDocument125 pagesTopic 4 MLﻣﺤﻤﺪ الخميسNo ratings yet

- 2020conf DeepFitDocument16 pages2020conf DeepFitPham Van ToanNo ratings yet

- Best Resources To Learn Statistics - Analytics VidhyaDocument7 pagesBest Resources To Learn Statistics - Analytics VidhyaAshishAmitavNo ratings yet

- Knowledge-Based Systems: P Rajesh Kanna, M.E., Assistant Professor, P Santhi, M.E., PH.D., ProfessorDocument12 pagesKnowledge-Based Systems: P Rajesh Kanna, M.E., Assistant Professor, P Santhi, M.E., PH.D., ProfessorSikha ReddyNo ratings yet

- Predicting The Churn in Telecom IndustryDocument23 pagesPredicting The Churn in Telecom Industryashish841No ratings yet

- SUBRAMANI Sudha-Thesis - Nosignature PDFDocument214 pagesSUBRAMANI Sudha-Thesis - Nosignature PDFNahúm Flores GutiérrezNo ratings yet

- Fmicb 13 925454Document12 pagesFmicb 13 925454Panca SatifaNo ratings yet

- Optimization of Lodging Costs For Rental Listing Homes - Kim Kerby SilvestreDocument21 pagesOptimization of Lodging Costs For Rental Listing Homes - Kim Kerby SilvestreKim Kerby SilvestreNo ratings yet

- Determination of Customer Satisfaction Using Improved K-Means AlgorithmDocument19 pagesDetermination of Customer Satisfaction Using Improved K-Means AlgorithmAC CahuloganNo ratings yet

- Tutorial 6Document8 pagesTutorial 6POEASONo ratings yet

- Plagiarism Checker X Originality Report: Similarity Found: 12%Document10 pagesPlagiarism Checker X Originality Report: Similarity Found: 12%Project DevelopmentNo ratings yet

- Scodeen Global Python DS - ML - Django Syllabus Version 13Document22 pagesScodeen Global Python DS - ML - Django Syllabus Version 13AbhijitNo ratings yet

- CampusX Course IndexDocument3 pagesCampusX Course IndexShameel LambaNo ratings yet

- Review Paper On Three Phase Fault AnalysisDocument6 pagesReview Paper On Three Phase Fault AnalysisPritesh Singh50% (2)

- Comic Characters Detection Using Deep LearningDocument6 pagesComic Characters Detection Using Deep LearningBenedict NsiahNo ratings yet

- Document Classification Methods ForDocument26 pagesDocument Classification Methods ForTrần Văn LongNo ratings yet

- Speech Emotion Detection Using Machine LearningDocument11 pagesSpeech Emotion Detection Using Machine LearningdataprodcsNo ratings yet

- 1 PBDocument12 pages1 PBAbdallah GrimaNo ratings yet

- Dyslexia Prediction Using Machine LearningDocument9 pagesDyslexia Prediction Using Machine LearningInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- My Final FileDocument54 pagesMy Final Fileharshit gargNo ratings yet

- Distance Based ModelsDocument19 pagesDistance Based ModelsNidhiNo ratings yet

- Capstone Story TemplateDocument30 pagesCapstone Story TemplateekeneNo ratings yet

- Data Science ClassesDocument13 pagesData Science ClassesBakari HamisiNo ratings yet

- ClusteringDocument84 pagesClusteringmanmeet singh tutejaNo ratings yet

- Dokumen Tanpa JudulDocument12 pagesDokumen Tanpa JudulHairun AnwarNo ratings yet