
Engineering Management Journal, Vol. 20 No. 3, September 2008

Learning From Less Successful Kaizen Events: A Case Study

Jennifer A. Farris, Texas Tech

Eileen M. Van Aken, Virginia Tech

Toni L. Doolen, Oregon State University

June Worley, Oregon State University

Abstract: This paper describes results from an ongoing research program focused on identifying determinants of Kaizen event effectiveness, both in terms of initial event outcomes and the sustainability of outcomes. Although anecdotal published accounts suggest that increasing numbers of companies are using Kaizen events, and that these projects can result in substantial improvement in key business metrics, there is a lack of systematic research on Kaizen events. A particular weakness of the current published accounts is the lack of attention to less successful events: only strongly successful applications of Kaizen events receive much coverage in the accounts. However, the organizational learning literature suggests that understanding less successful cases is a key component of organizational learning. We present a case study from a less successful Kaizen event to demonstrate how the case study event contributed to organizational learning. We also present a set of methods and measures that can be used by practicing engineering managers and engineering management researchers to evaluate and analyze Kaizen event performance. The implications of the case study event for the current body of knowledge on Kaizen events are also examined, and, finally, directions for future research are described.

Keywords: Productivity, Teams, Lean Manufacturing, Quality Management

EMJ Focus Areas: Strategic and Operations Management, Program and Project Management, Quality Management

Refereed management tool manuscript. Accepted by Associate Editor Landaeta. A previous version of this work was presented at the ASEM 2006 Conference.

A Kaizen event is a focused and structured improvement project, using a dedicated cross-functional team to improve a targeted work area, with specific goals, in an accelerated timeframe (Letens, Farris, and Van Aken, 2006). During the relatively short timeframe of the event (generally 3 to 5 days), Kaizen event team members apply low-cost problem-solving tools and techniques to rapidly plan and, often, implement improvements in a target work area. Evidence suggests that Kaizen events have become increasingly popular in recent years as a method of rapidly introducing improvements. In particular, Kaizen events have been associated with the implementation of lean production (Womack, Roos, and Jones, 1990). In fact, Kaizen events apparently originated with Toyota, which used this method to train its suppliers in lean production practices during the 1970s (Sheridan, 1997).

Anecdotal published accounts suggest that some Kaizen events have resulted in substantial improvements in key business metrics, as well as in important human resource outcomes (Sheridan, 1997; Melnyk, Calantone, Montabon, and Smith, 1998; Laraia, Moody, and Hall, 1999); however, despite their popularity and apparent potential for creating improvement, there is a lack of systematic research on Kaizen events. The majority of current Kaizen event publications are focused on anecdotal results from companies that have implemented Kaizen events (Sheridan, 1997; Cuscela, 1998) and untested design recommendations from individuals and organizations that facilitate Kaizen events (e.g., Laraia et al., 1999; Vitalo, Butz, and Vitalo, 2003). There is no systematic, empirical evidence on what sort of Kaizen event designs may be most effective for achieving and sustaining improvements in business performance or human resource outcomes.

A particular weakness of the current published accounts is the lack of attention to less successful events: only strongly successful Kaizen events receive much coverage in the accounts. This is despite the fact that Laraia et al. (1999) suggest that most companies will have difficulty sustaining even half of the results from a given event. In addition, the organizational learning literature suggests that understanding less successful tool applications is a key component of organizational learning (Sitkin, 1992; Lounamaa and March, 1989; Cole, 1992).

This paper describes results from an ongoing research program focused on identifying determinants of Kaizen event effectiveness, both in terms of initial event outcomes and the sustainability of outcomes. In particular, the paper presents a case study of a less successful Kaizen event studied in the current research to demonstrate how the event contributed to organizational learning, and to highlight how the methods and measures used in the current research allowed triangulation of multiple data types and sources. The implications of the case study event for the current body of knowledge on Kaizen events are also examined, and, finally, directions for future research are described.

Current Research Program

Based on the lack of previous research on Kaizen events, in 2002, Oregon State University and Virginia Tech began a joint research effort aimed at understanding Kaizen events. This effort is aimed at measuring the outcomes of Kaizen events (both technical performance and human resource outcomes), and identifying what design and context factors relate to the effectiveness of Kaizen events, both in terms of generating positive initial results and sustaining event outcomes over time.

The goal of the research is to sample multiple Kaizen events in multiple organizations, in order to better understand how these events (and associated outcomes) vary both within and across organizations and industry types. A total of six organizations participated in the first phase of this research program, which contained the case study event described here (a total of 51 events were studied), and nine organizations are currently participating in the second phase of the research program. Industry types range from heavily manufacturing-focused companies (e.g., secondary wood products manufacturers) to heavily engineering-oriented companies (e.g., electronic motor manufacturer). Within each organization, events within different organizational process areas (e.g., manufacturing, engineering, sales, and product development) are being studied. The first phase of this research focused on initial outcomes of events, while subsequent phases are focusing on results sustainability. This case study was completed before the first phase of the larger study was complete, and an earlier work focused on the analysis of the case study data alone (Farris, Van Aken, Doolen, and Worley, 2006²). In this paper, we compare the results from the case study to findings from the now-complete first phase of the larger study, to further evaluate case study findings; however, it is important to stress that the contributions of the findings from the larger data set are entirely post hoc. The evaluation of overall event performance and identification of likely contributing factors were completed based on the case study data alone. Findings from the larger data set, as included here, are used only as a further test of the robustness of case study conclusions.

Measures

A Kaizen event is a complex organizational phenomenon, with the potential to impact both a technical system (i.e., work area performance) and a social system (i.e., participating employees and work area employees). In addition, Kaizen events typically use a semi-autonomous team (a social system) to apply a specific set of technical problem-solving tools. Thus, a complex and varied set of measures, including both technical system measures and social system measures, is necessary in studying Kaizen events.

Exhibit 1 presents the research model developed to study initial Kaizen event outcomes. (See Farris, Van Aken, Doolen, and Worley, 2006¹, for a description of how the factors in the model were identified.) For the study of longitudinal outcomes, additional factors related to the target work area and sustainability mechanisms will be included. The variables studied in the research on initial outcomes are summarized in Exhibit 2.

The longitudinal study of Kaizen event outcomes investigates the relationship between technical system outcomes, work area factors, and follow-up mechanisms. Technical system outcomes to be studied include perceived success (overall success), impact on the area, and the percentage of improvement sustained. Work area factors to be investigated include work area employee learning behaviors, work area employee stewardship, work area employee Kaizen capabilities (i.e., task- and problem-solving knowledge, skills, and abilities gained from Kaizen event participation), work area employee commitment to Kaizen events, and the history of Kaizen events in the target work area (both before and after the target Kaizen event). Follow-up mechanisms to be investigated include the amount of resources invested in follow-up (hours), as well as the specific mechanism used (e.g., management review meetings, audits, training on new standard work procedures, etc.).

Methods

Each participating organization first completes a semi-structured interview on its Kaizen event program as a whole (i.e., the way it plans, implements, sustains, and supports Kaizen events). This interview guide contains 35 open-ended questions related to the Kaizen event program. Then, for each Kaizen event studied within participating organizations, four research instruments are used to collect data for the study of initial event outcomes: two questionnaires completed by team members (a Kickoff Survey and a Report Out Survey), one log of team activities, and one questionnaire completed by the event facilitator (an Event Information Sheet). These instruments, their contents, and administration procedures are summarized in Exhibit 3. In addition to these instruments, organizational documents (e.g., the report out presentation file, team charter if used, etc.) are also collected.

Exhibit 1. Research Model for Initial Outcomes

Input Factors

Kaizen Event Design Antecedents: Goal Clarity; Goal Difficulty; Team Autonomy; Team Functional Heterogeneity; Team Kaizen Experience; Team Leader Experience

Organizational and Work Area Antecedents: Event Planning Process; Management Support; Work Area Routineness

Process Factors

Kaizen Event Process Factors: Action Orientation; Affective Commitment to Change; Internal Processes; Tool Appropriateness; Tool Quality

Outcomes

Technical Systems Outcomes: Goal Achievement; Impact on Area; Overall Perceived Success

Social System Outcomes: Attitude; Kaizen Capabilities

Besides the quantitative measures described in Exhibit 3, the research instruments are designed to collect additional, qualitative data on the event design, context, process, and outcomes. For instance, the Report Out Survey contains two open-ended questions that ask team members to describe the biggest obstacles to the success of their team and the biggest contributors to the success of their team, respectively. The Event Information Sheet contains additional open-ended questions asking the facilitator to describe his/her perceptions of the biggest obstacles and biggest contributors, as well as the event planning process, the kickoff meeting process, team training, and management interaction with the team during the event.

In addition to the data collected on initial outcomes, a Post-Event Information Sheet is used to collect longitudinal data on the sustainability of event results approximately 12 months after event completion. Longitudinal data are being collected for the 51 events studied during the first phase of this research, as well as for subsequent events studied during additional phases of the research. Some non-response is expected for a variety of reasons (e.g., work area no longer exists, etc.). Exhibit 4 describes the types of data collected in the Post-Event Information Sheet. It is noted that pilot efforts in studying the sustainability of event outcomes led to a change in the methodology from the version presented in previous work (Farris et al., 2006²), specifically the combination of two instruments and the reduction of the number of repeated observations. Both changes were designed to reduce the measurement burden on participating companies and to increase the likelihood of response, as pilot efforts suggested that the initial methodology was likely to result in high non-response.

As can be seen, the current research methodology uses a mixture of measures, methods, and data sources to provide a holistic assessment of the event design, context, process, and outcomes. For instance, technical success is measured through multiple measures (e.g., goal achievement, overall perceived success, perceived impact on area) of multiple types (i.e., survey scales and objective measures) and multiple data sources (i.e., data from team members and the event facilitator as well as secondary, supporting data from organizational documents). In addition, the collection of qualitative contextual data can be used to further explain conclusions drawn from the quantitative data. For instance, team member and facilitator descriptions of the biggest contributors and biggest obstacles to team success can provide rich data useful for understanding the event context.

Exhibit 2. Variables Studied in Research on Initial Outcomes

| Variable | Description |
| --- | --- |
| Goal Clarity | Team perceptions of the clarity of their improvement goals |
| Goal Difficulty | Team perceptions of the difficulty of their improvement goals |
| Team Kaizen Experience | Average number of previous Kaizen events completed by team members |
| Team Functional Heterogeneity | Diversity of functional expertise within the Kaizen event team |
| Team Autonomy | Amount of control over event activities given to the Kaizen event team |
| Team Leader Experience | Number of previous Kaizen events the team leader has led or co-led |
| Management Support | Team perceptions of the adequacy of resources dedicated to the event |
| Event Planning Process | Total person-hours invested in planning |
| Work Area Routineness | General complexity of the target system, based on the stability of the product mix and degree of routineness of product flow |
| Action Orientation | Team perceptions of the extent to which their team focused on implementation versus analysis |
| Affective Commitment to Change | Team member perceptions of the need for the Kaizen event |
| Tool Appropriateness | Facilitator rating of the appropriateness of the problem-solving tools used by the team during the event |
| Tool Quality | Facilitator rating of the quality of the team's use of these tools |
| Internal Processes | Team member ratings of the internal harmony and coordination of their team |
| Goal Achievement | Aggregate percentage of major improvement goals met |
| Perceived Success (Overall Success) | Stakeholder perceptions of the overall success of the Kaizen event |
| Impact on Area | Team member perceptions of the impact of the event on the target area |
| Kaizen Capabilities | Perceived incremental gains in employee task- and problem-solving knowledge, skills, and abilities resulting from a specific Kaizen event |
| Attitude | Team perceptions of the degree to which members gained affect for events |

Case Study

The case study organization is a manufacturer of large equipment which is participating in the current research. The case study organization has been conducting Kaizen events since 1998 and conducts events in both manufacturing and non-manufacturing work areas, with a roughly 70/30% ratio in favor of non-manufacturing areas. On the whole, the case study organization management believes that the Kaizen event program is recognized as a success within the company. The results for the specific event described in this case study demonstrate how even organizations with substantial maturity and experience in conducting Kaizen events experience variation in outcomes across events. The study results also demonstrate how the measurement tools and methods used in this research provide valuable information that the organization, as well as the researchers, can use to learn from all of its events (both more and less successful events). Finally, this case study demonstrates how a less successful case can be useful for deriving testable propositions regarding the contributing factors to event success.

Exhibit 3. Study Instruments for Initial Outcomes

| Instrument | Measures | Person(s) Completing | Timing | Method |
| --- | --- | --- | --- | --- |
| Kickoff Survey | Goal Clarity; Goal Difficulty; Affective Commitment to Change | Team members | First day of event, at the end of the kickoff meeting, which introduces event goals | Group administration |
| Team Activities Log | None directly; provides an understanding of event context and can be compared to Action Orientation scale results | One team member | During event, at the end of each day | Self-administered |
| Report Out Survey | Attitude; Impact on Area; Kaizen Capabilities; Team Autonomy; Management Support; Action Orientation; Internal Processes; Overall Success (Perceived Success) | Team members | Last day of event, at the end of the report out meeting, where the team presents results to management | Group administration |
| Event Information Sheet | Team Functional Heterogeneity; Team Leader Experience; Event Planning Process; Work Area Routineness; Tool Appropriateness; Tool Quality; Overall Success (Perceived Success); Goal Achievement | Facilitator | One to two weeks after event | Self-administered or administered via phone interview |

Exhibit 4. Study Instruments for Sustainability Study

| Instrument | Measures | Person(s) Completing | Timing | Method |
| --- | --- | --- | --- | --- |
| Post-Event Information Sheet | Follow-Up Investment; Kaizen Event History; Overall Success (Perceived Success); Percentage of Improvement Sustained; Impact on Area; Work Area Learning; Work Area Stewardship; Work Area Commitment to Kaizen Events; Work Area Attitude; Work Area Kaizen Capabilities | Work Area Manager | T2 = 12 months after event | Self-administered or administered via phone interview |

As part of the current research, the case study organization collected data from a manufacturing-focused event, which occurred in February 2006. The event focused on improving the quality of the raw material delivered to production, and will be referred to here as the Raw Material Quality (RMQ) event. The event was five days long and the primary intended outcome was an action plan for improving raw material quality. None of the changes suggested during the event would be implemented until after the conclusion of the event. In the current research, events of this type are referred to as non-implementation events.

While the Kaizen event literature and the authors' experience both suggest that many Kaizen events include full or partial implementation of the team's solution as part of the formal event activities (i.e., implementation events), the current data set and some published accounts reveal that some organizations hold events where implementation of changes is not a part of event design. In these events, the intended output is a plan for change, which could be a detailed action plan for implementing changes to a work area or product or a more general list of action items (e.g., from a value stream mapping activity). In the 51 events studied in the first phase of this research, 75% (38) were implementation and 25% (13) were non-implementation. It follows that the averages for individual variables in our research are likely more representative of implementation events than of non-implementation events. Because of this, we note in this paper how the event compared to the other 12 non-implementation events in the sample, as well as to the larger data set as a whole.

The detailed objectives for the RMQ event are summarized in Exhibit 5. There were 11 members on the RMQ event team. All 11 team members completed the Kickoff Survey (100% response rate) and 10 team members completed the Report Out Survey (91% response rate).

The objective here is not to present all the data collected from the event, but rather to highlight how the current research contributed to a holistic understanding of the event and how the event process could be improved. This is considered both in terms of the relative levels of the different variables measured in this case study event and the comparison of this event to the other events studied in the first phase of the larger research program.

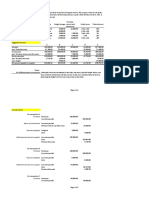

Exhibits 6 and 7 display the boxplots of team member ratings for the Kickoff Survey variables and Report Out Survey variables, respectively, while Exhibit 8 lists the summary statistics for the Kickoff Survey and Report Out Survey. On the boxplots, the y-axes denote the response scales for the survey questions. All survey questions used the same 6-point Likert-type (Likert, 1932) response scale (1 = strongly disagree, 2 = disagree, 3 = tend to disagree, 4 = tend to agree, 5 = agree, and 6 = strongly agree). The x-axes denote the different survey variables. The middle line of each boxplot represents the within-team median score for that variable, while the box captures 50% of the data, and the whiskers capture the upper and lower quartiles. Outliers and extreme outliers are indicated by an open circle and asterisk, respectively.
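The boxplot elements described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' plotting procedure: the ratings are hypothetical, the function name `boxplot_summary` is invented for this example, and the 1.5 × interquartile-range rule for flagging outliers is an assumed convention that the paper does not state.

```python
import statistics


def boxplot_summary(ratings, fence=1.5):
    """Compute the values a boxplot draws for one survey variable.

    The 1.5 * IQR fence for flagging outliers is an assumed convention;
    the paper does not state which rule its plotting software used.
    """
    q1, median, q3 = statistics.quantiles(ratings, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence, high_fence = q1 - fence * iqr, q3 + fence * iqr
    inside = [r for r in ratings if low_fence <= r <= high_fence]
    return {
        "q1": q1,                      # lower edge of the box
        "median": median,              # middle line of the box
        "q3": q3,                      # upper edge of the box
        "whisker_low": min(inside),    # lowest rating that is not an outlier
        "whisker_high": max(inside),   # highest rating that is not an outlier
        "outliers": [r for r in ratings if r < low_fence or r > high_fence],
    }


# Hypothetical ratings from ten team members on the 6-point scale.
hypothetical_ratings = [3, 4, 4, 4, 4, 5, 5, 5, 6, 6]
summary = boxplot_summary(hypothetical_ratings)
print(summary)
```

The box spans `q1` to `q3` and therefore holds the middle 50% of the ratings, which matches the description of the exhibits.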

As shown in Exhibit 8, we calculated parametric statistics (mean, standard deviation), as well as nonparametric statistics (median, range), for the Kickoff Survey and Report Out Survey scales. Along with the majority of researchers utilizing psychometric data, we subscribe to the measurement paradigm that considers parametric analysis methods appropriate as long as the distributional properties of the data support them and the latent variables being measured can be assumed to be continuous. An analysis of the distributional properties of the scale-level data for the larger sample of 51 events studied in this research suggested that most rated variables were approximately normally distributed (Farris, 2006), thus supporting the use of parametric methods. In addition, an examination of the boxplots (Exhibits 6 and 7) suggests that the data for the RMQ event were also approximately normal for many variables (e.g., Affective Commitment to Change, Kaizen Capabilities), although for other variables, a skew is noted (e.g., Team Autonomy). Thus, given our philosophy of measurement and the actual characteristics of the data, we consider parametric, as well as nonparametric, statistics to be meaningful for these analyses. However, we note that the use of parametric statistics with psychometric data is not completely without controversy, with a minority of social science researchers, primarily those subscribing to representational theory (Stevens, 1946, 1951), opposing the use of parametric statistics with psychometric data under all or most conditions (for a more detailed review of the topic see Borgatta and Bohrnstedt, 1980; Gaito, 1980; Goldstein and Hersen, 1984; Hand, 1996; Lord, 1953; Michell, 1986; Townsend and Ashby, 1984; Velleman and Wilkinson, 1993; Zumbo and Zimmerman, 1993).
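As a rough illustration of the two families of statistics named above, the sketch below computes the parametric pair (mean, standard deviation) and the nonparametric pair (median, range) for one survey scale. The ratings and the function name `scale_summary` are hypothetical and are not taken from Exhibit 8.

```python
import statistics


def scale_summary(ratings):
    """Return parametric and nonparametric summaries for one survey scale."""
    return {
        "mean": statistics.mean(ratings),     # parametric
        "sd": statistics.stdev(ratings),      # parametric (sample standard deviation)
        "median": statistics.median(ratings), # nonparametric
        "range": max(ratings) - min(ratings), # nonparametric
    }


# Hypothetical scale scores for four team members (not data from the study).
example = scale_summary([4, 4, 5, 5])
print(example)
```

Reporting both pairs side by side lets a reader who prefers ordinal-only treatment of Likert-type data rely on the median and range alone.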

Interpreting Team Outcomes

Analysis of the raw technical system outcomes, that is, team performance versus goals, would suggest that the team was successful in meeting its objectives (see Exhibit 5); however, the team's goals were not measurable per se, especially since exact targets (e.g., decrease product throughput time by 30%) had not been specified. The conclusion at the time of the initial case study analysis was that this event was highly successful in terms of objective results. This finding is supported by the data set of 51 events studied in the first phase of the larger research program, where these results place this event in the top two-thirds of the events studied in terms of goal achievement. In addition, this event was similar to the majority of the other 12 non-implementation events, where the mean goal achievement was 83%, with a mode of 100% (9 out of 13 observations) and a minimum of zero.

Exhibit 5. RMQ Event Goals

| Goal | Result Achieved |
| --- | --- |
| 1. Standardize the raw material inspection process throughout supply chain | 1. Action plan developed includes plans to designate a single inspection process owner (work group), create standard inspection criteria, and develop standard training. |
| 2. Communicate incoming quality requirements to vendors | 2. Action plan developed to communicate standard criteria to vendors |
| 3. Reduce raw material inventory levels | 3. Action plan developed to reduce inventory by implementing just-in-time delivery of raw material and a kanban system for safety stock |
| 4. Decrease product throughput time | 4. Estimated 59% decrease in throughput time if all changes implemented |

Survey results would more tentatively support the successfulness of the event: most of the team members tentatively agreed that the event was a technical success. For the Overall Success variable (see Exhibits 2 and 7), both the within-team median rating and the within-team mean rating were between 4 (tend to agree) and 5 (agree) on the 6-point survey response scale (4.20 and 4.50, respectively). Meanwhile, team member perceptions of the impact of the RMQ event on the target system (Impact on Area) were also tentatively positive. The within-team median for Impact on Area was 4.00 and the within-team mean (representing the team-level variable score) was 4.04; however, compared to the larger data set of 51 events, the RMQ event had the fourth lowest score for Impact on Area (bottom 8%), while the average Impact on Area score was 4.94. Even among the 13 non-implementation events only, the RMQ event had the third lowest score for Impact on Area, while the average score for non-implementation events was 4.69.

Finally, team member responses to the Attitude and Kaizen Capabilities scales suggest that the event had somewhat positively impacted employee affect toward Kaizen events and employee continuous improvement capabilities (see Exhibit 7). Both the within-team median and mean scores for these variables were between 4 (tend to agree) and 5 (agree). The within-team median was 4.00 for Attitude and 4.56 for Kaizen Capabilities, while the within-team mean was 4.20 for Attitude and 4.39 for Kaizen Capabilities; however, even though team member responses were on the positive (agree) side of the survey scale, out of the 51 events studied in the first phase of the larger research program, the RMQ event had the second lowest score (based on within-team means) for Attitude (bottom 4%) and the seventh lowest score for Kaizen Capabilities (bottom 14%). In addition, for the 13 non-implementation events only, the RMQ event had the lowest score for Attitude and the third lowest score for Kaizen Capabilities. The averages across all 51 events in the data set were 5.00 for Attitude and 4.87 for Kaizen Capabilities; for non-implementation events, the averages were 4.98 and 4.76, respectively. The error structure of the data set (i.e., the 51 teams nested within six organizations) makes the results of ordinary least squares-based tests for differences across events suspect due to violation of independence assumptions; however, the relative position of the RMQ event in the data set suggests that this was one of the least successful events in phase one of the research in terms of human resource impact and perceived impact on area.

Exhibit 6. Kickoff Survey Results

[Boxplots of team member ratings. Y-axis: response scale, 1.0 to 7.0. X-axis: Goal Clarity (N = 10), Goal Difficulty (N = 10), Affective Commitment to Change (N = 11).]

Exhibit 7. Report Out Survey Results

[Boxplots of team member ratings. Y-axis: response scale, 1.0 to 7.0. X-axis: Team Autonomy, Management Support, Action Orientation, Internal Processes, Attitude, Kaizen Capabilities, Impact on Area, Overall Success. N = 10 for every variable except one, for which N = 9.]
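The "bottom X%" figures quoted above follow directly from the event's rank among the 51 phase-one events. A minimal sketch of that arithmetic, using the ranks reported in the text (the function name `bottom_share` is invented for this illustration):

```python
def bottom_share(rank_from_bottom, n_events):
    """Percentage of events at or below the given rank, counted from the lowest score."""
    return 100 * rank_from_bottom / n_events


# Ranks reported in the text for the RMQ event among the 51 phase-one events.
reported_ranks = [("Impact on Area", 4), ("Attitude", 2), ("Kaizen Capabilities", 7)]
for variable, rank in reported_ranks:
    print(f"{variable}: bottom {bottom_share(rank, 51):.0f}%")
```

Because this comparison is purely descriptive, it does not depend on the independence assumptions that make ordinary least squares-based tests suspect for teams nested within organizations.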

Furthermore, additional sources of data examined in this research support a much less positive picture of the overall success of the event than the team's objective performance versus its goals does. The facilitator rating for the Overall Success variable (obtained via the Event Information Sheet) was 1.0 (strongly disagree). The RMQ event was one of only two events in the phase one data set of 51 events to receive an Overall Success rating of 1.0 from the facilitator (the other event was an implementation event). In an open-ended response describing management interaction with the Kaizen event team, the facilitator said he felt that the event was a failure, even though he believed the team's solution was technically sound. Furthermore, the member of management who was present at the team report out to review the team's solution rejected the majority of the team's suggestions outright. Other surrounding factors, especially lack of buy-in from a key function that was not represented in the event team, make it almost certain that the improvements identified in the event will never be implemented. On balance, therefore, it is clear that, except for the potential for sustaining the gains in employee Attitude and Kaizen Capabilities from participation, the RMQ event was not ultimately a strong success (i.e., it did not directly result in changes that were then implemented to improve organizational performance). Thus, it is clear that the multiple data sources and measures used in this research provided a more comprehensive picture of overall event impact and success than any of the measures would have alone, especially if only the raw technical outcomes were examined, as is common practice in published Kaizen event accounts. This case example highlights the need for multiple measures of event outcomes (e.g., goal achievement, human resource outcomes, perceived success) from multiple sources (e.g., team members, facilitator) to provide a holistic picture of the event and both its immediate and short-term results. After analyzing the overall impact of the event, and determining that it was a less successful application, the next step was to investigate what factors may have contributed to these results.

Identifying Obstacles to Team Success

In case study research, triangulation is one method commonly used to draw overall conclusions from case study data. In a case study context, triangulation describes the situation where data from multiple sources or multiple data collection methods are examined to determine to what extent these data lead to the same conclusions (Yin, 2003). Often, one or more data sources being examined are qualitative in nature and the cross-datum comparison is also typically made using qualitative analysis methods (looking for common themes, etc.). This form of triangulation is conceptually similar to, but methodologically distinct from, the mathematical triangulation used to determine the location of a given point in land surveying, etc. In the current research, triangulation was used to evaluate the overall effectiveness of the event. In addition, triangulation of the data collected on event input and process factors led to the identification of three major factors that likely contributed to the limited success of the RMQ event,

Exhibit 8. Summary Statistics for Kickoff Survey and Report Out Survey Variables

Survey              Variable              Median   Mean   Standard Deviation   Range
Kickoff Survey      Goal Clarity          4.00     3.98   0.69                 2.25 - 5.00
Kickoff Survey      Goal Difficulty       3.88     3.98   0.75                 3.00 - 5.50
Kickoff Survey      Commitment to Goals   4.50     4.45   0.59                 3.33 - 5.50
Report Out Survey   Attitude              4.25     4.23   0.63                 3.00 - 5.00
Report Out Survey   Impact on Area        4.00     3.97   1.06                 1.75 - 5.00
Report Out Survey   Skills                4.25     4.20   0.48                 3.00 - 4.75
Report Out Survey   Understanding of CI   4.63     4.45   0.81                 2.50 - 5.25
Report Out Survey   Team Autonomy         4.00     3.88   1.04                 1.50 - 4.75
Report Out Survey   Management Support    4.40     4.22   0.82                 2.60 - 5.20
Report Out Survey   Action Orientation    2.75     2.43   0.85                 1.25 - 3.50
Report Out Survey   Internal Processes    4.80     4.64   0.72                 3.00 - 5.60
Report Out Survey   Overall Success       4.50     4.20   1.32                 2.00 - 6.00

thus suggesting ways the organization can improve its Kaizen event process and expanding the current body of knowledge on Kaizen event effectiveness. Although the identification of these factors is not based on experimentation, the triangulation of data strengthens the conclusion that these factors likely contributed to the overall event results. In addition, the factors identified in this case study are compared to results from analysis of the larger phase one data set (i.e., 51 events from six organizations).

First, the method used to communicate the event goals to the team appears likely to have resulted in lower than optimal goal clarity. Data provided by the event facilitator (via the Event Information Sheet) on the kickoff meeting process indicate that the event sponsor completed the goals presentation portion of the kickoff meeting and that he used a one-way, top-down delivery format. The sponsor's presentation of the goals did not include any description of the motivation behind the goals (i.e., how the event was identified or what the motivating business issues were) or any interactive discussion of the goals with the team. That is, the team was not invited to discuss the goals with the sponsor or to ask questions to clarify the sponsor's expectations. This method of communicating the goals to the team likely made it more difficult for the team to achieve improvements (due to confusion about what improvements were needed), and, more importantly, put the team at risk of failing to meet sponsor expectations, since those expectations appear not to have been clear to the team and the team had no opportunity to ask questions to clarify or deepen their understanding. This apparent lack of understanding of sponsor expectations at least partly explains why management ultimately found the team's solutions unacceptable despite positive objective results (see the previous section). Data collected using other study measures and sources are consistent with this interpretation. For instance, the within-team median for the Kickoff Survey variable Goal Clarity was 4.00 ("tend to agree") and the within-team mean (the team-level variable value) was 3.98, indicating a somewhat positive orientation but an overall lack of confidence by team members about the clarity of the goals.
Furthermore, the majority of RMQ team members (six out of 10 responding team members) reported a Goal Clarity score of 4.00 or lower. Only one team member reported a Goal Clarity score greater than 4.50. In addition, compared to the larger data set of 51 events, the RMQ event had the sixth lowest score for Goal Clarity (bottom 12%), while the average Goal Clarity score was 4.59. For the 13 non-implementation events only, the RMQ event had the second lowest score for Goal Clarity, while the average score for non-implementation events was 4.61. Finally, open-ended responses to perceptions of the biggest obstacles to team success (an open-ended question in the Report Out Survey and the Event Information Sheet) provide further support for the idea that team goal clarity could have been improved. One team member stated that the main obstacle to the success of his/her team was "[difficulty in] focusing on our main objective and who would do it." Thus, these results suggest that low levels of goal clarity may contribute to failure to meet sponsor expectations, even if the objective results achieved appear positive. Meanwhile, results from statistical analysis of the larger data set indicate that low goal clarity also reduces the effectiveness of internal team dynamics (i.e., Internal Processes), which, in turn, leads to lower levels of Attitude and Kaizen Capabilities (Farris, Van Aken, Doolen, and Worley, 2007). This is consistent with findings from this case study. Although Internal Processes was the highest-rated Report Out Survey variable for the RMQ event (the within-team median was 4.80 and the within-team mean was 4.64), the RMQ event had the fifth lowest score for Internal Processes in the larger data set (bottom 10%), while the mean for Internal Processes across all 51 events in the phase one data set was 5.17. For the 13 non-implementation events only, the RMQ event had the lowest score for Internal Processes, while the mean score for non-implementation events was 5.25. Thus, case study results suggest that goal clarity is a key variable in predicting and managing event success, both in terms of technical system outcomes and human resource outcomes.
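
The team-level values discussed above are simple aggregates of individual survey scores: a within-team median, a within-team mean, and the share of respondents at or below a given score. A minimal sketch of that aggregation follows. The individual scores are hypothetical placeholders, not the RMQ team's actual responses, and the function name is ours.

```python
from statistics import mean, median


def team_level_summary(scores: list[float]) -> dict[str, float]:
    """Aggregate individual scale scores into within-team statistics."""
    if not scores:
        raise ValueError("at least one respondent is required")
    return {
        "n": len(scores),
        "median": median(scores),
        "mean": round(mean(scores), 2),
        # Share of respondents scoring 4.00 or lower.
        "share_at_or_below_4": sum(s <= 4.0 for s in scores) / len(scores),
    }


# Hypothetical scores for a ten-person team (illustration only).
hypothetical_scores = [2.5, 3.0, 3.5, 3.75, 4.0, 4.0, 4.25, 4.5, 4.5, 5.0]
print(team_level_summary(hypothetical_scores))
```

Reporting the median and the share of low scores alongside the mean shows whether a middling team-level value reflects broad agreement or a split among respondents.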

Second, the team lacked representation from a key function, thus likely limiting the appropriateness of the team's solution and reducing the likelihood of post-event implementation, due to a lack of input and buy-in from the function. Data from the Event Information Sheet indicate that a key contributor to raw material quality was not represented on the team: the group responsible for actually performing the inspections of the material, and thus, ultimately responsible for implementing and sustaining changes. It appears that this may have been due to problems with resource availability from this group during the scheduled time for the event. This lack of representation ultimately led to doubts both by members of the team and members of management over whether the event results were realistic. In describing management interaction with the team during the event (in the Event Information Sheet), the facilitator indicated that one of the criticisms that the member of management reviewing the solution had was that it did not take into account the realities of the workplace. In addition, in the descriptions of the biggest obstacles to team success, one team member listed "not being allowed to be realistic" as the biggest obstacle. A second respondent listed "lack of support from the process contributors to the team" as one of the biggest obstacles. Finally, one of the individual items related to the Management Support variable in the Report Out Survey is, "Our Kaizen event team had enough help from others in our organization to get our work done." The within-team median score for this item was 4.00 and the within-team mean score was 3.60 (between "tend to disagree" and "tend to agree"), indicating that the team thought that support from others in the organization could have been improved.

Finally, team decision-making authority (team autonomy) was ultimately limited in this event. Although apparently accorded the authority to make decisions to improve the work area in the stated goals of the event, in practice, the team's decision-making authority was entirely negated by management at the report out meeting. As described earlier, data provided by the event facilitator indicate that management ultimately rejected many of the team's solutions during the report out meeting. Additional data from the Event Information Sheet indicate that, during this meeting, the member of management reviewing the solution directly criticized the team's solution. In addition, the report out meeting was informal and included only a management representative, rather than a broad spectrum of managers, whose attendance would have more strongly demonstrated support for the event and the team's decision-making. Furthermore, in the descriptions of the biggest obstacles to team success, one respondent listed "management's [lack of] willingness to change," as one of the biggest obstacles, further indicating that management support for the decisions made during the event (and thereby its willingness to empower the team to make changes) was not evident. Most Kaizen event resources suggest setting certain boundary conditions (e.g., cost), and then giving the team a high degree of autonomy in deciding what solutions to implement, as long as boundary conditions are met (Oakeson, 1997; Sheridan, 1997; Minton, 1998;
Laraia et al., 1999). Finally, although the team median and team mean score for the Report Out Survey variable Team Autonomy were tentatively positive (4.33 and 4.12, respectively), the RMQ event had the fourth lowest Team Autonomy score in the data set (bottom 8%) and the mean score for team autonomy across all 51 events in the data set was 4.82. For the 13 non-implementation events only, the RMQ event had the second lowest score for Team Autonomy, while the mean score for non-implementation events was 4.81. It has been suggested that the high degree of autonomy generally accorded to the team may be a key factor in why Kaizen event programs appear (at least based on anecdotal evidence) to be more accepted by employees than many previous improvement programs, such as quality circles. Kaizen teams are often allowed to actually implement changes during the event, so participating employees can directly see that their effort makes a difference and that management trusts them to make decisions to improve the organization. In contrast, in traditional improvement approaches, such as quality circles, employees are generally only given the power to suggest changes, which must then be approved by management prior to implementation (Mohr and Mohr, 1983; Cohen and Bailey, 1997; Laraia et al., 1999). The current case example was designed to be a non-implementation event (i.e., planning only), but even in this case, management could have accorded the team more authority. Failure to allow the team's solutions to be implemented after the event demonstrates a lack of confidence in the team's decisions, and also changes the Kaizen event model to be more similar in practice to the quality circle model in both process and results. In fact, Lawler and Mohrman (1985, 1987) argue that this type of management response was a key factor leading to the demise of U.S. quality circles in the 1980s: employees were initially enthusiastic about participating in quality circles, but soon saw that they had no actual power to achieve change, as management often rejected their suggestions and decisions. Similarly, work motivation theory suggests that inequity between perceived inputs (e.g., employee time and effort) and perceived outcomes (e.g., management acceptance of a team's solution) could lead to decreased motivation to perform similar tasks in the future, employee withdrawal and, ultimately, lower outcomes for future events (Adams, 1965; Alderfer, 1969). Thus, it remains to be investigated how this change to the prescribed Kaizen event process, if it is replicated across additional Kaizen events, will ultimately impact the sustainability of individual event results and the Kaizen event program as a whole.
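
The "bottom N%" figures quoted for the RMQ event follow directly from its rank position among the 51 phase one events. A minimal sketch of that arithmetic, using only the rank positions stated in the text (the function name is ours):

```python
def bottom_share_percent(rank_from_bottom: int, n_events: int) -> int:
    """Percent of events at or below the given rank, rounded to a whole percent."""
    if not 1 <= rank_from_bottom <= n_events:
        raise ValueError("rank must be between 1 and n_events")
    return round(100 * rank_from_bottom / n_events)


N_EVENTS = 51  # phase one data set

# Rank positions (counted from the lowest score) as reported in the text.
rmq_ranks = {"Goal Clarity": 6, "Internal Processes": 5, "Team Autonomy": 4}

for variable, rank in rmq_ranks.items():
    print(f"{variable}: bottom {bottom_share_percent(rank, N_EVENTS)}%")
```

This reproduces the reported 12%, 10%, and 8% for Goal Clarity, Internal Processes, and Team Autonomy, respectively.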

Discussion and Future Research

This research demonstrates how the detailed examination of a less successful case provided a valuable opportunity for organizational learning. The identification of the three factors presented above, which apparently limited the success of the specific RMQ event, provided valuable information for learning for the case study organization, and may ultimately contribute to improved effectiveness of the organization's Kaizen event program as a whole. By identifying three key variables that appear to impact event success, the case study organization can now seek to develop mechanisms that can be used to influence or control these variables. A few of the mechanisms recommended were: communicating upfront with sponsors to ensure an interactive presentation of team goals, including the opportunity for the team to ask questions to clarify sponsor expectations; getting management agreement to institute a policy of not moving forward with an event without representation on the team from all key functions; and negotiating with management to more clearly define the boundaries of team authority and to develop mechanisms for more clearly and strongly communicating management support for the team's decision-making. The organization has already implemented some of these corrective mechanisms, with apparent success. On the next event studied within the organization, the facilitator of the event indicated that, based on the findings from the RMQ event, he ensured that the event sponsor presented the goals to the team in an interactive format, where team members were encouraged to ask questions to clarify the goals. The Goal Clarity score was noticeably higher for this event, although it is, of course, impossible to prove that this was solely the result of his intervention. In general, the case study organization has already demonstrated that it is proactive in learning from its events. The Kaizen event facilitators routinely hold informal sessions where they share more and less successful elements from their events with one another. It is likely the organization will continue to seek to improve Kaizen processes based on examination of both more and less successful events.

From a research perspective, the case illustrates how the methods and measures used in the current study allow triangulation of multiple data types and sources to draw more robust conclusions. As has been demonstrated, the importance of all three factors to the event outcomes is supported by two sources of data (the facilitator and the team) and by both qualitative and quantitative measures, as well as by results from the larger research program. In addition, the case study results highlight the need for additional research on Kaizen event effectiveness by illustrating how the design and results of Kaizen events can vary, even within organizations that are experienced in using Kaizen events. The results also indicate how even organizations with significant maturity in their Kaizen event programs still sometimes experience difficulty in achieving optimal event designs. Finally, the case illustrates the importance of research on the sustainability of event outcomes, and event programs as a whole, by illustrating a factor (management support for team decision-making authority) that may ultimately impact the sustainability of event outcomes and the Kaizen event program as a whole. Future research should focus on identifying factors related to the sustainability of outcomes, as well as further testing of factors associated with initial outcomes. For instance, future research should continue to investigate the impact of the three factors identified in this study to confirm their importance to Kaizen event effectiveness. This research should include additional case studies of more and less successful events and analysis of larger, multi-event data sets. As discussed, the larger research study of 51 events, which was completed after an earlier version of this paper was published (Farris et al., 2006²), has already confirmed the importance of goal clarity and team autonomy to initial outcomes. The importance of adequacy of functional representation (i.e., whether or not all key functions were represented on the team) has not been specifically investigated in the larger study, although cross-functional heterogeneity is a variable in the larger research. Perhaps additional case studies of less successful events could be used to determine whether or not this factor is a common theme in less successful Kaizen events and whether or not its impact should be investigated statistically in the future.

Finally, for the practicing engineering manager, who may be responsible for sponsoring Kaizen events, conducting Kaizen events, participating in Kaizen events, or overseeing employees who do, this research highlights three variables that should be considered in event design, as well as the consequences of not carefully managing these variables. For instance, engineering managers sponsoring Kaizen events might be tempted to reserve decision-making authority solely for themselves while relegating the team to an advisory role. This research, and related literature, suggests that the manager would be better off chartering the creation of a competent team with full functional representation, giving them clear goals (allowing them to clarify these goals, if necessary), specifying important boundaries, and then abiding by whatever solution the team develops during the solution process, at least until this solution is proven inadequate. In addition, this research provides a set of measurement instruments and methods that can be used to assess Kaizen event design and initial outcomes. These instruments consist of two short survey questionnaires completed by Kaizen event team members and a questionnaire completed by the Kaizen event facilitator, as well as a team activities log. Due to scope and space constraints, these instruments are not presented here, but are available in other publications (Farris, 2006). We invite interested individuals to contact the authors for copies of these instruments.

Acknowledgments

This research was supported by the National Science Foundation under grant No. DMI-0451512. We also gratefully acknowledge the Kaizen event team members, facilitators, and coordinators for their participation in data collection and validation. Finally, we thank the four anonymous reviewers for their constructive and insightful feedback. An earlier version of this paper appeared in the 2006 American Society for Engineering Management Conference Proceedings.

References

Adams, John S., "Inequity in Social Exchange," in Advances in Experimental Social Psychology, Leonard Berkowitz (ed.), Vol. 62 (1965), pp. 335-343, Academic Press.

Alderfer, Clayton P., "An Empirical Test of a New Theory of Human Needs," Organizational Behavior and Human Performance, 4 (1969), pp. 142-175.

Borgatta, Edward F., and George W. Bohrnstedt, "Level of Measurement: Once and Over Again," Sociological Methods and Research, 9:2 (1980), pp. 147-160.

Cohen, Susan G., and Diane E. Bailey, "What Makes Teams Work: Group Effectiveness Research from the Shop Floor to the Executive Suite," Journal of Management, 23:3 (1997), pp. 239-290.

Cole, Robert E., "The Quality Revolution," Production and Operations Management, 1:1 (Winter 1992), pp. 118-120.

Cuscela, Kristin N., "Kaizen Blitz Attacks Work Processes at Dana Corp.," IIE Solutions, 30:4 (April 1998), pp. 29-31.

Farris, Jennifer, "An Empirical Investigation of Kaizen Event Effectiveness: Outcomes and Critical Success Factors," Ph.D. Dissertation (2006), Virginia Tech.

Farris, Jennifer, Eileen M. Van Aken, and Toni L. Doolen, "Studying Kaizen Event Outcomes and Critical Success Factors: A Model-Based Approach," Proceedings of the Industrial Engineering and Research Conference (May 2006¹), CD-ROM.

Farris, Jennifer, Eileen M. Van Aken, Toni L. Doolen, and June Worley, "Learning from Kaizen Events: A Research Methodology for Determining the Characteristics of More - and Less - Successful Events," Proceedings of the American Society for Engineering Management Conference (October 2006²), CD-ROM.

Farris, Jennifer, Eileen M. Van Aken, Toni L. Doolen, and June Worley, "A Study of Mediating Relationships on Kaizen Event Teams," Proceedings of the Industrial Engineering and Research Conference (May 2007), CD-ROM.

Gaito, John, "Measurement Scales and Statistics: Resurgence of an Old Misconception," Psychological Bulletin, 87:3 (1980), pp. 564-567.

Goldstein, Gerald, and Michel Hersen, Handbook of Psychological Assessment, Pergamon Press (1984).

Hand, David J., "Statistics and the Theory of Measurement," Journal of the Royal Statistical Society, 159:3 (1996), pp. 445-492.

Laraia, Anthony C., Patricia E. Moody, and Robert W. Hall, The Kaizen Blitz: Accelerating Breakthroughs in Productivity and Performance, The Association for Manufacturing Excellence (1999).

Lawler, Edward E., and Susan A. Mohrman, "Quality Circles After the Fad," Harvard Business Review, 63:1 (Jan/Feb 1985), pp. 64-71.

Lawler, Edward E., and Susan A. Mohrman, "Quality Circles: After the Honeymoon," Organizational Dynamics, 15:4 (Spring 1987), pp. 42-54.

Letens, Geert, Jennifer Farris, and Eileen M. Van Aken, "Development and Application of a Framework for the Design and Assessment of a Kaizen Program," Proceedings of the American Society for Engineering Management Conference (October 2006), CD-ROM.

Likert, Rensis, "A Technique for the Measurement of Attitudes," Archives of Psychology, 140 (1932), pp. 5-55.

Lord, Frederic M., "On the Statistical Treatment of Football Numbers," The American Psychologist, 8 (1953), pp. 750-751.

Lounamaa, Pertti H., and James G. March, "Adaptive Coordination of a Learning Team," Management Science, 33:1 (January 1987), pp. 107-123.

Melnyk, Stephen A., Roger J. Calantone, Frank L. Montabon, and Richard T. Smith, "Short-Term Action in Pursuit of Long-Term Improvements: Introducing Kaizen Events," Production and Inventory Management Journal, 39:4 (Fourth Quarter 1998), pp. 69-76.

Michell, Joel, "Measurement Scales and Statistics: A Clash of Paradigms," Psychological Bulletin, 100:3 (1986), pp. 398-407.

Minton, Eric, "Profile: Luke Faulstick Baron of Blitz Has Boundless Vision of Continuous Improvement," Industrial Management, 40:1 (Jan/Feb 1998), pp. 14-21.

Mohr, William L., and Harriet Mohr, Quality Circles, Addison-Wesley Publishing Company (1983).

Oakeson, Mark, "Kaizen Makes Dollars & Sense for Mercedes-Benz in Brazil," IIE Solutions, 29:4 (1997), pp. 32-35.

Sheridan, John H., "Kaizen Blitz," Industry Week, 246:16 (September 1, 1997), pp. 18-27.

Sitkin, Sim B., "Learning Through Failure: The Strategy of Small Losses," in Research in Organizational Behavior, L.L. Cummings and B.M. Staw (Eds.), 14 (1992), pp. 231-266, JAI Press.

Stevens, Stanley S., "On the Theory of Scales of Measurement," Science, 103 (1946), pp. 667-680.

Stevens, Stanley S., "Mathematics, Measurement and Psychophysics," in Handbook of Experimental Psychology, S.S. Stevens (Ed.), (1951), pp. 1-49, Wiley.

Townsend, James T., and F.G. Ashby, "Measurement Scales and Statistics: The Misconception Misconceived," Psychological Bulletin, 96:2 (1984), pp. 394-401.

Velleman, Paul F., and Leland Wilkinson, "Nominal, Ordinal, Interval and Ratio Typologies are Misleading," The American Statistician, 47:1 (1993), pp. 65-72.

Vitalo, Ralph L., Frank Butz, and Joseph P. Vitalo, Kaizen Desk Reference Standard, Vital Enterprises (2003).

Womack, James P., Daniel T. Jones, and Daniel Roos, The Machine that Changed the World, Rawson Associates (1990).

Yin, Robert K., Case Study Research Design and Methods, 3rd edition, Sage Publications, Inc. (2003).

Zumbo, Bruno D., and Donald W. Zimmerman, "Is the Selection of Statistical Methods Governed by Level of Measurement?" Canadian Psychologist, 34 (1993), pp. 390-400.

About the Authors

Jennifer A. Farris is an assistant professor in the Department

of Industrial Engineering at Texas Tech University. She

received her BS in industrial engineering from the University

of Arkansas and her MS and PhD in industrial and systems

engineering from the Grado Department of Industrial

and Systems Engineering at Virginia Tech, where she also

served as a Graduate Research Assistant and a Postdoctoral

Associate in the Enterprise Engineering Research Lab.

Her research interests are in performance measurement,

product development, kaizen event processes, and healthcare

performance improvement. She is a member of IIE and

Alpha Pi Mu.

Eileen M. Van Aken is an associate professor and associate

department head in the Grado Department of Industrial and

Systems Engineering at Virginia Tech. She is the founder

and director of the Enterprise Engineering Research Lab,

conducting research with organizations on performance

measurement, organizational improvement methods, lean

work systems, and team-based work systems. Prior to joining

the ISE faculty at Virginia Tech in 1996, she was employed

for seven years at the Center for Organizational Performance

Improvement at Virginia Tech, and was also employed by

AT&T Microelectronics in Virginia where she worked in

both process and product engineering. She received her BS,

MS, and PhD degrees in industrial engineering from Virginia

Tech. She is a senior member of IIE and ASQ, and is a member

of ASEM and ASEE. She is a Fellow of the World Academy of

Productivity Science.

Toni L. Doolen is an associate professor in the School

of Mechanical, Industrial & Manufacturing Engineering

at Oregon State University. Prior to joining the faculty at

OSU, Toni gained 11 years of manufacturing experience at

Hewlett-Packard Company as an engineer, senior member

of technical staff, and engineering manager. Toni received a

BS in electrical engineering and a BS in materials science and

engineering from Cornell University, an MS in manufacturing

systems engineering from Stanford University, and a PhD in

industrial engineering at Oregon State University. She is a

senior member of SWE, IIE, and SME. She is also a member

of ASEE.

June M. Worley is a PhD candidate in the Mechanical,

Industrial, and Manufacturing Engineering Department

at Oregon State University. She received a BS in computer

science and a BS in mathematical sciences from Oregon State

University. She received an MS in industrial engineering

from Oregon State University, completing a thesis on the

sociocultural factors of a lean manufacturing implementation.

Her research interests include systems analysis, information

systems engineering, lean manufacturing, performance

metrics, and human systems engineering. Prior industry

experience includes five years in operations management at a

regional bank and over three years of technical experience in

project analysis and implementation.

Contact: Jennifer A. Farris, PhD, Texas Tech University,

Industrial Engineering Building Room 201 (3061) Lubbock,

TX 79409-3061; phone: 806-742-3543; jennifer.farris@ttu.edu


- Literature Review Manufacturing IndustryDocument9 pagesLiterature Review Manufacturing Industryafmzuiffugjdff100% (1)

- Business Process Analytics A Dedicated Methodology Through A Case StudyDocument18 pagesBusiness Process Analytics A Dedicated Methodology Through A Case StudyelfowegoNo ratings yet

- Title of Your Paper: Information Systems Implementation NameDocument11 pagesTitle of Your Paper: Information Systems Implementation NameAbeer ArifNo ratings yet

- The Impact of External and Internal Factors On The Management Accounting PracticesDocument13 pagesThe Impact of External and Internal Factors On The Management Accounting PracticesAnish NairNo ratings yet

- Scientific Paper Melvin Yip 4361121Document18 pagesScientific Paper Melvin Yip 4361121zabrina nuramaliaNo ratings yet

- 4 Damian Casestudy Ese 2004Document31 pages4 Damian Casestudy Ese 2004Psicologia ClinicaNo ratings yet

- A Study On Employee Productivity and Its Impact On Tata Consultancy Services Limited Pune (Maharashtra)Document9 pagesA Study On Employee Productivity and Its Impact On Tata Consultancy Services Limited Pune (Maharashtra)International Journal of Business Marketing and ManagementNo ratings yet

- Management Science Letters: Compatibility of Accounting Information Systems (Aiss) With Activities in Production CycleDocument8 pagesManagement Science Letters: Compatibility of Accounting Information Systems (Aiss) With Activities in Production Cyclew arfandaNo ratings yet

- Criteria For Measuring KM Performance Outcomes in Organisations (For AMHAZ)Document20 pagesCriteria For Measuring KM Performance Outcomes in Organisations (For AMHAZ)Georges E. KalfatNo ratings yet

- Knowledge ManagementDocument16 pagesKnowledge ManagementDamaro ArubayiNo ratings yet

- Analysis of Systems Development Project Risks: An Integrative FrameworkDocument20 pagesAnalysis of Systems Development Project Risks: An Integrative FrameworkJayaletchumi MoorthyNo ratings yet

- EEFs and Tools of Impact AnalysisDocument7 pagesEEFs and Tools of Impact AnalysisArkabho BiswasNo ratings yet

- Bhattacharyya 2013Document17 pagesBhattacharyya 2013airish21081501No ratings yet

- Influence of Organisational Resources On Performance of ISO Certified Organisations in KenyaDocument15 pagesInfluence of Organisational Resources On Performance of ISO Certified Organisations in KenyaFekadu ErkoNo ratings yet

- Decision Making Through Operations Research - HyattractionsDocument8 pagesDecision Making Through Operations Research - HyattractionsBruno SaturnNo ratings yet

- Reconceptualising The Information System As A Service (Research in Progress)Document12 pagesReconceptualising The Information System As A Service (Research in Progress)AldimIrfaniVikriNo ratings yet

- The Impact of Accounting Information SystemsDocument22 pagesThe Impact of Accounting Information Systemsjikee11No ratings yet

- Towards The Integration of Event Management Best PracticeDocument12 pagesTowards The Integration of Event Management Best PracticeKaren AngNo ratings yet

- Reaction Paper - 2 "The Problem With Workarounds Is That They Work: The Persistence of Resource Shortages"Document5 pagesReaction Paper - 2 "The Problem With Workarounds Is That They Work: The Persistence of Resource Shortages"Shaneil Matula100% (1)

- "Fleshing Out" An Engagement With A Social Accounting Technology Michael FraserDocument14 pages"Fleshing Out" An Engagement With A Social Accounting Technology Michael FraserRian LiraldoNo ratings yet

- Applying Business Analytics For Performance Measurement and Management. The Case Study of A Software CompanyDocument31 pagesApplying Business Analytics For Performance Measurement and Management. The Case Study of A Software Companyjincy majethiyaNo ratings yet

- The Impact of Enterprise Resource Planning (ERP) On The Internal Controls Case Study: Esfahan Steel CompanyDocument11 pagesThe Impact of Enterprise Resource Planning (ERP) On The Internal Controls Case Study: Esfahan Steel CompanyTendy Kangdoel100% (1)

- The Impact of Accounting Information System in Decision Making Process in Local Non-Governmental Organization in Ethiopia in Case of Wolaita Development Association, SNNPR, EthiopiaDocument5 pagesThe Impact of Accounting Information System in Decision Making Process in Local Non-Governmental Organization in Ethiopia in Case of Wolaita Development Association, SNNPR, EthiopiaEmesiani TobennaNo ratings yet

- Quality of Routine SosDocument21 pagesQuality of Routine SosDan BeringuelaNo ratings yet

- Ferrer 2020 The Missing Link in Project GovernaDocument16 pagesFerrer 2020 The Missing Link in Project Governarodrigo_domNo ratings yet

- A Framework of Problem Diagnosis For ERP ImplementDocument26 pagesA Framework of Problem Diagnosis For ERP ImplementChristian John RojoNo ratings yet

- Topic 7 Queuing TheoryDocument6 pagesTopic 7 Queuing TheoryAreda BatuNo ratings yet

- Review of Marketing ResearchDocument353 pagesReview of Marketing ResearchSangram100% (2)

- Obando ShisanyaDocument23 pagesObando ShisanyaAreda BatuNo ratings yet

- Export Division ProsodyDocument71 pagesExport Division ProsodyFahad ChowdhuryNo ratings yet

- Textile Fibers: Prof. Aravin Prince PeriyasamyDocument46 pagesTextile Fibers: Prof. Aravin Prince PeriyasamyAreda BatuNo ratings yet

- How Does One Extract The Finest Cellulose Fibers From Bamboo Without Using Caustic ChemicalsDocument2 pagesHow Does One Extract The Finest Cellulose Fibers From Bamboo Without Using Caustic ChemicalsAreda BatuNo ratings yet

- Erp Comparison enDocument16 pagesErp Comparison enAreda BatuNo ratings yet

- Learning From Less Successful Kaizen Events: A Case StudyDocument12 pagesLearning From Less Successful Kaizen Events: A Case StudyAreda BatuNo ratings yet

- B - ELSB - Cat - 2020 PDFDocument850 pagesB - ELSB - Cat - 2020 PDFanupamNo ratings yet

- Future Scope and ConclusionDocument13 pagesFuture Scope and ConclusionGourab PalNo ratings yet

- Handout No. 03 - Purchase TransactionsDocument4 pagesHandout No. 03 - Purchase TransactionsApril SasamNo ratings yet

- GutsDocument552 pagesGutsroparts cluj100% (1)

- The Role of Leadership On Employee Performance in Singapore AirlinesDocument42 pagesThe Role of Leadership On Employee Performance in Singapore Airlineskeshav sabooNo ratings yet

- Jahnteller Effect Unit 3 2017Document15 pagesJahnteller Effect Unit 3 2017Jaleel BrownNo ratings yet

- Egalitarianism As UK: Source: Hofstede Insights, 2021Document4 pagesEgalitarianism As UK: Source: Hofstede Insights, 2021kamalpreet kaurNo ratings yet

- 实用多元统计分析Document611 pages实用多元统计分析foo-hoat LimNo ratings yet

- Working With Hierarchies External V08Document9 pagesWorking With Hierarchies External V08Devesh ChangoiwalaNo ratings yet

- Examples Week1 CompressDocument6 pagesExamples Week1 CompressAngel HuitradoNo ratings yet

- Clay and Shale, Robert L VirtaDocument24 pagesClay and Shale, Robert L VirtaRifqi Brilyant AriefNo ratings yet