Professional Documents

Culture Documents

Assign 1

Uploaded by

darkmanhiOriginal Description:

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Assign 1

Uploaded by

darkmanhiCopyright:

Available Formats

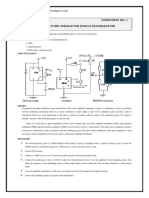

Korea Advanced Institute of Science and Technology

Department of Electrical Engineering & Computer Science

EE531 Statistical Learning Theory, Spring 2011

Assignment I

Issued: Mar 02, 2011

Due: Mar 16, 2011

Policy

Group study is encouraged; however, the assignment that you hand in must be your own work. Anyone suspected of copying others will be penalized. The homework will take a considerable amount of time, so start early. There are MATLAB problems in the assignment, and they may require some self-study.

1. (Probability distributions) A family of probability distributions called the exponential family has a special form given by

$$p(x|\eta) = h(x)\, g(\eta) \exp\{\eta^T u(x)\} \tag{1}$$

where $x$ may be a scalar or vector random variable, and may be continuous or discrete. Here $\eta$ is called the natural parameters of the distribution and can be scalar or vector, and $u(x)$ is some function of $x$. The function $g(\eta)$ can be interpreted as the coefficient that ensures that the distribution is normalized and therefore satisfies

$$g(\eta) \int h(x) \exp\{\eta^T u(x)\}\, dx = 1$$

where the integration is replaced by summation if $x$ is a discrete variable. Consider a single binary random variable $x \in \{0, 1\}$ such that its probability of $x = 1$ is denoted by the parameter $\mu$ so that

$$p(x = 1|\mu) = \mu$$

where $0 \le \mu \le 1$. Then the probability distribution over $x$, also known as the Bernoulli distribution, can be written as

$$p(x|\mu) = \mathrm{Bern}(x|\mu) = \mu^x (1-\mu)^{1-x}.$$

Expressing the right-hand side as the exponential of the logarithm, we have

$$p(x|\mu) = \exp\{x \ln \mu + (1-x) \ln(1-\mu)\} = (1-\mu) \exp\left\{\ln\left(\frac{\mu}{1-\mu}\right) x\right\} \tag{2}$$

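Comparison with (1) allows us to identify the natural parameter $\eta = \ln\left(\frac{\mu}{1-\mu}\right)$, which gives $\mu = \sigma(\eta)$ with the logistic sigmoid $\sigma(\eta) = 1/(1+\exp(-\eta))$, together with

$$u(x) = x$$

$$h(x) = 1$$

$$g(\eta) = \sigma(-\eta) = \frac{1}{1 + \exp(\eta)}.$$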
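The identification above can be checked numerically. The following is a minimal Python sketch, not part of the assignment; the function names `bernoulli_pmf` and `bernoulli_expfam` are illustrative, and it assumes the natural parameter is the log-odds $\eta = \ln(\mu/(1-\mu))$.

```python
import math

def bernoulli_pmf(x, mu):
    """Bern(x | mu) = mu^x (1 - mu)^(1 - x) for x in {0, 1}."""
    return mu ** x * (1.0 - mu) ** (1 - x)

def bernoulli_expfam(x, mu):
    """Same pmf written as h(x) g(eta) exp(eta * u(x))."""
    eta = math.log(mu / (1.0 - mu))   # natural parameter (log-odds), assumed form
    h = 1.0                           # h(x) = 1
    g = 1.0 / (1.0 + math.exp(eta))   # g(eta) = sigma(-eta), which equals 1 - mu
    u = x                             # u(x) = x
    return h * g * math.exp(eta * u)

# Print both forms side by side for a few parameter values
for mu in (0.1, 0.5, 0.9):
    for x in (0, 1):
        print(mu, x, bernoulli_pmf(x, mu), bernoulli_expfam(x, mu))
```

The same pattern (write the density directly, write it again from $h$, $g$, $u$ and $\eta$, then compare) can serve as a sanity check for the other distributions in this problem.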

For each of the following distributions, prove that it is part of the exponential family by finding $u(x)$, $h(x)$ and $g(\eta)$.


(i) Gaussian:

$$p(x|\mu, \sigma^2) = \mathcal{N}(x|\mu, \sigma^2) = \frac{1}{(2\pi\sigma^2)^{1/2}} \exp\left\{-\frac{1}{2\sigma^2}(x-\mu)^2\right\}$$

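(ii) Beta: $\mu \in [0, 1]$, $a > 0$, $b > 0$

$$p(\mu|a, b) = \mathrm{Beta}(\mu|a, b) = \frac{\Gamma(a+b)}{\Gamma(a)\Gamma(b)} \mu^{a-1} (1-\mu)^{b-1}$$

where $\Gamma(x) = \int_0^\infty u^{x-1} e^{-u}\, du$ (properties: $\Gamma(1) = 1$, $\Gamma(z+1) = z\Gamma(z)$, $\Gamma(n+1) = n!$)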

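(iii) K-dimensional Dirichlet (multivariate version of the Beta distribution) ($\mu = [\mu_1, \ldots, \mu_K]$, $\alpha = [\alpha_1, \ldots, \alpha_K]$):

$$p(\mu|\alpha) = \mathrm{Dir}(\mu|\alpha) = \frac{\Gamma(\alpha_0)}{\Gamma(\alpha_1) \cdots \Gamma(\alpha_K)} \prod_{k=1}^{K} \mu_k^{\alpha_k - 1}$$

where $0 \le \mu_k \le 1$, $\sum_k \mu_k = 1$, $\alpha_0 = \sum_{k=1}^{K} \alpha_k$.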

(iv) Multinomial ($\mu = [\mu_1, \ldots, \mu_K]$)

$$p(m_1, m_2, \ldots, m_K|\mu, N) = \mathrm{Mult}(m_1, m_2, \ldots, m_K|\mu, N) = \frac{N!}{m_1!\, m_2! \cdots m_K!} \prod_{k=1}^{K} \mu_k^{m_k}$$

where $\sum_{k=1}^{K} m_k = N$ and $0 \le \mu_k \le 1$, $\sum_k \mu_k = 1$

(v) Gamma: $\lambda > 0$, $a > 0$, $b > 0$

$$p(\lambda|a, b) = \mathrm{Gam}(\lambda|a, b) = \frac{1}{\Gamma(a)} b^a \lambda^{a-1} \exp(-b\lambda)$$

a. (maximum likelihood) Given a distribution from the exponential family of the form (1), derive a relationship between $E[u(x)]$ and a function of the maximum likelihood estimate of $\eta$.

b. (conjugate prior) When a certain prior distribution, known as the conjugate prior, is assumed for a given likelihood function of a certain restricted form, the posterior distribution has the same functional form as the prior. For example, the beta distribution is the conjugate prior for the Bernoulli likelihood. For each member of the exponential family, there exists a conjugate prior that can be written in the form

$$p(\eta|\chi, \nu) = f(\chi, \nu)\, g(\eta)^{\nu} \exp\{\nu\, \eta^T \chi\} \tag{3}$$

where $f(\chi, \nu)$ is a normalization coefficient, and $g(\eta)$ is the same function that appears in (1). Assuming that $X$ is i.i.d., prove that the conjugate prior can be written this way. Find the conjugate prior for the inverse of the variance of a univariate Gaussian when the mean is assumed to be known. Also, in the Dirichlet case, what is the conjugate prior for the $\alpha_k$?

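c. (conjugate prior) Given $X = [x_1, \ldots, x_N]$,

$$x_i|\mu \sim \mathcal{N}(\mu, \sigma^2) \tag{4}$$

$$\mu \sim \mathcal{N}(\mu_0, \sigma_0^2) \tag{5}$$

and the $x_i$ are i.i.d. What is $p(\mu|X) = \mathcal{N}(\mu|\mu_N, \sigma_N^2)$? In other words, find $\mu_N$ and $\sigma_N^2$.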
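A derived $\mu_N$ and $\sigma_N^2$ can be cross-checked against a brute-force evaluation of the posterior. The sketch below is in Python and is only illustrative: the function name `grid_posterior`, the synthetic data and the prior values are assumptions. It multiplies prior and likelihood on a grid of candidate $\mu$ values and normalizes numerically, without using any closed-form result.

```python
import numpy as np

def grid_posterior(x, sigma, mu0, sigma0, grid):
    """Evaluate p(mu | X) on a grid as prior * likelihood, normalised numerically."""
    log_prior = -0.5 * ((grid - mu0) / sigma0) ** 2
    # log-likelihood of all samples for each candidate mu (additive constants dropped)
    log_lik = -0.5 * (((x[:, None] - grid[None, :]) / sigma) ** 2).sum(axis=0)
    log_post = log_prior + log_lik
    post = np.exp(log_post - log_post.max())   # subtract the max for numerical stability
    dx = grid[1] - grid[0]
    return post / (post.sum() * dx)

rng = np.random.default_rng(0)
x = rng.normal(loc=1.0, scale=2.0, size=20)    # synthetic data (assumed mu = 1, sigma = 2)
grid = np.linspace(-10.0, 10.0, 20001)
post = grid_posterior(x, sigma=2.0, mu0=0.0, sigma0=1.0, grid=grid)

dx = grid[1] - grid[0]
post_mean = (grid * post).sum() * dx                    # numerical posterior mean
post_var = ((grid - post_mean) ** 2 * post).sum() * dx  # numerical posterior variance
print(post_mean, post_var)
```

The printed mean and variance should agree with the analytic $\mu_N$ and $\sigma_N^2$ evaluated for the same data and prior, up to the grid resolution.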

d. (bias, variance) Given an i.i.d. set of data $x_1, x_2, \ldots, x_n \in \mathbb{R}$ drawn from a univariate Gaussian $\mathcal{N}(x|\mu, \sigma^2)$, what are the maximum likelihood estimates of $\mu$ and $\sigma$? Are the estimates biased? What are the squared biases and the variances of the estimates? What are the mean squared errors (MSE) of the estimates?
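Analytic answers to the bias and MSE questions can be compared against a simulation. The following Python sketch is illustrative only: the parameter values and the number of trials are assumptions. It repeats the experiment many times and averages the maximum likelihood estimates of the mean and of the variance $\sigma^2$.

```python
import numpy as np

rng = np.random.default_rng(0)
mu, sigma, n, trials = 0.0, 2.0, 5, 200_000    # assumed values for illustration

samples = rng.normal(mu, sigma, size=(trials, n))
mu_ml = samples.mean(axis=1)                    # ML estimate of the mean, one per trial
var_ml = samples.var(axis=1, ddof=0)            # ML estimate of the variance (divides by n)

print("average of mu_ml :", mu_ml.mean(), "true mean:", mu)
print("average of var_ml:", var_ml.mean(), "true variance:", sigma ** 2)
print("empirical MSE of mu_ml :", ((mu_ml - mu) ** 2).mean())
print("empirical MSE of var_ml:", ((var_ml - sigma ** 2) ** 2).mean())
```

A small `n` is used on purpose so that any systematic gap between the averaged estimate and the true value is easy to see.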


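2. (Bayesian Approach) Consider a binomial random variable $x$ given by

$$\mathrm{Bin}(m|N, \mu) = \binom{N}{m} \mu^m (1-\mu)^{N-m}$$

with the prior distribution for $\mu$ given by the beta distribution,

$$\mathrm{Beta}(\mu|\alpha, \beta) = \begin{cases} \frac{\Gamma(\alpha+\beta)}{\Gamma(\alpha)\Gamma(\beta)} \mu^{\alpha-1} (1-\mu)^{\beta-1} & \text{if } 0 < \mu < 1 \\ 0 & \text{otherwise} \end{cases}$$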

(i) Suppose we have observed $m$ occurrences of $x = 1$ and $l$ occurrences of $x = 0$. Show that the posterior mean value of $x$ lies between the prior mean and the maximum likelihood estimate of $\mu$. To do this, show that the posterior mean can be written as $\lambda$ times the prior mean plus $(1 - \lambda)$ times the maximum likelihood estimate, where $0 \le \lambda \le 1$. From this, what can you infer about the relationship between the prior, posterior and maximum likelihood solutions?
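A quick numerical check of the claimed decomposition is sketched below in Python. The function name `beta_binomial_summary` and the example counts are assumptions for illustration. It uses the conjugacy of the beta prior with the binomial likelihood, so the posterior is Beta($\alpha + m$, $\beta + l$), and then solves for the weight $\lambda$ from the three means.

```python
def beta_binomial_summary(alpha, beta, m, l):
    """Prior mean, ML estimate, posterior mean and the implied mixing weight."""
    prior_mean = alpha / (alpha + beta)
    ml = m / (m + l)
    # posterior is Beta(alpha + m, beta + l) by conjugacy
    post_mean = (alpha + m) / (alpha + beta + m + l)
    # solve post_mean = lam * prior_mean + (1 - lam) * ml for lam
    # (undefined when prior_mean == ml, since every lam then works)
    lam = (post_mean - ml) / (prior_mean - ml)
    return prior_mean, ml, post_mean, lam

print(beta_binomial_summary(alpha=2, beta=8, m=6, l=4))
```

Trying several values of `m` and `l` shows how the weight moves as more data are observed, which is the relationship the question asks about.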

(ii) [MATLAB]

a. First, we set the prior distribution for $\mu$. Plot the beta distribution with $\alpha = 2$, $\beta = 8$.

b. Now use the given data matrix to calculate the likelihood. Load data.mat to get a 10x10 data matrix. Each row is one data sequence of 10 binary values generated independently from a Bernoulli distribution with $\mu = 0.5$, so you can calculate the likelihood of each data sequence using the binomial distribution. Write code that reads each row, calculates the likelihood, and updates the posterior distribution iteratively (10 iterations in total). At each iteration, plot the prior (the posterior of the previous step), the likelihood, and the posterior distribution. Discuss the result.

c. At each iteration, calculate the prior mean, posterior mean and ML estimator of $\mu$. Is the result compatible with (i)? You can check whether $\lambda$ is between 0 and 1.
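The iterative update in parts b and c can be sketched as follows. This is a Python outline rather than the MATLAB solution the problem asks for, and it makes two assumptions: the 10x10 matrix is generated synthetically as a stand-in for data.mat, and plotting is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
data = rng.integers(0, 2, size=(10, 10))   # synthetic stand-in for data.mat (assumption)

alpha, beta = 2.0, 8.0                     # prior Beta(2, 8) from part a
history = []
for row in data:
    m = int(row.sum())                     # number of ones in this sequence
    l = row.size - m                       # number of zeros in this sequence
    prior_mean = alpha / (alpha + beta)
    ml = m / (m + l)                       # ML estimate from this row alone
    alpha, beta = alpha + m, beta + l      # conjugate update: posterior becomes the next prior
    post_mean = alpha / (alpha + beta)
    history.append((prior_mean, ml, post_mean))

for step, (p, e, q) in enumerate(history, start=1):
    print(step, round(p, 3), round(e, 3), round(q, 3))
```

Each printed row gives the prior mean, the ML estimate and the posterior mean for one iteration, which is the comparison part c asks for.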

3. (Bayes risk)

(i) Bayes risk, also known as the average or expected loss, is defined as

$$R = \iint L(\theta, \hat{\theta}(x))\, p(\theta, x)\, dx\, d\theta$$

where $L(\theta, \hat{\theta})$ is the loss function and $\hat{\theta}(x)$ is the estimate of $\theta$. The Bayes estimator $\hat{\theta}(x)$ is an estimator that minimizes the Bayes risk. Show that this could also be obtained by minimizing the posterior risk (also known as the conditional risk) given by

$$R_c = \int L(\theta, \hat{\theta}(x))\, p(\theta|x)\, d\theta.$$

(ii) Prove that when $L(\theta, \hat{\theta}) = (\theta - \hat{\theta})^2$, the Bayes estimate that minimizes the Bayes risk is $E[\theta|x]$. Show that in this case the Bayes estimate never makes the Bayes risk zero.

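(iii) Prove also that when

$$L(\theta, \hat{\theta}) = \begin{cases} 0, & |\theta - \hat{\theta}| \le \frac{\delta}{2} \\ 1, & |\theta - \hat{\theta}| > \frac{\delta}{2} \end{cases}$$

for small $\delta$, the Bayes estimator is the maximum a posteriori (MAP) estimate,

$$\hat{\theta} = \arg\max_{\theta} p(\theta|x).$$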

4. (Bayes Decision)


(i) A bar owner sells fresh draft beer in quantities of 500cc. The owner makes $m$ won for every 500cc of beer that he sells but loses $n$ won for every 500cc of beer that he does not sell. The owner orders beer each day and throws away whatever is left over from the previous day. He orders an amount $\alpha$ of beer each day. Assume the demand for beer, measured in units of 500cc, is a continuous random variable $X$ with probability density function $f(x)$ and cumulative distribution function $F(x)$. Show that the owner's profit will be maximized when $F(\alpha) = m/(m+n)$.
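The stated optimality condition can be checked numerically for one particular demand model. The Python sketch below assumes exponentially distributed demand and made-up values of $m$ and $n$; neither is specified in the problem, and the function name `expected_profit` is illustrative. For exponential demand with mean `scale`, the expected amount sold when ordering $a$ units is $E[\min(X, a)] = \text{scale} \cdot (1 - e^{-a/\text{scale}})$.

```python
import numpy as np

def expected_profit(a, m, n, scale):
    """Expected daily profit when ordering a units and demand X ~ Exponential(mean=scale)."""
    expected_sold = scale * (1.0 - np.exp(-a / scale))   # E[min(X, a)]
    expected_unsold = a - expected_sold                   # E[max(a - X, 0)]
    return m * expected_sold - n * expected_unsold

m, n, scale = 3.0, 1.0, 10.0                 # assumed values for illustration
orders = np.linspace(0.0, 100.0, 100001)     # candidate order quantities
best = orders[np.argmax(expected_profit(orders, m, n, scale))]
cdf_at_best = 1.0 - np.exp(-best / scale)    # F(best) for the exponential demand
print(best, cdf_at_best, m / (m + n))
```

The last two printed numbers should agree up to the grid resolution if the condition holds for this demand model.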

(ii) In many classification problems, you either classify a pattern into one of $N$ classes or reject it as unclassifiable. If the cost (equivalent to loss) of rejection is not too high, then rejection may be a desirable thing to do. Let the cost for a correct answer be 0, for an incorrect answer be $\lambda_s$, and for a reject be $\lambda_r$. Under what conditions on $\Pr(c_i|x)$, $\lambda_s$ and $\lambda_r$ should we reject? (Hint: consider the risk $R(c_i|x)$ for choosing the $i$th class $c_i$ and compare it with $R(\text{reject}|x)$.)
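The comparison suggested in the hint can be written out as a small decision function. This is a Python sketch under the cost structure stated in the problem (0 for correct, $\lambda_s$ for incorrect, $\lambda_r$ for reject); the function name `decide` and the example posteriors are assumptions.

```python
def decide(posteriors, lambda_s, lambda_r):
    """Return the index of the class with minimum conditional risk, or 'reject'."""
    # risk of choosing class i: lambda_s times the probability of being wrong
    risks = [lambda_s * (1.0 - p) for p in posteriors]
    best = min(range(len(risks)), key=risks.__getitem__)
    # rejecting costs lambda_r regardless of the true class
    return "reject" if lambda_r < risks[best] else best

print(decide([0.5, 0.3, 0.2], lambda_s=1.0, lambda_r=0.2))    # ambiguous posterior
print(decide([0.9, 0.05, 0.05], lambda_s=1.0, lambda_r=0.2))  # confident posterior
```

Ties between rejecting and classifying are resolved in favor of classifying here, which is an arbitrary choice.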

5. (Naive Bayes) Consider the spam-mail example that was covered in lecture. For your benefit, it is restated here with slightly different notation but in essentially the same way. The labeled training data are $D = \{X_i, y_i\}$, where the class label $y_i = c \in \{0, 1\}$ is such that $y_i = 1$ for spam mail and $y_i = 0$ for ham mail, and where $X_i = [x_{i1}, x_{i2}, \ldots, x_{iK}]$ is an indicator vector over all the words in the dictionary, i.e. $x_{ij} = 1$ if the $j$th word exists in the mail and $x_{ij} = 0$ if it does not. There are $K$ words in the dictionary. The probability of the $i$th word in class $c$ is denoted $\theta_{i,c}$ (to be clear, class refers to either spam ($c = 1$) or ham ($c = 0$)), and it was estimated (Bayes estimate, conditional mean) from the training data $D$ as

$$\theta_{i,c} = \frac{n_{i,c} + 1}{n_c + 2} \tag{6}$$

where $n_c$ is the count of class $c$ mails and $n_{i,c}$ is the count of the $i$th word in class $c$. Then, using the 0-1 loss and minimizing the Bayes risk (mean risk) defined as

$$E[L(\hat{y}, y)] = \sum_{y} p(\hat{y}, y)\, L(\hat{y}, y) \tag{7}$$

where

$$L(\hat{y}, y) = \begin{cases} 0 & \text{if } \hat{y} = y \\ 1 & \text{if } \hat{y} \ne y \end{cases} \tag{8}$$

the decision rule (Bayes classifier)

$$\hat{y} = \arg\max_{\hat{y}} p(y = \hat{y}|X) \tag{9}$$

was derived.

(i) What assumptions are made in the estimation of Eq. (6)? Be exact in your statement (e.g. something has this probability distribution and the estimate is the (...) of the distribution).

(ii) Derive the decision rule Eq. (9) from Eq. (7).

(iii) Write the decision rule in terms of $p(X|y)$ and $p(y)$. Then assume that the occurrences of words are independent of one another and write the decision rule in terms of $p(x_i|y)$ and $p(y)$, where $X = [x_1, \ldots, x_K]$.
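As a concrete illustration of Eq. (6) combined with a factorized decision rule, the Python sketch below fits the smoothed word probabilities on a made-up toy dataset and classifies by comparing log scores. The function names `fit` and `predict`, the toy data, and the use of the class frequency $n_c / N$ as the estimate of $p(y)$ are all assumptions, not part of the problem statement.

```python
import math

def fit(X, y):
    """Estimate theta[c][j] = (n_jc + 1) / (n_c + 2) and class priors from binary data."""
    theta, prior = {}, {}
    for c in (0, 1):
        rows = [x for x, label in zip(X, y) if label == c]
        n_c = len(rows)
        prior[c] = n_c / len(X)   # assumed prior estimate; breaks down if a class is absent
        theta[c] = [(sum(r[j] for r in rows) + 1) / (n_c + 2) for j in range(len(X[0]))]
    return theta, prior

def predict(x, theta, prior):
    """Pick the class maximising log p(y) + sum_j log p(x_j | y)."""
    def score(c):
        s = math.log(prior[c])
        for xj, t in zip(x, theta[c]):
            s += math.log(t if xj == 1 else 1.0 - t)
        return s
    return max((0, 1), key=score)

# toy data (made up): 3 dictionary words, label 1 = spam, 0 = ham
X = [[1, 1, 0], [1, 0, 0], [0, 0, 1], [0, 1, 1]]
y = [1, 1, 0, 0]
theta, prior = fit(X, y)
print(theta)
print(predict([1, 0, 0], theta, prior), predict([0, 0, 1], theta, prior))
```

Working in log space avoids underflow when the dictionary is large, since the product over words becomes a sum.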


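(iv) Compute the likelihood as

$$\begin{aligned} p(X|y, D) &= \int p(X, \theta|y, D)\, d\theta && (10) \\ &= \int p(X|\theta, y, D)\, p(\theta|D)\, d\theta && (11) \\ &= \int p(X|\theta, y)\, p(\theta|D)\, d\theta && (12) \end{aligned}$$

where it is assumed that $p(X|\theta, y, D) = p(X|\theta, y)$. Assume that the occurrences of words are independent of one another. You may need the following: $\mathrm{Beta}(a, b) = \frac{\Gamma(a+b)}{\Gamma(a)\Gamma(b)} \theta^{a-1} (1-\theta)^{b-1}$ and $\Gamma(n+1) = n\Gamma(n)$.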

(v) Is there any difference between the decision rules derived using the result above, $p(X|y = c) = p(X|y = c, D)$, and using $p(x_i|y = c) = p(x_i|y = c, \theta_{ic})$? Comment.

6. (Nonparametric density estimation)

In this problem, we estimate the underlying probability density function (pdf) of a dataset generated from that pdf by applying the kernel density estimation method. The true pdf is given as follows:

$$x \sim 0.7\, \mathcal{N}(5, 2^2) + 0.3\, \mathcal{N}(5, 0.5^2)$$

(i) Using MATLAB, plot the true probability density function.

(ii) Now, we need to generate samples from the true pdf. Write a MATLAB function [X] = DataGeneration(n) that randomly draws $n$ samples $X = [x_1, x_2, \ldots, x_n]$ from the true pdf. (You may need to use the randn and normpdf functions.) After generating n = 2000 samples, check the generated samples by typing hist(X,250) in the command window. Does the histogram look similar to the true pdf?
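The sampling step in (ii) can be sketched as follows. This is a Python outline of the idea rather than the requested MATLAB function: the names `true_pdf` and `data_generation` are illustrative, and the mixture weights, means and standard deviations are passed as arguments whose defaults are the values as printed in the problem statement. Each sample is drawn by first picking a mixture component and then sampling from that component's Gaussian.

```python
import numpy as np

def true_pdf(x, weights=(0.7, 0.3), means=(5.0, 5.0), sds=(2.0, 0.5)):
    """Density of the Gaussian mixture; defaults are the values as printed above."""
    x = np.asarray(x, dtype=float)
    total = np.zeros_like(x)
    for w, mu, sd in zip(weights, means, sds):
        total += w * np.exp(-0.5 * ((x - mu) / sd) ** 2) / (sd * np.sqrt(2.0 * np.pi))
    return total

def data_generation(n, weights=(0.7, 0.3), means=(5.0, 5.0), sds=(2.0, 0.5), seed=None):
    """Draw n samples: pick a component per sample, then draw from that Gaussian."""
    rng = np.random.default_rng(seed)
    comp = rng.choice(len(weights), size=n, p=weights)   # component index per sample
    return rng.normal(np.asarray(means)[comp], np.asarray(sds)[comp])

X = data_generation(2000, seed=0)
counts, edges = np.histogram(X, bins=250, density=True)  # analogue of hist(X, 250)
print(X.shape, X.mean())
```

Comparing `counts` against `true_pdf` evaluated at the bin centers gives the same visual check the problem asks for with hist.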

(iii) Now it's time to estimate the pdf from the samples obtained in (ii). Write a MATLAB function [h,e]=kernelpdf(X) that estimates the pdf of the input data X of arbitrary length. Use the Epanechnikov kernel and use cross-validation to find the optimal kernel bandwidth h and an estimate of the leave-one-out cross-validation likelihood e. The leave-one-out cross-validation likelihood is as follows (see Lecture Note 5, Slide 7):

$$\frac{1}{n} \sum_{i=1}^{n} \hat{P}_{(-i)}(x_i)$$

Test your function with the n = 2000 dataset. Plot the estimated pdf. (You can use the MATLAB function fminbnd to find the maximum of a function, by minimizing its negative.)

(iv) This time, instead of using the optimal h value, write MATLAB code that takes an arbitrary h as an input parameter ([e] = kernelpdf2(h,X)). Generate 3 datasets (n = 20, 200, 2000), and estimate the pdf for three h values (h = 0.1, 1, 5) for each dataset. Plot the estimated pdfs like the one presented in Lecture Note 5, Slide 14. Based on the plots, discuss the bias and variance of nonparametric density estimation (with regard to the change in n and h).
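A fixed-bandwidth Epanechnikov estimator of the kind described in (iv) might look like the following. This is a Python sketch, not the requested MATLAB code: the function names `epanechnikov`, `kde` and `loo_score` are illustrative, the sample is drawn from a single Gaussian as a stand-in dataset, and the leave-one-out score is taken to be the plain average of the leave-one-out densities, each computed without the point being evaluated.

```python
import numpy as np

def epanechnikov(u):
    """Epanechnikov kernel K(u) = 0.75 (1 - u^2) on |u| <= 1, zero elsewhere."""
    return np.where(np.abs(u) <= 1.0, 0.75 * (1.0 - u ** 2), 0.0)

def kde(points, X, h):
    """Kernel density estimate at `points` from samples X with bandwidth h."""
    u = (np.asarray(points)[:, None] - X[None, :]) / h
    return epanechnikov(u).sum(axis=1) / (len(X) * h)

def loo_score(X, h):
    """Average leave-one-out density: (1/n) * sum_i P_(-i)(x_i)."""
    n = len(X)
    k = epanechnikov((X[:, None] - X[None, :]) / h)
    np.fill_diagonal(k, 0.0)                      # drop each point's own contribution
    return (k.sum(axis=1) / ((n - 1) * h)).mean()

rng = np.random.default_rng(0)
X = rng.normal(5.0, 2.0, size=200)                # stand-in sample (assumption)
grid = np.linspace(-5.0, 15.0, 2001)
for h in (0.1, 1.0, 5.0):
    density = kde(grid, X, h)
    # print the bandwidth, the numerical integral of the estimate, and the LOO score
    print(h, density.sum() * (grid[1] - grid[0]), loo_score(X, h))
```

Plotting `density` against `grid` for each `h` and each sample size shows the trade-off the question asks about: small bandwidths give spiky estimates and large ones oversmooth.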
