Professional Documents

Culture Documents

Chapter2 Solutions

Uploaded by

alantsang2Original Description:

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Chapter2 Solutions

Uploaded by

alantsang2Copyright:

Available Formats

Solutions to the Review Questions at the End of Chapter 2 1.

(a) The use of vertical rather than horizontal distances relates to the idea that the explanatory variable, x, is fixed in repeated samples, so what the model tries to do is to fit the most appropriate value of y using the model for a given value of x. Taking horizontal distances would have suggested that we had fixed the value of y and tried to find the appropriate values of x. (b) When we calculate the deviations of the points, yt, from the fitted values, y t , some points will lie above the line (yt > y t ) and some will lie below the line (yt < y t ). When we calculate the residuals ( ut = yt y t ), those corresponding to points above the line will be positive and those below the line negative, so adding them would mean that they would largely cancel out. In fact, we could fit an infinite number of lines with a zero average residual. By squaring the residuals before summing them, we ensure that they all contribute to the measure of loss and that they do not cancel. It is then possible to define unique (ordinary least squares) estimates of the intercept and slope. (c) Taking the absolute values of the residuals and minimising their sum would certainly also get around the problem of positive and negative residuals cancelling. However, the absolute value function is much harder to work with than a square. Squared terms are easy to differentiate, so it is simple to find analytical formulae for the mean and the variance. 2. The population regression function (PRF) is a description of the model that is thought to be generating the actual data and it represents the true relationship between the variables. The population regression function is also known as the data generating process (DGP). The PRF embodies the true values of and , and for the bivariate model, could be expressed as y t = + x t + u t Note that there is a disturbance term in this equation. In some textbooks, a distinction is drawn between the PRF (the underlying true relationship between y and x) and the DGP (the process describing the way that the actual observations on y come about).

1/7

Introductory Econometrics for Finance Chris Brooks 2008

The sample regression function, SRF, is the relationship that has been estimated using the sample observations, and is often written as y t = + x t Notice that there is no error or residual term in the equation for the SRF: all this equation states is that given a particular value of x, multiplying it by and adding will give the model fitted or expected value for y, denoted y . It is also possible to write y t = + x t + u t This equation splits the observed value of y into two components: the fitted value from the model, and a residual term. The SRF is used to infer likely values of the PRF. That is the estimates and are constructed, for the sample data. 3. An estimator is simply a formula that is used to calculate the estimates, i.e. the parameters that describe the relationship between two or more explanatory variables. There are an infinite number of possible estimators; OLS is one choice that many people would consider a good one. We can say that the OLS estimator is best i.e. that it has the lowest variance among the class of linear unbiased estimators. So it is optimal in the sense that no other linear, unbiased estimator would have a smaller sampling variance. We could define an estimator with a lower sampling variance than the OLS estimator, but it would either be non-linear or biased or both! So there is a trade-off between bias and variance in the choice of the estimator.

4. A list of the assumptions of the classical linear regression models disturbance terms is given in Box 2.3 on p.44 of the book. We need to make the first four assumptions in order to prove that the ordinary least squares estimators of and are best, that is to prove that they have minimum variance among the class of linear unbiased estimators. The theorem that proves that OLS estimators are BLUE (provided the assumptions are fulfilled) is known as the Gauss-Markov theorem. If these assumptions are violated (which is dealt with in Chapter 4), then it may be that OLS estimators are no longer unbiased or efficient. That is, they may be inaccurate or subject to fluctuations between samples. We needed to make the fifth assumption, that the disturbances are normally distributed, in order to make statistical inferences about the population parameters from the sample data, i.e. to test hypotheses about the coefficients. Making this assumption implies that test statistics will follow a tdistribution (provided that the other assumptions also hold).

2/7

Introductory Econometrics for Finance Chris Brooks 2008

5. If the models are linear in the parameters, we can use OLS. (2.57) Yes, can use OLS since the model is the usual linear model we have been dealing with. (2.58) Yes. The model can be linearised by taking logarithms of both sides and by rearranging. Although this is a very specific case, it has sound theoretical foundations (e.g. the Cobb-Douglas production function in economics), and it is the case that many relationships can be approximately linearised by taking logs of the variables. The effect of taking logs is to reduce the effect of extreme values on the regression function, and it may be possible to turn multiplicative models into additive ones which we can easily estimate. (2.59) Yes. We can estimate this model using OLS, but we would not be able to obtain the values of both and , but we would obtain the value of these two coefficients multiplied together. (2.60) Yes, we can use OLS, since this model is linear in the logarithms. For those who have done some economics, models of this kind which are linear in the logarithms have the interesting property that the coefficients ( and) can be interpreted as elasticities. (2.61). Yes, in fact we can still use OLS since it is linear in the parameters. If we make a substitution, say qt = xtzt, then we can run the regression yt = +qt + ut as usual. So, in fact, we can estimate a fairly wide range of model types using these simple tools. 6. The null hypothesis is that the true (but unknown) value of beta is equal to one, against a one sided alternative that it is greater than one: H0 : = 1 H1 : > 1 The test statistic is given by test stat = * 1.147 1 = = 2.682 0.0548 SE ( )

We want to compare this with a value from the t-table with T-2 degrees of freedom, where T is the sample size, and here T-2 =60. We want a value with 5% all in one tail since we are doing a 1-sided test. The critical t-value from the t-table is 1.671:

3/7

Introductory Econometrics for Finance Chris Brooks 2008

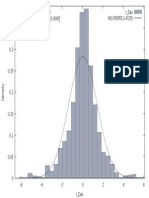

f(x)

5% rejection region

+1.671

The value of the test statistic is in the rejection region and hence we can reject the null hypothesis. We have statistically significant evidence that this security has a beta greater than one, i.e. it is significantly more risky than the market as a whole. 7. We want to use a two-sided test to test the null hypothesis that shares in Chris Mining are completely unrelated to movements in the market as a whole. In other words, the value of beta in the regression model would be zero so that whatever happens to the value of the market proxy, Chris Mining would be completely unaffected by it. The null and alternative hypotheses are therefore: H0 : = 0 H1 : 0 The test statistic has the same format as before, and is given by: test stat = * 0.214 0 = = 1.150 SE ( ) 0.186

We want to find a value from the t-tables for a variable with 38-2=36 degrees of freedom, and we want to look up the value that puts 2.5% of the distribution in each tail since we are doing a two-sided test and we want to have a 5% size of test over all:

4/7

Introductory Econometrics for Finance Chris Brooks 2008

2.5% rejection region

2.5% rejection region

-2.03

+2.03

The critical t-value is therefore 2.03. Since the test statistic is not within the rejection region, we do not reject the null hypothesis. We therefore conclude that we have no statistically significant evidence that Chris Mining has any systematic risk. In other words, we have no evidence that changes in the companys value are driven by movements in the market. 8. A confidence interval for beta is given by the formula: ( SE ( ) t crit , SE ( ) t crit ) Confidence intervals are almost invariably 2-sided, unless we are told otherwise (which we are not here), so we want to look up the values which put 2.5% in the upper tail and 0.5% in the upper tail for the 95% and 99% confidence intervals respectively. The 0.5% critical values are given as follows for a t-distribution with T-2=38-2=36 degrees of freedom:

5/7

Introductory Econometrics for Finance Chris Brooks 2008

0.5% rejection region

0.5% rejection region

-2.72

+2.72

The confidence interval in each case is thus given by (0.214 0.186*2.03) for a 95% confidence interval, which solves to (-0.164, 0.592) and (0.214 0.186*2.72) for a 99% confidence interval, which solves to (0.292,0.720) There are a couple of points worth noting. First, one intuitive interpretation of an X% confidence interval is that we are X% sure that the true value of the population parameter lies within the interval. So we are 95% sure that the true value of beta lies within the interval (-0.164, 0.592) and we are 99% sure that the true population value of beta lies within (-0.292, 0.720). Thus in order to be more sure that we have the true vale of beta contained in the interval, i.e. as we move from 95% to 99% confidence, the interval must become wider. The second point to note is that we can test an infinite number of hypotheses about beta once we have formed the interval. For example, we would not reject the null hypothesis contained in the last question (i.e. that beta = 0), since that value of beta lies within the 95% and 99% confidence intervals. Would we reject or not reject a null hypothesis that the true value of beta was 0.6? At the 5% level, we should have enough evidence against the null hypothesis to reject it, since 0.6 is not contained within the 95% confidence interval. But at the 1% level, we would no longer have sufficient evidence to reject the null hypothesis, since 0.6 is now contained within the interval. Therefore we should always if possible conduct some sort of sensitivity analysis to see if our conclusions are altered by (sensible) changes in the level of significance used. 9. We test hypotheses about the actual coefficients, not the estimated values. We want to make inferences about the likely values of the population parameters (i.e. to test hypotheses about them). We do not need to test

6/7

Introductory Econometrics for Finance Chris Brooks 2008

hypotheses about the estimated values since we know exactly what our estimates are because we calculated them!

7/7

Introductory Econometrics for Finance Chris Brooks 2008

You might also like

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (890)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Ex2 System PDFDocument0 pagesEx2 System PDFdejaytop19820% (1)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- GMAT Quantitative Test 1 SummaryDocument174 pagesGMAT Quantitative Test 1 SummaryBrian TranNo ratings yet

- Andres Caracoles Drax Eletropaulo Gener Dom - Rep. Argentina U.K Brazil Chile CG CS CS LU CGDocument13 pagesAndres Caracoles Drax Eletropaulo Gener Dom - Rep. Argentina U.K Brazil Chile CG CS CS LU CGBrian Tran100% (1)

- IFRS Vs German GAAP Similarities and Differences - Final2 PDFDocument87 pagesIFRS Vs German GAAP Similarities and Differences - Final2 PDFBrian TranNo ratings yet

- Exercise 1. Open GRETL and Capm - GDT Data File.: Coefficient Std. Error T-Ratio P-ValueDocument3 pagesExercise 1. Open GRETL and Capm - GDT Data File.: Coefficient Std. Error T-Ratio P-ValueBrian TranNo ratings yet

- (Harvard) Tesco Supply Chain Latest Version PDFDocument30 pages(Harvard) Tesco Supply Chain Latest Version PDFBrian TranNo ratings yet

- Model 1 - CAPMDocument2 pagesModel 1 - CAPMBrian TranNo ratings yet

- Reset Model - 3 Factor ModelDocument2 pagesReset Model - 3 Factor ModelBrian TranNo ratings yet

- Chapter 2 - Exercise 1Document2 pagesChapter 2 - Exercise 1Brian TranNo ratings yet

- Histogram of Daily Dax ReturnDocument1 pageHistogram of Daily Dax ReturnBrian TranNo ratings yet

- Bus 418 - Team 53Document88 pagesBus 418 - Team 53Brian TranNo ratings yet

- Exercise 1 - Chapter 4Document4 pagesExercise 1 - Chapter 4Brian TranNo ratings yet

- LOR Vicky For Reference 1 PDFDocument1 pageLOR Vicky For Reference 1 PDFBrian TranNo ratings yet

- Exercise 1 - Chapter 4Document4 pagesExercise 1 - Chapter 4Brian TranNo ratings yet

- How To Read A Book in Five Minutes PDFDocument3 pagesHow To Read A Book in Five Minutes PDFBrian TranNo ratings yet

- Bill Clinton''s Great SpeechDocument3 pagesBill Clinton''s Great SpeechBrian TranNo ratings yet