Efficient Object Tracking Using GIS Templates

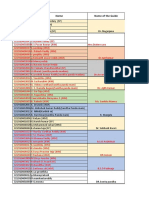

Efficient and Accurate Object Tracking for Mobile Mapping System Using GIS Object Templates

Lingeswaran S

Segmentation and Tracking of GIS Object for MMS

Abstract: Segmentation and tracking of objects in a 2D image sequence is an important and challenging field with wide uses, including change detection, object-based video post-production, content-based indexing and retrieval, and surveillance. This paper proposes a novel hybrid method to segment GIS objects in DMI sequences using pyramid decomposition. The proposed method combines an image enhancement strategy with a hypothesis for foreground-background identification, and applies the watershed transformation to achieve foreground-background segmentation more effectively. It also uses an image enhancement and denoising strategy based on a Gaussian filter to enhance the frame set, so that the object of interest can be tracked properly in the given spatiotemporal data set. A pyramid-decomposition-based strategy is used to track the GIS object across the sequential frames of the DMI sequence. Pixels having the highest gradient magnitude intensities (GMIs) correspond to watershed lines, which represent the region boundaries and are tracked in subsequent frames. The object template is updated with the frame set to increase tracking performance.

Keywords-- Digital Measurable Image (DMI), Segmentation, Object, Color Histogram Back-projection, Watershed, Denoising

I. INTRODUCTION

In the quest for low-cost, compact, and efficient tracking, Mobile Mapping System (MMS) methodology has long been of keen interest to automobile operators. The tracking of an object of interest (OOI) in Digital Measurable Images (DMI) has attracted many researchers. A DMI sequence is acquired by a mobile imaging platform with a much larger time gap between frames, so existing methods are not competent to achieve spatiotemporal segmentation of GIS objects in DMI sequences. Automatic GIS data extraction and update is still an unsolved puzzle and the bottleneck of the MMS [1], [10].

Object-based image sequence segmentation techniques can be grouped into different categories: supervised, unsupervised, or semi-supervised; region based or boundary based; high level or low level; local information based or global information based; and segmentation of mobile objects or static objects. The most arresting information in MMS concerns GIS objects, e.g. guideposts, central lines, and so on. Accurate and efficient segmentation of GIS objects in DMI sequences is a necessary preprocessing step for the MMS, and there is still a lack of credible work on this issue.

Several types of active contour have been utilized for video object segmentation and tracking. Vaswani et al. proposed that a particle filter combined with a level-set-based active contour can be used to segment moving and deformable objects in an image sequence [15]. Graphs or hypergraphs are used in some region-based segmentation methods, and SVM-based strategies have been widely used for hypergraph-based clustering. Some region-based methods employ a clustering operation, or region splitting and growing, in the feature space, which is usually formed by motion vectors, spatial features, or appearance features such as color, texture, and position.

The proposed approach uses the watershed algorithm for foreground and background segmentation. The watershed approach has been introduced in semi-automatic video object tracking and has shown improved results over previously proposed methods. A patch color histogram approach is used for exact tracking of the object across the frames of the video sequence. To take the local information of the video sequence into account, image enhancement including a Gaussian filter is employed to improve the output quality.

This paper is organized as follows: Section II presents the watershed transformation for image segmentation. Section III proposes adaptive local filters, the experimental result is shown in Section IV, and the conclusion is given in Section V.

II. WATERSHED TRANSFORMATION FOR IMAGE SEGMENTATION

Image segmentation is one of the most widely used tools for analysing and extracting the object of interest from images. The watershed transformation is a powerful segmentation tool that overcomes the problems of disconnected contours and false edges, so it can be used to reduce false edge definitions and to enhance tracking. It considers the gradient magnitude of an image, or a distance transformation of a binary image, as a topographic surface. Pixels having the highest gradient magnitude intensities (GMIs) correspond to watershed lines, which represent the region boundaries. Water placed on any pixel enclosed by a common watershed line flows downhill to a common local intensity minimum (LIM). Pixels draining to a common minimum form a catchment basin, which represents a segment.

In practice, however, this transform produces severe over-segmentation due to noise or local irregularities in the gradient image. To cope with noise and local irregularities, an image enhancement strategy is proposed in Section III. The enhancement strategy includes an adaptive Gaussian filter to improve the quality of the available video sequence. A previously defined set of markers can also be used to improve the watershed segmentation results.
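The drainage idea described above can be illustrated with a minimal sketch. It is not the implementation used in this paper: it assumes a tiny gradient-magnitude surface stored as plain Python lists, assumes the surface has no flat plateaus, and assigns ridge pixels to the basin of their steepest descent instead of marking them as watershed lines. The function name `catchment_basins` is hypothetical.

```python
def catchment_basins(surface):
    """Label each pixel by the local minimum it drains to (steepest descent)."""
    rows, cols = len(surface), len(surface[0])

    def lowest_neighbour(r, c):
        # Return the lowest 8-connected neighbour, or (r, c) itself
        # when no neighbour is strictly lower (a local minimum).
        best = (r, c)
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols:
                    if surface[nr][nc] < surface[best[0]][best[1]]:
                        best = (nr, nc)
        return best

    labels = [[0] * cols for _ in range(rows)]
    minima = {}  # local-minimum position -> basin label
    for r in range(rows):
        for c in range(cols):
            pos = (r, c)
            while True:  # follow the water downhill until it stops
                nxt = lowest_neighbour(*pos)
                if nxt == pos:
                    break
                pos = nxt
            labels[r][c] = minima.setdefault(pos, len(minima) + 1)
    return labels


# Two minima (values 0 and 1) separated by a ridge of high gradient (value 9).
surface = [
    [3, 2, 9, 2, 3],
    [2, 0, 9, 1, 2],
    [3, 2, 9, 2, 3],
]
basins = catchment_basins(surface)
for row in basins:
    print(row)
```

On a noisy surface every small dip becomes its own minimum, which is the over-segmentation problem noted above and the reason smoothing or markers are applied first.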

The frame set is denoised using the Gaussian filter to increase the range of prediction from the given OOI.

A. Hypothesis for Foreground-Background Identification and Auto-Marked Watershed Transformation

According to the characteristics of the GIS OOI (geographic-information-based object of interest), we propose the following hypotheses for foreground-background identification in the GIS OOI template:

1. Pixels whose gradient magnitude intensities lie near a region boundary belong to that region only, while in the object template, pixels near the bounding box (boundary) belong to the background.
2. The template object is always smaller than the frames of the given GIS video sequence.
3. The OOI lies in the center area of the template image.

Although the foreground-background segmentation result is coarse, most of the background can be eliminated, and the remaining foreground is sufficient to compute the object of interest from the given frames. The foreground-background segmentation improves the performance of the segmentation process and the quality of the segmented region. The region is thus obtained from the given frame set for processing and tracked. However, the tracked region may not coincide exactly with the OOI, so a patch color histogram strategy is used for exact segmentation of the tracked region.

Fig 2: Local Statistics Estimation

We assume that only background objects are present in the first image plane, so that spatial clustering of the first frame gives the distribution of regions that characterize the scene. Foreground distributions are created in later image planes as the algorithm proceeds. The model is initialized using the spatial segmentation of the first frame, and the parameters of the distributions are initialized using the local statistics calculation shown in Fig 2. If the number of pixels that belong to region i within the neighborhood is too small, the estimates of $\mu_{i,s,t}$ and $\sigma_{i,s,t}$ are not very reliable, and therefore no distribution is created for this region. In this way, outliers can be discarded and distribution statistics can be estimated more accurately. Also, in the case of occlusion, background pixel distributions are preserved.
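As a rough illustration of the local statistics rule, the sketch below estimates the statistics of one region inside a square neighborhood and returns nothing when too few pixels of that region are present. It assumes the two statistics are a mean and a variance, which is the usual choice for Gaussian models; the function name `local_statistics`, the window half-width, and the `min_pixels` threshold are hypothetical, since the text does not give them.

```python
def local_statistics(image, labels, region, centre, half_width, min_pixels):
    """Mean and variance of `image` over pixels of `region` near `centre`.

    Returns None when fewer than `min_pixels` pixels of the region fall
    inside the window, so that no distribution is created from an
    unreliable estimate.
    """
    r0, c0 = centre
    row_range = range(max(0, r0 - half_width), min(len(image), r0 + half_width + 1))
    col_range = range(max(0, c0 - half_width), min(len(image[0]), c0 + half_width + 1))
    values = [image[r][c] for r in row_range for c in col_range
              if labels[r][c] == region]
    if len(values) < min_pixels:
        return None
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, variance


# Toy frame: region 1 is a smooth background, region 3 is a single outlier pixel.
image = [[10, 12, 50],
         [14, 12, 52],
         [10, 14, 90]]
labels = [[1, 1, 2],
          [1, 1, 2],
          [1, 1, 3]]
background = local_statistics(image, labels, 1, (1, 1), 1, 3)
sparse = local_statistics(image, labels, 3, (1, 1), 1, 3)
print(background, sparse)
```

Region 3 has only one pixel in the window, so it is treated as an outlier and receives no distribution.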

Fig 1: Background and Foreground Segmentation Using Watershed Transformation

Based on the three hypotheses above, we propose an auto-marked watershed transform scheme for foreground-background identification:

1. Segment the GIS object template using the watershed transformation.
2. Abandon all segments adjacent to the boundary of the template.
3. The remaining segments form a mask indicating the exact OOI.

This makes the template more precise and effective for processing.
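The border-rejection part of this scheme can be sketched as follows. The sketch assumes a label map has already been produced by some watershed segmentation of the template (the labels below are made up for illustration) and that "adjacent to the boundary" means a segment owns at least one pixel on the template border. The function name `interior_mask` is hypothetical.

```python
def interior_mask(labels):
    """Keep only segments that never touch the border of the template."""
    rows, cols = len(labels), len(labels[0])
    border_labels = set()
    for r in range(rows):
        for c in range(cols):
            if r in (0, rows - 1) or c in (0, cols - 1):
                border_labels.add(labels[r][c])
    # True marks pixels kept as object of interest, False marks background.
    return [[labels[r][c] not in border_labels for c in range(cols)]
            for r in range(rows)]


# Segments 1 and 2 touch the template border; segments 3 and 4 are interior.
template_labels = [
    [1, 1, 1, 2, 2],
    [1, 3, 3, 4, 2],
    [1, 3, 3, 4, 2],
    [1, 1, 4, 4, 2],
    [1, 1, 2, 2, 2],
]
mask = interior_mask(template_labels)
kept = sum(cell for row in mask for cell in row)
print(kept)
```

Because the rule relies only on the hypothesis that the OOI sits in the center of the template, an object that reaches the template border would be discarded along with the background.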

B. Fast Patch-based Color Histogram Back-projection

In this paper we propose an approximate but quick method, the fast patch-based color histogram back-projection method. It is less time-consuming and more robust to object deformation.

The improvements compared to the former patch-based color histogram back-projection are:

1. Both the object template and the input image are resized to half of their initial size, because the color histogram is nearly invariant to scale change. The inputs can be resized even smaller for more speed.
2. The sliding step is set to one third of the object size in the corresponding direction rather than 1 pixel. Since most GIS object templates are much bigger than 3 pixels, a higher speed is achieved.
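The two speed-ups can be sketched together with the histogram intersection that the paper adopts as its comparison measure. This is a simplified illustration, not the authors' code: it works on single-channel intensities instead of color, uses plain lists, halves the images by dropping every second pixel, and the names `histogram`, `intersection`, `halve`, and `best_match` are hypothetical.

```python
def histogram(patch, bins=4, levels=256):
    """Normalised intensity histogram of a 2D patch (list of rows)."""
    counts = [0] * bins
    total = 0
    for row in patch:
        for value in row:
            counts[value * bins // levels] += 1
            total += 1
    return [n / total for n in counts]


def intersection(h1, h2):
    """Histogram intersection; 1.0 for identical normalised histograms."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def halve(img):
    """Resize to half size by keeping every second pixel in each direction."""
    return [row[::2] for row in img[::2]]


def best_match(image, template):
    """Slide the half-size template with a step of one third of its size."""
    image, template = halve(image), halve(template)
    th, tw = len(template), len(template[0])
    step_r, step_c = max(1, th // 3), max(1, tw // 3)
    target = histogram(template)
    best_score, best_pos = -1.0, None
    for r in range(0, len(image) - th + 1, step_r):
        for c in range(0, len(image[0]) - tw + 1, step_c):
            patch = [row[c:c + tw] for row in image[r:r + th]]
            score = intersection(target, histogram(patch))
            if score > best_score:
                # Report the position in full-resolution coordinates.
                best_score, best_pos = score, (r * 2, c * 2)
    return best_pos, best_score


# A bright 12x12 object in the lower-right corner of a dark 24x24 frame.
image = [[200 if r >= 12 and c >= 12 else 0 for c in range(24)] for r in range(24)]
template = [[200] * 12 for _ in range(12)]
position, score = best_match(image, template)
print(position, score)
```

With the coarse step the returned position is only approximate in general; here the object happens to lie on the search grid, so the match is exact.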

For comparing two histograms, we choose the histogram intersection, shown in (2), as the correspondence measurement for efficiency considerations.

    Control variables: K, W
    Initialization:
        Obtain initial segmentation x using ACA
        Calculate local statistics μ_{i,s,t}, σ_{i,s,t}
        Initialize Gaussian pdfs p_i using μ_{i,s,t}, σ_{i,s,t}
        Initialize foreground Gaussian pdf p_f to NULL
    while new data y_t do
        // Temporal Labeling
        if template > threshold then
            pixel = foreground pixel
            label = K + 1
        else if template < threshold then
            pixel = background pixel
            label = i
        end if
        // Update
        Calculate local statistics using x_t
        Update temporal model
        // Smoothing
        Smooth x_t by MRF until convergence
    end while

The cost of extracting the scale-invariant features is minimized by taking a cascade filtering approach, in which the more expensive operations are applied only at locations that pass an initial test. The following are the major stages of computation used to generate the set of image features:

1. Scale-space extrema detection: The first stage of computation searches over all scales and image locations. It is implemented efficiently by using a difference-of-Gaussian function to identify potential interest points that are invariant to scale and orientation.
2. Keypoint localization: At each candidate location, a detailed model is fit to determine location and scale. Keypoints are selected based on measures of their stability.
3. Orientation assignment: One or more orientations are assigned to each keypoint location based on local image gradient directions. All future operations are performed on image data that has been transformed relative to the assigned orientation, scale, and location for each feature, thereby providing invariance to these transformations.
4. Keypoint descriptor: The local image gradients are measured at the selected scale in the region around each keypoint. These are transformed into a representation that allows for significant levels of local shape distortion and change in illumination.

Fig 3: Scale Invariant Feature Location Identification

This approach has been named the Scale Invariant Feature Transform (SIFT), as it transforms image data into scale-invariant coordinates relative to local features.
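The first stage, scale-space extrema detection with a difference-of-Gaussian function, can be illustrated on a one-dimensional signal. This is a deliberately reduced sketch and not a SIFT implementation: a real detector works on 2D images with octaves and sub-sample refinement, and the names `gaussian_kernel`, `blur`, and `dog_extrema` as well as the chosen scales are assumptions made for illustration.

```python
import math


def gaussian_kernel(sigma):
    """Normalised 1D Gaussian kernel truncated at about three sigma."""
    radius = max(1, int(3 * sigma))
    weights = [math.exp(-(x * x) / (2 * sigma * sigma))
               for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur(signal, sigma):
    """Convolve with a Gaussian, replicating the border samples."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)
            acc += w * signal[j]
        out.append(acc)
    return out


def dog_extrema(signal, sigmas):
    """Positions and scales where the difference-of-Gaussian response is a
    strict extremum among its neighbours in position and in scale."""
    blurred = [blur(signal, s) for s in sigmas]
    dog = [[b - a for a, b in zip(fine, coarse)]
           for fine, coarse in zip(blurred, blurred[1:])]
    extrema = []
    for s in range(1, len(dog) - 1):
        for x in range(1, len(signal) - 1):
            centre = dog[s][x]
            neighbours = [dog[t][y]
                          for t in (s - 1, s, s + 1)
                          for y in (x - 1, x, x + 1)
                          if (t, y) != (s, x)]
            if centre > max(neighbours) or centre < min(neighbours):
                extrema.append((x, sigmas[s]))
    return extrema


# A box-shaped bump on a flat background, examined at geometrically spaced scales.
signal = [0.0] * 20 + [1.0] * 7 + [0.0] * 20
scales = [1.0, 1.6, 2.56, 4.1, 6.55]
candidates = dog_extrema(signal, scales)
print(candidates)
```

Only strict extrema are kept, so a perfectly flat signal yields no candidates; these candidates would then go through the localization and stability tests of stage 2.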

III. ENHANCEMENT FOR IMAGE EXTRACTION

The steps of our enhancement technique are as follows:

1-Unsharp masking step: This step enhances small structures and brings out hidden details in the image. It sharpens only the areas that have edges or a lot of detail. Unsharp masking is performed by generating a blurred copy of the original image using a Laplacian filter and subtracting it from the original image:

$$I(i,j) = I_0(i,j) - I_b(i,j)$$

where $I(i,j)$ is the unsharp-mask image, $I_0(i,j)$ is the original image, and $I_b(i,j)$ is the blurred copy.
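A minimal sketch of the unsharp-masking step is given below. It takes the mask as the original image minus its blurred copy, as the description states, and then adds a fraction k of that mask back to the original. The 3x3 mean blur is a stand-in chosen for brevity and is not the filter named in the text; the function names `box_blur` and `unsharp_sharpen` are hypothetical, and the output is left unclipped.

```python
def box_blur(img):
    """3x3 mean blur with replicated borders (stand-in for the blurred copy)."""
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            total = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr = min(max(r + dr, 0), rows - 1)
                    cc = min(max(c + dc, 0), cols - 1)
                    total += img[rr][cc]
            out[r][c] = total / 9.0
    return out


def unsharp_sharpen(img, k=0.5):
    """Return (mask, sharpened), where mask = original - blurred and
    sharpened = original + k * mask."""
    blurred = box_blur(img)
    mask = [[o - b for o, b in zip(row_o, row_b)]
            for row_o, row_b in zip(img, blurred)]
    sharpened = [[o + k * m for o, m in zip(row_o, row_m)]
                 for row_o, row_m in zip(img, mask)]
    return mask, sharpened


# A single bright pixel: fine detail is amplified, flat areas stay unchanged.
detail = [[0, 0, 0],
          [0, 9, 0],
          [0, 0, 0]]
mask, sharpened = unsharp_sharpen(detail, k=0.5)
flat_mask, flat_sharpened = unsharp_sharpen([[90] * 3 for _ in range(3)])
print(sharpened[1][1], flat_sharpened[1][1])
```

A k inside the 0.2 to 0.7 range mentioned in the text keeps the overshoot around edges moderate; values would normally be clipped back to the valid intensity range afterwards.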

verify the proposed spatiotemporal segmentation method and performed on computer with Intel Pentium 4 2.4GHz CPU and 1GB RAM.

The unsharp-mask image is then multiplied by a fractional value and added to the original image to obtain the image that will be contrast-enhanced. In this step the large features change little, while the small ones are enhanced. The result is a sharper, more detailed image:

$$G(i,j) = I_0(i,j) + k\,I(i,j)$$

where $G(i,j)$ is the output image and $k$ is a scaling constant. Typical values for $k$ vary between 0.2 and 0.7. Recently there was an attempt to perform the sharpening by local analysis of gradients.

2-Contrast enhancement step: For grey-scale images, a sliding 3x3 map window moves horizontally in steps from the left side to the right side of the original image, starting from the image's upper-left corner. A pixel value in the enhanced window depends only on its own value: if the pixel of interest exceeds a certain value (threshold), its value remains unchanged; if it is under the threshold, it is remapped. The process can be described with the mapping function O = M(i), where O and i are the new and old pixel values, respectively. M =

Fig 4: interface for extracting and matching the template

c i * i 1+ e

According to above mapping function the new value of corresponding pixel will be: O= 3

Fig 5: Interface detecting the template in frame accurately

where c is a contrast factor that determines the degree of contrast needed. After the map window reaches the right side, it returns to the left side and moves down one step. The process is repeated until the sliding window reaches the bottom-right corner of the image. For color images, before the sliding map window is applied, the pixels with the lowest values are mapped to 0 and those with the highest values to 255; the other pixel values are calculated from a weighted average of the RGB channels.

IV. EXPERIMENTAL RESULT

We tested the performance of the proposed technique on grey-scale and color real remote sensing images. The effectiveness of the proposed method is demonstrated by experimental results on DMI sequences. The experiments were implemented in MATLAB R2010a to verify the proposed spatiotemporal segmentation method and were performed on a computer with an Intel Pentium 4 2.4 GHz CPU and 1 GB RAM.

Fig 6: Interface tracking template in the DMI Sequence

REFERENCES

[1] Peng Li, Cheng Wang, Hanyun Wang, and Shengyong Hao, "Spatiotemporal Segmentation of GIS Object for Mobile Mapping System," IEEE Conference, 2011.
[2] J. G. Allen, R. Y. D. Xu, and J. S. Jin, "Object Tracking Using CamShift Algorithm and Multiple Quantized Feature Spaces," Pan-Sydney Area Workshop on Visual Information Processing, 2003.
[3] N. Apostoloff and A. Fitzgibbon, "Automatic Video Segmentation Using Spatiotemporal T-junctions," BMVC, 2006.
[4] S. W. Babacan and T. N. Pappas, "Spatiotemporal Algorithm for Joint Video Segmentation and Foreground Detection," EUSIPCO, 2006.
[5] E. Borenstein and J. Malik, "Shape Guided Object Segmentation," CVPR, 2006.
[6] G. Bradski and A. Kaehler, Learning OpenCV. O'Reilly Media Inc., Sebastopol, pp. 194-221, 2008.
[7] W. Brendel and S. Todorovic, "Video Object Segmentation by Tracking Regions," ICCV, 2009.
[8] P. L. Correia and F. Pereira, "Classification of Video Segmentation Application Scenarios," IEEE Trans. on Circuits and Systems for Video Technology, 14(5), pp. 735-741, 2004.
[9] R. Ahmed, G. C. Karmakar, and L. S. Dooley, "Probabilistic Spatio-Temporal Video Object Segmentation Incorporating Shape Information," ICASSP, 2005.
[10] S. Beucher, "The Watershed Transformation Applied to Image Segmentation," 10th Pfefferkorn Conf. on Signal and Image Processing in Microscopy and Microanalysis, 16-19 Sept. 1991, Cambridge, UK, Scanning Microscopy International, suppl. 6, pp. 299-314, 1992.
[11] J. Huang, S. Ravikumar, M. Mitra, W. J. Zhu, and R. Zabih, "Spatial Color Indexing and Applications," International Journal of Computer Vision, 35(3), pp. 245-268, 1999.
[12] Y. C. Huang, Q. S. Liu, and D. Metaxas, "Video Object Segmentation by Hypergraph Cut," CVPR, 2009.
[13] D. Lowe, "Distinctive Image Features from Scale-Invariant Keypoints," International Journal of Computer Vision, 60(2), pp. 91-110, 2004.
[14] S. J. Sun, D. R. Haynor, and Y. M. Kim, "Semiautomatic Video Object Segmentation Using VSnakes," IEEE Trans. on Circuits and Systems for Video Technology, 13(1), pp. 75-82, 2003.
[15] N. Vaswani, Y. Rathi, A. Yezzi, and A. Tannenbaum, "Deform PF-MT: Particle Filter with Mode Tracker for Tracking Non-Affine Contour Deformations," IEEE Trans. Image Processing, 19(4), pp. 841-857, 2009.
[16] C. Wang, T. Hassan, N. El-Sheimy, and M. Lavigne, "Automatic Road Vector Extraction for Mobile Mapping Systems," XXI Congress, ISPRS, 2008.
[17] T. T. Zin and H. Hama, "A Method Using Morphology and Histogram for Object-based Retrieval in Image and Video Databases," International Journal of Computer Science and Network Security, 7(9), pp. 123-129, 2007.
