8 Interpretation

Interpreting Effect Size Results

Cohen's Rules-of-Thumb

- standardized mean difference effect size: small = 0.20, medium = 0.50, large = 0.80
- correlation coefficient: small = 0.10, medium = 0.25, large = 0.40
- odds-ratio: small = 1.50, medium = 2.50, large = 4.30
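As a rough illustration, the thresholds above can be turned into a small lookup. The function name, the metric keys, the "below small" label, and the choice to treat each threshold as the lower edge of its band are all assumptions made for this sketch; the rules-of-thumb themselves only give three reference points per metric.

```python
# Cohen's rules-of-thumb as (small, medium, large) reference points.
COHEN_THRESHOLDS = {
    "smd": (0.20, 0.50, 0.80),
    "correlation": (0.10, 0.25, 0.40),
    "odds_ratio": (1.50, 2.50, 4.30),
}


def cohen_label(value, metric):
    """Return a rough size label for an effect size (hypothetical helper)."""
    small, medium, large = COHEN_THRESHOLDS[metric]
    if metric == "odds_ratio":
        # Assumption: an odds-ratio below 1 is inverted so that direction
        # is ignored, mirroring abs() for the other two metrics.
        magnitude = max(value, 1.0 / value)
    else:
        magnitude = abs(value)
    if magnitude >= large:
        return "large"
    if magnitude >= medium:
        return "medium"
    if magnitude >= small:
        return "small"
    return "below small"


print(cohen_label(0.45, "smd"))
print(cohen_label(1.42, "odds_ratio"))
```

A label produced this way says nothing about practical importance; it only places the value relative to the three reference points.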

Practical Meta-Analysis -- D. B. Wilson


Interpreting Effect Size Results

These rules-of-thumb do not take into account the context of the intervention. They do, however, correspond to the distribution of effects across meta-analyses found by Lipsey and Wilson (1993).


Interpreting Effect Size Results

Rules-of-thumb do not take into account the context of the intervention:

- A small effect may be highly meaningful for an intervention that requires few resources and imposes little on the participants.
- A small effect may be meaningful if the intervention is delivered to an entire population (for example, prevention programs for school children).
- Small effects may be more meaningful for serious and fairly intractable problems.

Cohen's rules-of-thumb do, however, correspond to the distribution of effects across meta-analyses found by Lipsey and Wilson (1993).


Translation of Effect Sizes

- Original metric
- Success rates (Rosenthal and Rubin's BESD)
  - Proportion of successes in the treatment and comparison groups, assuming an overall success rate of 50%
  - Can be adapted to alternative overall success rates
- Example using the sex offender data
  - Assuming a comparison group recidivism rate of 15%, the effect size of 0.45 for the cognitive-behavioral treatments translates into a recidivism rate for the treatment group of 7%
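The sketch below shows one way such a translation could be computed. The slides do not say which conversion was used, so both steps are assumptions: `besd` uses the common d-to-r conversion r = d / sqrt(d^2 + 4) (which assumes equal group sizes) and centers the two success rates on 50%, while `shifted_rate` adapts to another base rate by shifting the comparison rate by d on the standard normal scale. The function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def besd(d):
    """BESD success rates (treatment, comparison) for a standardized
    mean difference d, assuming an overall success rate of 50%."""
    r = d / sqrt(d * d + 4)  # d-to-r conversion, assumes equal group sizes
    return 0.5 + r / 2, 0.5 - r / 2


def shifted_rate(d, comparison_rate):
    """Treatment-group rate of an undesirable outcome (e.g. recidivism),
    given the comparison-group rate and a beneficial effect size d.
    Assumption: the outcome reflects a normally distributed latent
    variable, so the effect moves the rate by d z-units."""
    std_normal = NormalDist()
    z_comparison = std_normal.inv_cdf(comparison_rate)
    return std_normal.cdf(z_comparison - d)


treatment_success, comparison_success = besd(0.45)
print(f"BESD: {treatment_success:.2f} vs {comparison_success:.2f}")
print(f"Treatment recidivism: {shifted_rate(0.45, 0.15):.2f}")
```

Under these assumptions, an effect size of 0.45 with a 15% comparison rate gives a treatment rate that rounds to 7%, which agrees with the figure quoted for the sex offender data.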


Translation of Effect Sizes

An odds-ratio can be translated back into proportions. You need to fix either the treatment proportion or the control proportion:

$$p_{\text{treatment}} = \frac{OR \cdot p_{\text{control}}}{1 + OR \cdot p_{\text{control}} - p_{\text{control}}}$$

Example: an odds-ratio of 1.42 translates into a treatment success rate of 59% relative to a success rate of 50% for the control group.
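A minimal sketch of this conversion, with the control proportion held fixed. The function name is hypothetical; the formula is p_treatment = OR * p_control / (1 + OR * p_control - p_control), which follows from the definition of the odds-ratio.

```python
def treatment_proportion(odds_ratio, p_control):
    """Convert an odds-ratio into a treatment-group proportion,
    given a fixed control-group proportion (hypothetical helper)."""
    if not 0.0 < p_control < 1.0:
        raise ValueError("p_control must lie strictly between 0 and 1")
    if odds_ratio <= 0.0:
        raise ValueError("odds_ratio must be positive")
    return (odds_ratio * p_control) / (1.0 + odds_ratio * p_control - p_control)


# The example from the text: OR = 1.42 with a 50% control success rate.
print(f"{treatment_proportion(1.42, 0.50):.2f}")
```

For the example in the text this returns about 0.587, which rounds to the 59% quoted above.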


Methodological Adequacy of Research Base

Findings must be interpreted within the bounds of the methodological quality of the research base synthesized. Studies often cannot simply be grouped into good and bad studies. Some methodological weaknesses may bias the overall findings, others may merely add noise to the distribution.


Confounding of Study Features

- Relative comparisons of effect sizes across studies are inherently correlational!
- Important study features are often confounded, obscuring the interpretive meaning of observed differences.
- If the confounding is not severe and you have a sufficient number of studies, you can model out the influence of method features to clarify substantive differences.
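One way to "model out" a method feature is an inverse-variance weighted regression of effect sizes on both the substantive feature and the method feature. The sketch below uses entirely made-up data, built so that the true treatment-type difference is 0.30 and weak designs inflate effects by 0.25; the variable names and the fixed-effect weighting are assumptions of this illustration, not the author's procedure, and NumPy is assumed to be available.

```python
import numpy as np

# Hypothetical, made-up studies: treatment type is partly confounded
# with a weak research design.
treatment = np.array([1, 1, 1, 0, 0, 0], dtype=float)
weak_design = np.array([1, 1, 0, 0, 0, 1], dtype=float)
effect_size = 0.20 + 0.30 * treatment + 0.25 * weak_design
variance = np.array([0.04, 0.05, 0.03, 0.04, 0.06, 0.05])
weight = 1.0 / variance  # inverse-variance weights


def weighted_mean(values, weights):
    return np.sum(values * weights) / np.sum(weights)


# Naive comparison: difference in weighted mean effect sizes by treatment.
is_treated = treatment == 1
naive_diff = (weighted_mean(effect_size[is_treated], weight[is_treated])
              - weighted_mean(effect_size[~is_treated], weight[~is_treated]))

# Weighted least squares: scale rows by sqrt(weight), then solve.
X = np.column_stack([np.ones_like(treatment), treatment, weak_design])
root_w = np.sqrt(weight)
coef, *_ = np.linalg.lstsq(X * root_w[:, None], effect_size * root_w, rcond=None)

print(f"naive difference:    {naive_diff:.3f}")
print(f"adjusted difference: {coef[1]:.3f}")
```

In this toy data the naive difference overstates the treatment-type effect because more of the treated studies used the weak design, while the adjusted coefficient recovers the value built into the data. With real studies the adjustment is only as good as the coding of the method features, and it needs enough studies in each combination of features to separate them.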


Final Comments

- Meta-analysis is a replicable and defensible method of synthesizing findings across studies.
- Meta-analysis often points out gaps in the research literature, providing a solid foundation for the next generation of research on that topic.
- Meta-analysis illustrates the importance of replication.
- Meta-analysis facilitates generalization of the knowledge gained through individual evaluations.


Application of Meta-Analysis to Your Own Research Areas

Practical Meta-Analysis -- D. B. Wilson

You might also like

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- An Export-Marketing Model For Pharmaceutical Firms (The Case of Iran)Document7 pagesAn Export-Marketing Model For Pharmaceutical Firms (The Case of Iran)Maulik PatelNo ratings yet

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Markov RDocument12 pagesMarkov RMaulik PatelNo ratings yet

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- Human Resource Management: Topics To Be CoveredDocument22 pagesHuman Resource Management: Topics To Be CoveredMaulik PatelNo ratings yet

- Ch1Document24 pagesCh1Maulik PatelNo ratings yet

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- Business Environment Analysis (Porter's 5 Forces Model)Document9 pagesBusiness Environment Analysis (Porter's 5 Forces Model)FarihaNo ratings yet

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- (Applied Logic Series 15) Didier Dubois, Henri Prade, Erich Peter Klement (Auth.), Didier Dubois, Henri Prade, Erich Peter Klement (Eds.) - Fuzzy Sets, Logics and Reasoning About Knowledge-Springer NeDocument420 pages(Applied Logic Series 15) Didier Dubois, Henri Prade, Erich Peter Klement (Auth.), Didier Dubois, Henri Prade, Erich Peter Klement (Eds.) - Fuzzy Sets, Logics and Reasoning About Knowledge-Springer NeAdrian HagiuNo ratings yet

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Children's Grace of Mary Tutorial and Learning Center, Inc: New Carmen, Tacurong CityDocument4 pagesChildren's Grace of Mary Tutorial and Learning Center, Inc: New Carmen, Tacurong CityJa NeenNo ratings yet

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Chapter 3 SIP MethodologyDocument43 pagesChapter 3 SIP MethodologyMáxyne NalúalNo ratings yet

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- 39 - Riyadhah Wasyamsi Waduhaha RevDocument13 pages39 - Riyadhah Wasyamsi Waduhaha RevZulkarnain Agung100% (18)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Science Fiction FilmsDocument5 pagesScience Fiction Filmsapi-483055750No ratings yet

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- The Peppers, Cracklings, and Knots of Wool Cookbook The Global Migration of African CuisineDocument486 pagesThe Peppers, Cracklings, and Knots of Wool Cookbook The Global Migration of African Cuisinemikefcebu100% (1)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- What Enables Close Relationships?Document14 pagesWhat Enables Close Relationships?Clexandrea Dela Luz CorpuzNo ratings yet

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- MATH 7S eIIaDocument8 pagesMATH 7S eIIaELLA MAE DUBLASNo ratings yet

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- Weekly Learning Plan: Department of EducationDocument2 pagesWeekly Learning Plan: Department of EducationJim SulitNo ratings yet

- 211 N. Bacalso Avenue, Cebu City: Competencies in Elderly CareDocument2 pages211 N. Bacalso Avenue, Cebu City: Competencies in Elderly CareScsit College of NursingNo ratings yet

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Eapp Module 1Document6 pagesEapp Module 1Benson CornejaNo ratings yet

- Tutor InvoiceDocument13 pagesTutor InvoiceAbdullah NHNo ratings yet

- Chapter 10: Third Party Non-Signatories in English Arbitration LawDocument13 pagesChapter 10: Third Party Non-Signatories in English Arbitration LawBugMyNutsNo ratings yet

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (345)

- A Management and Leadership TheoriesDocument43 pagesA Management and Leadership TheoriesKrezielDulosEscobarNo ratings yet

- BEM - Mandatory CoursesDocument4 pagesBEM - Mandatory CoursesmohdrashdansaadNo ratings yet

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- CM PhysicalDocument14 pagesCM PhysicalLulu Nur HidayahNo ratings yet

- UntitledDocument8 pagesUntitledMara GanalNo ratings yet

- Cloze Tests 2Document8 pagesCloze Tests 2Tatjana StijepovicNo ratings yet

- CSL - Reflection Essay 1Document7 pagesCSL - Reflection Essay 1api-314849412No ratings yet

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

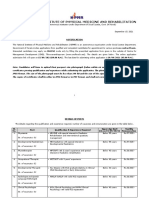

- NIPMR Notification v3Document3 pagesNIPMR Notification v3maneeshaNo ratings yet

- Fundamentals of Marketing Management: by Prabhat Ranjan Choudhury, Sr. Lecturer, B.J.B (A) College, BhubaneswarDocument53 pagesFundamentals of Marketing Management: by Prabhat Ranjan Choudhury, Sr. Lecturer, B.J.B (A) College, Bhubaneswarprabhatrc4235No ratings yet

- Definition Environmental Comfort in IdDocument43 pagesDefinition Environmental Comfort in Idharis hambaliNo ratings yet

- Complexity. Written Language Is Relatively More Complex Than Spoken Language. ..Document3 pagesComplexity. Written Language Is Relatively More Complex Than Spoken Language. ..Toddler Channel TVNo ratings yet

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- Network Scanning TechniquesDocument17 pagesNetwork Scanning TechniquesjangdiniNo ratings yet

- AVEVA Work Permit ManagerDocument2 pagesAVEVA Work Permit ManagerMohamed Refaat100% (1)

- The First Step Analysis: 1 Some Important DefinitionsDocument4 pagesThe First Step Analysis: 1 Some Important DefinitionsAdriana Neumann de OliveiraNo ratings yet

- Case Study On TQMDocument20 pagesCase Study On TQMshinyshani850% (1)

- Simple Linear Equations A Through HDocument20 pagesSimple Linear Equations A Through HFresgNo ratings yet

- Development Communication Theories MeansDocument13 pagesDevelopment Communication Theories MeansKendra NodaloNo ratings yet

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)