Professional Documents

Culture Documents

Distributed Operating Systems: 2 BITS Pilani Ms-Wilp 06 April 2013

Uploaded by

Arun ATOriginal Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Distributed Operating Systems: 2 BITS Pilani Ms-Wilp 06 April 2013

Uploaded by

Arun ATCopyright:

Available Formats

Distributed Operating Systems

EMC

2

BITS Pilani

MS-WILP

06 April 2013

Definition of a Distributed System (1)

A distributed system is:

A collection of independent

computers that appears to

its users as a single coherent

system

Definition of a Distributed System (2)

A distributed system organized as middleware

Note that the middleware layer extends over multiple machines

1.1

Transparency in a Distributed System

Different forms of transparency in a distributed system

Transparency Description

Access

Hide differences in data representation and how a

resource is accessed

Location Hide where a resource is located

Migration Hide that a resource may move to another location

Relocation

Hide that a resource may be moved to another

location while in use

Replication

Hide that a resource may be shared by several

competitive users

Concurrency

Hide that a resource may be shared by several

competitive users

Failure Hide the failure and recovery of a resource

Persistence

Hide whether a (software) resource is in memory or

on disk

Scalability Problems

Examples of scalability limitations

Concept Example

Centralized services A single server for all users

Centralized data A single on-line telephone book

Centralized algorithms Doing routing based on complete information

Scaling Techniques

1. Hiding communication latencies

2. Distribution

3. Replication

Scaling Techniques (1)

1.4

The difference between letting:

a) a server or

b) a client check forms as they are being filled

Scaling Techniques (2)

1.5

An example of dividing the DNS name space into zones

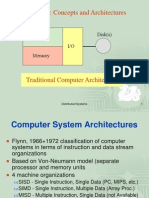

Hardware Concepts

1.6

Different basic organizations and memories in distributed computer

systems

Multiprocessors (1)

A bus-based multiprocessor

Multiprocessors (2)

a) A crossbar switch

b) An omega switching network

1.8

Homogeneous Multicomputer Systems

a) Grid

b) Hypercube

1-9

Software Concepts

An overview of

DOS (Distributed Operating Systems)

NOS (Network Operating Systems)

Middleware

System Description Main Goal

DOS

Tightly-coupled OS for multi-processors and

homogeneous multicomputers

Hide and manage

hardware resources

NOS

Loosely-coupled OS for heterogeneous

multicomputers (LAN and WAN)

Offer local services

to remote clients

Middleware

Additional layer atop of NOS implementing

general-purpose services

Provide distribution

transparency

Uniprocessor Operating Systems

Separating applications from OS code through

a microkernel

1.11

Multiprocessor Operating Systems (1)

A monitor to protect an integer against concurrent access

monitor Counter {

private:

int count = 0;

public:

int value() { return count;}

void incr () { count = count + 1;}

void decr() { count = count 1;}

}

Multiprocessor Operating Systems (2)

A monitor to protect an integer against concurrent access,but

blocking a process

monitor Counter {

private:

int count = 0;

int blocked_procs = 0;

condition unblocked;

public:

int value () { return count;}

void incr () {

if (blocked_procs == 0)

count = count + 1;

else

signal (unblocked);

}

void decr() {

if (count ==0) {

blocked_procs = blocked_procs + 1;

wait (unblocked);

blocked_procs = blocked_procs 1;

}

else

count = count 1;

}

}

Multicomputer Operating Systems (1)

General structure of a multicomputer operating

system

1.14

Multicomputer Operating Systems (2)

Alternatives for blocking and buffering in message passing

1.15

Multicomputer Operating Systems (3)

Relation between blocking, buffering, and reliable communications

Synchronization point Send buffer

Reliable comm.

guaranteed?

Block sender until buffer not full Yes Not necessary

Block sender until message sent No Not necessary

Block sender until message received No Necessary

Block sender until message delivered No Necessary

Distributed Shared Memory Systems (1)

a) Pages of address

space distributed

among four

machines

b) Situation after CPU 1

references page 10

c) Situation if page 10

is read only and

replication is used

Distributed Shared Memory Systems (2)

False sharing of a page between two independent processes

1.18

Network Operating System (1)

General structure of a network operating system

Network Operating System (2)

Two clients and a server in a network operating system

1-20

Network Operating System (3)

Different clients may mount the servers in different places

1.21

Positioning Middleware

General structure of a distributed system as middleware

1-22

Middleware and Openness

In an open middleware-based distributed system, the

protocols used by each middleware layer should be the same,

as well as the interfaces they offer to applications

1.23

Comparison between Systems

A comparison between multiprocessor OS, multicomputer

OS, network OS, and middleware based distributed systems

Item

Distributed OS

Network

OS

Middleware-

based OS

Multiproc. Multicomp.

Degree of transparency Very High High Low High

Same OS on all nodes Yes Yes No No

Number of copies of OS 1 N N N

Basis for communication

Shared

memory

Messages Files Model specific

Resource management

Global,

central

Global,

distributed

Per node Per node

Scalability No Moderately Yes Varies

Openness Closed Closed Open Open

Multitiered Architectures (1)

Alternative client-server organizations (a) (e)

1-29

Multitiered Architectures (2)

An example of a server acting as a client

1-30

Modern Architectures

An example of horizontal distribution of a Web service

1-31

Issues in DOS

Synchronization within one system is hard

enough

Semaphores

Messages

Monitors

Synchronization among processes in a

distributed system is much harder

Outline

We begin with clocks and see how the semantic

requirement for real-time made Lamports logical clocks

possible

Given global clocks, virtual or real, we consider mutual

exclusion

Centralized algorithms keep information in one place effectively

becomes a monitor

Distribution handles mutual exclusion in parallel at the cost of

O(N) messages per CS use

Token algorithm reduced messages under some circumstances but

introduced heartbeat overhead

Each has strengths and weaknesses

Outline

Many distributed algorithms require a coordinator

Creating the need to select, monitor, and replace the

coordinator as required

Election algorithms provide a way to select a

coordinator

Bully algorithm

Ring algorithm

Transactions provide a high level abstraction with

significant power for organizing, expressing, and

implementing distributed algorithms

Mutual Exclusion

Locking

Deadlock

Outline

Transactions are useful because they can be aborted

Concurrency control issues were considered

Locking

Optimistic

Deadlock

Detection

Prevention

Yet again

Distributed systems have the same problems

Only more so

Network Time Protocol

A requests time of B at its own

T

1

B receives request at its T

2

,

records

B responds at its T

3

, sending

values of T

2

and T

3

A receives response at its T

4

Assume transit time is

approximately the same both

ways

Assume that B is the time

server that A wants to

synchronize to

A knows (T

4

T

1

) from its own

clock

B reports T

3

and T

2

in response

to NTP request

A computes total transit time

of

( ) ( )

2 3 1 4

T T T T

Network Time Protocol

One-way transit time is approximately total,

i.e.,

Bs clock at T

4

reads approximately

( ) ( )

2

2 3 1 4

T T T T

( ) ( ) ( ) ( )

2 2

3 2 1 4 2 3 1 4

3

T T T T T T T T

T

+ +

=

+

Network Time Protocol

Bs clock at T

4

reads approximately (from previous slide)

Thus, difference between B and A clocks at T

4

is

( ) ( )

2

2 3 1 4

T T T T

( ) ( ) ( ) ( )

2 2

3 2 1 4 2 3 1 4

3

T T T T T T T T

T

+ +

=

+

What is stratum in NTP?

In the world of NTP, stratum levels define the distance from the reference clock. A reference clock is a stratum-0

device that is assumed to be accurate and has lttle or no delay associated with it. The reference clock typically

synchronizes to the correct time (UTC) using GPS transmissions, CDMA technology or other time signals such as Irig-

B, WWV, DCF77, etc. Stratum-0 servers cannot be used on the network, instead, they are directly connected to

computers which then operate as stratum-1 servers.

A server that is directly connected to a stratum-0 device is called a stratum-1 server. This includes all time servers

with built-in stratum-0 devices, such as the EndRun Time Servers, and also those with direct links to stratum-0

devices such as over an RS-232 connection or via an IRIG-B time code. The basic definition of a stratum-1 time

server is that it be directly linked (not over a network path) to a reliable source of UTC time such as GPS, WWV, or

CDMA transmissions. A stratum-1 time server acts as a primary network time standard.

A stratum-2 server is connected to the stratum-1 server OVER A NETWORK PATH. Thus, a stratum-2 server gets its

time via NTP packet requests from a stratum-1 server. A stratum-3 server gets its time via NTP packet requests from

a stratum-2 server, and so on.

As you progress through different strata there are network delays involved that reduce the accuracy of the NTP

server in relation to UTC. Timestamps generated by an EndRun Stratum 1 Time Server will typically have 10

microseconds accuracy to UTC. A stratum-2 server will have anywhere from 1/2 to 100 ms accuracy to UTC and each

subsequent stratum layer (stratum-3, etc.) will add an additional 1/2-100 ms of inaccuracy.

For another explanation of NTP Strata read this wikipedia article on NTP Servers Clock Strata.

NTP Stratum

Yellow arrows indicate a direct

connection;

Red arrows indicate a network

connection.

Ref: http://en.wikipedia.org/wiki/NTP_server#Clock_strata

NTP uses a hierarchical, semi-layered

system of levels of clock sources. Each

level of this hierarchy is termed

a stratum and is assigned a layer

number starting with 0 (zero) at the top.

The stratum level defines its distance

from the reference clock and exists to

prevent cyclical dependencies in the

hierarchy.

It is important to note that the

stratum is not an indication of quality or

reliability, it is common to find stratum

3 time sources that are higher quality

than other stratum 2 time

Network Time Protocol

Servers organized as strata

Stratum 0 server adjusts itself to WWV directly

Stratum 1 adjusts self to Stratum 0 servers

Etc.

Within a stratum, servers adjust with each other

If T

A

is slow, add c to clock rate

To speed it up gradually

If T

A

is fast, subtract c from clock rate

To slow it down gradually

WWV is the call sign of the United States National Institute of Standards and Technology's (NIST)

HF ("shortwave") radio station in Fort Collins, Colorado. WWV continuously transmits official U.S.

Government frequency and time signals on 2.5, 5, 10, 15 and 20 MHz. These carrier frequencies

and time signals are controlled by local atomic clocks traceable to NIST's primary standard in

Boulder, Colorado by GPS common view observations and other time transfer methods.

Ref: http://en.wikipedia.org/wiki/WWV_(radio_station)

Berkeley Algorithm

Berkeley Algorithm

Time Daemon polls other systems

Computes average time

Tells other machines how to adjust their clocks

Problem

Time not a reliable method of

synchronization

Users mess up clocks

(and forget to set their time zones!)

Unpredictable delays in Internet

Relativistic issues

If A and B are far apart physically, and

two events T

A

and T

B

are very close in time, then

which comes first? how do you know?

Example

At midnight PDT(Pacific

Daylight Time), bank posts

interest to your account

based on current balance.

At 3:00 AM EDT (Eastern

Daylight Zone), you

withdraw some cash.

Does interest get paid on

the cash you just

withdrew?

Depends upon which event

came first!

What if transactions made

on different replicas?

Solution: Logical Clocks

Not clocks at all

Just monotonic counters

Lamports temporal logic

Definition: a b means

a occurs before b

I.e., all processes agree

that a happens, then

later b happens

E.g., send(message)

receive(message)

Logical Clocks

Every machine maintains its own logical

clock C

Transmit C with every message

If C

received

> C

own

, then adjust C

own

forward to

C

received

+ 1

Result: Anything that is known to follow

something else in logical time has larger

logical clock value.

Logical Clocks

Clock Synchronization

When each machine has its own clock, an event

that occurred after another event may

nevertheless be assigned an earlier time.

Physical Clocks : Clock Synchronization

Maximum resolution desired for global time keeping

determines the maximum difference which can be

tolerated between synchronized clocks

The time keeping of a clock, its tick rate should satisfy:

The worst possible divergence between two clocks is

thus:

So the maximum time t between clock synchronization

operations that can ensure is:

o

o

o

+ < < 1 1

t

C

t A = o 2

o

2

= At

Physical Clocks : Clock Synchronization

Christians Algorithm

Periodically poll the machine with access to the

reference time source

Estimate round-trip delay with a time stamp

Estimate interrupt processing time

figure 3-6, page 129 Tanenbaum

Take a series of measurements to estimate the time it

takes for a timestamp to make it from the reference

machine to the synchronization target

This allows the synchronization to converge within

with a certain degree of confidence

Probabilistic algorithm and guarantee

Physical Clocks : Clock Synchronization

Wide availability of hardware and software to keep clocks

synchronized within a few milliseconds across the Internet

is a recent development

Network Time Protocol (NTP) discussed in papers by David Mill(s)

GPS receiver in the local network synchronizes other machines

What if all have GPS receivers

Increasing deployment of distributed system algorithms

depending on synchronized clocks

Supply and demand constantly in flux

Physical Clocks - 1

Computation of the mean solar day.

Physical Clocks - 2

TAI seconds are of constant length, unlike solar

seconds. Leap seconds are introduced when

necessary to keep in phase with the sun.

Clock Synchronization Algorithms

The relation between clock time and UTC when clocks tick at different rates.

Clock Synchronization

Physical Clocks

Physical clock example: counter + holding register +

oscillating quartz crystal

The counter is decremented at each oscillation

Counter interrupts when it reaches zero

Reloads from the holding register

Interrupt = clock tick (often 60 times/second)

Software clock: counts interrupts

This value represents number of seconds since some

predetermined time (Jan 1, 1970 for UNIX systems;

beginning of the Gregorian calendar for Microsoft)

Can be converted to normal clock times

Clock Skew

In a distributed system each computer has its

own clock

Each crystal will oscillate at slightly different

rate.

Over time, the software clock values on the

different computers are no longer the same.

Clock Skew

Clock skew(offset): the difference between the

times on two different clocks

Clock drift : the difference between a clock

and actual time

Ordinary quartz clocks drift by ~ 1sec in 11-12

days. (10

-6

secs/sec)

High precision quartz clocks drift rate is

somewhat better

Various Ways of Measuring Time*

The sun

Mean solar second gradually getting longer as earths

rotation slows.

International Atomic Time (TAI)

Atomic clocks are based on transitions of the cesium atom

Atomic second = value of solar second at some fixed time

(no longer accurate)

Universal Coordinated Time (UTC)

Based on TAI seconds, but more accurately reflects sun

time (inserts leap seconds to synchronize atomic second

with solar second)

Getting the Correct (UTC) Time*

WWV radio station or similar stations in other

countries (accurate to +/- 10 msec)

UTC services provided by earth satellites

(accurate to .5 msec)

GPS (Global Positioning System) (accurate to

20-35 nanoseconds)

Clock Synchronization Algorithms*

In a distributed system one machine may have

a WWV receiver and some technique is used

to keep all the other machines in synch with

this value.

Or, no machine has access to an external time

source and some technique is used to keep all

machines synchronized with each other, if not

with real time.

Clock Synchronization Algorithms

Network Time Protocol (NTP):

Objective: to keep all clocks in a system synchronized to UTC

time (1-50 msec accuracy) not so good in WAN

Uses a hierarchy of passive time servers

The Berkeley Algorithm:

Objective: to keep all clocks in a system synchronized to each

other (internal synchronization)

Uses active time servers that poll machines periodically

Reference broadcast synchronization (RBS)

Objective: to keep all clocks in a wireless system synchronized

to each other

Three Philosophies of Clock

Synchronization

Try to keep all clocks synchronized to real

time as closely as possible

Try to keep all clocks synchronized to each

other, even if they vary somewhat from UTC

time

Try to synchronize enough so that interacting

processes can agree upon an event order.

Refer to these clocks as logical clocks

6.2 Logical Clocks

Observation: if two processes (running on

separate processors) do not interact, it

doesnt matter if their clocks are not

synchronized.

Observation: When processes do interact,

they are usually interested in event order,

instead of exact event time.

Conclusion: Logical clocks are sufficient for

many applications

Formalization

The distributed system consists of n processes,

p

1

, p

2

, p

n

(e.g, a MPI group)

Each p

i

executes on a separate processor

No shared memory

Each p

i

has a state s

i

Process execution: a sequence of events

Changes to the local state

Message Send or Receive

Two Versions

Lamports logical clocks: synchronizes logical

clocks

Can be used to determine an absolute ordering

among a set of events although the order doesnt

necessarily reflect causal relations between

events.

Vector clocks: can capture the causal

relationships between events.

Lamports Logical Time

Lamport defined a happens-before relation

between events in a process.

"Events" are defined by the application. The

granularity may be as coarse as a procedure or

as fine-grained as a single instruction.

Happened Before Relation (a b)

a b: (page 244-245)

in the same [sequential] process,

send, receive in different processes, (messages)

transitivity: if a b and b c, then a c

If a b, then a and b are causally related; i.e.,

event a potentially has a causal effect on

event b.

Concurrent Events

Happens-before defines a partial order of

events in a distributed system.

Some events cant be placed in the order

a and b are concurrent (a || b) if

!(a b) and !(b a).

If a and b arent connected by the happened-

before relation, theres no way one could

affect the other.

Logical Clocks

Needed: method to assign a timestamp to event a

(call it C(a)), even in the absence of a global clock

The method must guarantee that the clocks have

certain properties, in order to reflect the definition of

happens-before.

Define a clock (event counter), C

i

, at each process

(processor) P

i

.

When an event a occurs, its timestamp ts(a) = C(a),

the local clock value at the time the event takes

place.

Correctness Conditions

If a and b are in the same process, and

a b then C

(a) < C

(b)

If a is the event of sending a message from Pi,

and b is the event of receiving the message by

Pj, then C

i

(a) < C

j

(b).

The value of C must be increasing (time

doesnt go backward).

Corollary: any clock corrections must be made by

adding a positive number to a time.

Implementation Rules

Between any two successive events a & b in Pi,

increment the local clock (C

i

= C

i

+ 1)

thus C

i

(b) = C

i

(a) + 1

When a message m is sent from P

i

, set its time-

stamp ts

m

to C

i

, the time of the send event after

following previous step.

When the message is received at P

j

the local

time must be greater than ts

m

. The rule is (C

j

=

max{C

j

, ts

m

} + 1).

Lamports Logical Clocks (2)

Figure 6-9. (a) Three processes, each with its own clock. The

clocks run at different rates.

Event a: P1 sends m1 to

P2 at t = 6,

Event b: P2 receives m1

at t = 16.

If C(a) is the time m1

was sent, and C(b) is the

time m1 is received, do

C(a) and C(b) satisfy the

correctness conditions ?

Lamports Logical Clocks (3)

Figure 6-9. (b) Lamports algorithm corrects the clocks.

Event c: P3

sends m3 to

P2 at t = 60

Event d: P2

receives m3

at t = 56

Do C(c) and

C(d) satisfy

the

conditions?

Application Layer

Application sends message m

i

Adjust local clock,

Timestamp m

i

Middleware sends

message

Network Layer

Message m

i

is received

Adjust local clock

Deliver m

i

to application

Middleware layer

Figure 6-10. The positioning of Lamports logical clocks in distributed systems

Handling clock management as a middleware operation

Figure 5.3 (Advanced Operating Systems,Singhal and Shivaratri)

How Lamports logical clocks advance

e11

e12 e13 e14

e15

e16

e17

e21 e22 e23

e24

e25

P1

P2

Which events are causally related?

Which events are concurrent?

eij represents event j

on processor i

A Total Ordering Rule

(does not guarantee causality)

A total ordering of events can be obtained if

we ensure that no two events happen at the

same time (have the same timestamp).

Why? So all processors can agree on an

unambiguous order.

How? Attach process number to low-order

end of time, separated by decimal point; e.g.,

event at time 40 at process P1 is 40.1,event at

time 40 at process P2 is 40.2

Figure 5.3 - Singhal and Shivaratri

e11

e12 e13 e14

e15

e16

e17

e21 e22 e23

e24

e25

P1

P2

What is the total ordering of the events in these

two processes?

Example: Total Order Multicast

Consider a banking database, replicated

across several sites.

Queries are processed at the geographically

closest replica

We need to be able to guarantee that DB

updates are seen in the same order

everywhere

Totally Ordered Multicast

Update 1: Process 1 at Site A adds $100 to an account,

(initial value = $1000)

Update 2: Process 2 at Site B increments the account by

1%

Without synchronization,

its possible that

replica 1 = $1111,

replica 2 = $1110

Message 1: add $100.00

Message 2: increment account by 1%

The replica that sees the messages in the

order m1, m2 will have a final balance of

$1111

The replica that sees the messages in the

order m2, m1 will have a final balance of

$1110

The Problem

Site 1 has final account balance of $1,111 after

both transactions complete and Site 2 has final

balance of $1,100.

Which is right? Either, from the standpoint of

consistency.

Problem: lack of consistency.

Both values should be the same

Solution: make sure both sites see/process all

messages in the same order.

Implementing Total Order

Assumptions:

Updates are multicast to all sites, including

(conceptually) the sender

All messages from a single sender arrive in the

order in which they were sent

No messages are lost

Messages are time-stamped with Lamport clock

values.

Implementation

When a process receives a message, put it in a

local message queue, ordered by timestamp.

Multicast an acknowledgement to all sites

Each ack has a timestamp larger than the

timestamp on the message it acknowledges

The message queue at each site will

eventually be in the same order

Implementation

Deliver a message to the application only when the

following conditions are true:

The message is at the head of the queue

The message has been acknowledged by all other

receivers. This guarantees that no update messages with

earlier timestamps are still in transit.

Acknowledgements are deleted when the message

they acknowledge is processed.

Since all queues have the same order, all sites

process the messages in the same order.

Causality

Causally related events:

Event a may causally affect event b if a b

Events a and b are causally related if either

a b or b a.

If neither of the above relations hold, then there is

no causal relation between a & b. We say that a

|| b (a and b are concurrent)

Vector Clock Rationale

Lamport clocks limitation:

If (ab) then C(a) < C(b) but

If C(a) < C(b) then we only know that either (ab) or

(a || b), i.e., b a

In other words, you cannot look at the clock

values of events on two different processors and

decide which one happens before.

Lamport clocks do not capture causality

Lamports Logical Clocks (3)

Figure 6-12.

Suppose we add a message to the

scenario in Fig. 6.12(b).

Tsnd(m1) < Tsnd(m3).

(6) < (32)

Does this mean

send(m1) send(m3)?

But

Tsnd(m1) < Tsnd(m2).

(6) < (20)

Does this mean

send(m1) send(m2)?

m

2

m

3

Figure 5.4

Time

P1

P2

P3

e11

.

e21

e12

e22

e31

e32

e33

(1)

(2)

(1) (3)

(1)

(2) (3)

C(e11) < C(e22) and C(e11) < C(e32) but while e11 e22, we cannot say e11

e32 since there is no causal path connecting them. So, with Lamport clocks we

can guarantee that if C(a) < C(b) then

b a , but by looking at the clock values alone we cannot say whether or

not the events are causally related.

Space

Vector Clocks How They Work

Each processor keeps a vector of values, instead of a

single value.

VC

i

is the clock at process i; it has a component for

each process in the system.

VC

i

[i] corresponds to P

i

s local time.

VC

i

[j] represents P

i

s knowledge of the time at P

j

(the # of events that P

i

knows have occurred at Pj

Each processor knows its own time exactly, and

updates the values of other processors clocks based

on timestamps received in messages.

Implementation Rules

IR1: Increment VC

i

[i] before each new event.

IR2: When process i sends a message m it sets ms

(vector) timestamp to VC

i

(after incrementing VC

i

[i])

IR3: When a process receives a message it does a

component-by-component comparison of the

message timestamp to its local time and picks the

maximum of the two corresponding components.

Adjust local components accordingly.

Then deliver the message to the application.

Review

Physical clocks: hard to keep synchronized

Logical clocks: can provide some notion of relative

event occurrence

Lamports logical time

happened-before relation defines causal relations

logical clocks dont capture causality

total ordering relation

use in establishing totally ordered multicast

Vector clocks

Unlike Lamport clocks, vector clocks capture causality

Have a component for each process in the system

Figure 5.5. Singhal and Shivaratri

(1, 0 , 0)

(2, 0, 0) (4, 5, 2)

e11

e12

e14

(0, 1, 0) (2, 2, 0) (2, 3, 1) (2, 5, 2)

(0, 0, 1)

(0, 0, 2)

e21

e22 e23

e24

e31

e32

P1

P2

P3

(2,4,2)

e25

Vector clock values. In a 3- process system, VC(Pi) = vc1, vc2, vc3

e13

(3, 0, 0)

e33

(0, 0, 3)

Establishing Causal Order

When Pi sends a message m to Pj, Pj knows

How many events occurred at Pi before m was sent

How many relevant events occurred at other sites before m

was sent (relevant = happened-before)

In Figure 5.5, VC(e

24

) = (2, 4, 2). Two events in P1 and

two events in P3 happened before e

24

.

Even though P1 and P3 may have executed other events, they

dont have a causal effect on e

24

.

Happened Before/Causally Related Events -

Vector Clock Definition

a b iff ts(a)

<

ts(b)

(a happens before b iff the timestamp of a is less

than the timestamp of b)

Events a and b are causally related if

ts(a)

<

ts(b)

or

ts(b)

<

ts(a)

Otherwise, we say the events are concurrent.

Any pair of events that satisfy the vector clock

definition of happens-before will also satisfy the

Lamport definition, and vice-versa.

Comparing Vector Timestamps

Less than: ts(a) <

ts(b)

iff at least one

component of ts(a) is strictly less than the

corresponding component of ts(b) and all other

components of ts(a) are either less than or

equal to the corresponding component in ts(b).

(3,3,5) (3,4,5), (3, 3, 3) (3, 3, 3),

(3,3,5) (3,2,4), (3, 3 ,5) | | (4,2,5).

Figure 5.4

Time

P1

P2

P3

e21

e12

e22

e31

e32

e33

(1, 0, 0)

(2, 0, 0)

(0, 1, 0) (2, 2, 0)

(0, 0,1)

(0, 0, 2) (0, 0, 3)

ts(e11) = (1, 0, 0) and ts(e32) = (0, 0, 2), which shows that the two

events are concurrent.

ts(e11) = (1, 0, 0) and ts(e22) = (2, 2, 0), which shows that

e11 e22

e11

Causal Ordering of Messages

An Application of Vector Clocks

Premise: Deliver a message only if messages

that causally precede it have already been

received

i.e., if send(m

1

) send(m

2

), then it should be true

that receive(m

1

) receive(m

2

) at each site.

If messages are not related (send(m

1

) ||

send(m

2

)), delivery order is not of interest.

Compare to Total Order

Totally ordered multicast (TOM) is stronger

(more inclusive) than causal ordering (COM).

TOM orders all messages, not just those that are

causally related.

Weaker COM is often what is needed.

Enforcing Causal Communication

Clocks are adjusted only when sending or

receiving messages; i.e, these are the only

events of interest.

Send m: P

i

increments VC

i

[i] by 1 and applies

timestamp, ts(m).

Receive m: Pi compares VC

i

to ts(m); set VC

i

[k]

to max{VC

i

[k] , ts(m)[k]} for each k, k i.

Message Delivery Conditions

Suppose: P

J

receives message m from P

i

Middleware delivers m to the application iff

ts(m)[i] = VC

j

[i] + 1

all previous messages from P

i

have been delivered

ts(m)[k] VC

i

[k] for all k i

P

J

has received all messages that P

i

had seen before it sent

message m.

In other words, if a message m is received

from P

i,

you should also have received every

message that P

i

received before it sent m; e.g.,

if m is sent by P

1

and ts(m) is (3, 4, 0) and you are

P

3

, you should already have received exactly 2

messages from P

1

and at least 4 from P

2

if m is sent by P

2

and ts(m) is (4, 5, 1, 3) and if you

are P

3

and VC

3

is (3, 3, 4, 3) then you need to wait

for a fourth message from P

2

and at least one

more message from P

1

.

P0

P1

P2

(1, 0, 0)

P1 received message m from P0 before sending

message m* to P2; P2 must wait for delivery of m

before receiving m*

(Increment own clock only on message send)

Before sending or receiving any messages, ones own

clock is (0, 0, 0)

VC2

(1, 0, 0) (1, 1, 0)

(1, 1, 0)

VC

1

m

m*

VC

0

VC

2

Figure 6-13. Enforcing Causal Communication

VC

0

(1, 1, 0)

(0, 0, 0)

VC2

Christian's Algorithm

Getting the current time from a time server.

One more introduction

Global Time & Global States of Distributed

Systems

Asynchronous distributed systems consist of several processes

without common memory which communicate (solely) via

messages with unpredictable transmission delays

Global time & global state are hard to realize in distributed

systems

Rate of event occurrence is very high

Event execution times are very small

We can only approximate the global view

Simulate synchronous distributed system on a given asynchronous systems

Simulate a global time Clocks (Physical and Logical)

Simulate a global state Global Snapshots

Simulate Synchronous

Distributed Systems

Synchronizers [Awerbuch 85]

Simulate clock pulses in such a way that a message is only generated

at a clock pulse and will be received before the next pulse

Drawback

Very high message overhead

The Concept of Time in Distributed Systems

A standard time is a set of instants with a temporal precedence order <

satisfying certain conditions [Van Benthem 83]:

Irreflexivity

Transitivity

Linearity

Eternity (x-y: x<y)

Density (x,y: x<y -z: x<z<y)

Transitivity and Irreflexivity imply asymmetry

A linearly ordered structure of time is not always adequate for distributed

systems

Captures dependence, not independence of distributed activities

Time as a partial order

A partially ordered system of vectors forming a lattice structure is a natural

representation of time in a distributed system

Global time in distributed systems

An accurate notion of global time is difficult to achieve in

distributed systems.

Uniform notion of time is necessary for correct operation of many

applications (mission critical distributed control, online

games/entertainment, financial apps, smart environments etc.)

Clocks in a distributed system drift

Relative to each other

Relative to a real world clock

Determination of this real world clock itself may be an issue

Clock synchronization is needed to simulate global time

Physical Clocks vs. Logical clocks

Physical clocks are logical clocks that must not deviate from the real-time

by more than a certain amount.

We often derive causality of events from loosely synchronized clocks

Physical Clock Synchronization

Physical Clocks

How do we measure real time?

17th century - Mechanical clocks based on

astronomical measurements

Solar Day - Transit of the sun

Solar Seconds - Solar Day/(3600*24)

Problem (1940) - Rotation of the earth varies (gets

slower)

Mean solar second - average over many days

Atomic Clocks

1948

Counting transitions of a crystal (Cesium 133, quartz) used

as atomic clock

crystal oscillates at well known frequency

TAI - International Atomic Time

9192631779 transitions = 1 mean solar second in 1948

UTC (Universal Coordinated Time)

From time to time, we skip a solar second to stay in phase with the

sun (30+ times since 1958)

UTC is broadcast by several sources (satellites)

From Distributed Systems (cs.nju.edu.cn/distribute-systems/lecture-notes/

124

How Clocks Work in Computers

Quartz

crystal

Counter

Holding

register

Each crystal oscillation

decrements the counter by 1

When counter gets 0, its

value reloaded from the

holding register

CPU

When counter is 0, an

interrupt is generated, which

is call a clock tick

At each clock tick, an interrupt

service procedure add 1 to time

stored in memory

Memory

Oscillation at a well-

defined frequency

Accuracy of Computer Clocks

Modern timer chips have a relative error of

1/100,000 - 0.86 seconds a day

To maintain synchronized clocks

Can use UTC source (time server) to obtain

current notion of time

Use solutions without UTC.

Cristians (Time Server) Algorithm

Uses a time server to synchronize clocks

Time server keeps the reference time (say UTC)

A client asks the time server for time, the server responds with its

current time, and the client uses the received value to set its clock

But network round-trip time introduces errors

Let RTT = response-received-time request-sent-time (measurable at client),

If we know (a) min = minimum client-server one-way transmission time and

(b) that the server timestamped the message at the last possible instant

before sending it back

Then, the actual time could be between [T+min,T+RTT min]

Cristians Algorithm

Client sets its clock to halfway between T+min and T+RTT min

i.e., at T+RTT/2

Expected (i.e., average) skew in client clock time = (RTT/2 min)

Can increase clock value, should never decrease it.

Can adjust speed of clock too (either up or down is ok)

Multiple requests to increase accuracy

For unusually long RTTs, repeat the time request

For non-uniform RTTs

Drop values beyond threshold; Use averages (or weighted

average)

Berkeley UNIX algorithm

One daemon without UTC

Periodically, this daemon polls and asks all the

machines for their time

The machines respond.

The daemon computes an average time and

then broadcasts this average time.

Decentralized Averaging Algorithm

Each machine has a daemon without UTC

Periodically, at fixed agreed-upon times, each

machine broadcasts its local time.

Each of them calculates the average time by

averaging all the received local times.

Distributed Time Service

Software service that provides precise, fault-tolerant clock

synchronization for systems in local area networks (LANs) and

wide area networks (WANs).

determine duration, perform event sequencing and

scheduling.

Each machine is either a time server or a clerk

software components on a group of cooperating systems;

client obtains time from DTS(distributed time system) entity

DTS entities

DTS server

DTS clerk that obtain time from DTS servers on other hosts

Clock Synchronization in DCE

(Distributed Computing Environment)

DCEs time model is actually in an interval

I.e. time in DCE is actually an interval

Comparing 2 times may yield 3 answers

t1 < t2, t2 < t1, not determined

Periodically a clerk obtains time-intervals from several servers ,e.g. all

the time servers on its LAN

Based on their answers, it computes a new time and gradually

converges to it.

Compute the intersection where the intervals overlap. Clerks then adjust

the system clocks of their client systems to the midpoint of the computed

intersection.

When clerks receive a time interval that does not intersect with the

majority, the clerks declare the non-intersecting value to be faulty.

Clerks ignore faulty values when computing new times, thereby ensuring

that defective server clocks do not affect clients.

Network Time Protocol (NTP)

Most widely used physical clock synchronization protocol on

the Internet (http://www.ntp.org)

Currently used: NTP V3 and V4

10-20 million NTP servers and clients in the Internet

Claimed Accuracy (Varies)

milliseconds on WANs, submilliseconds on LANs, submicroseconds

using a precision timesource

Nanosecond NTP in progress

NTP Design

Hierarchical tree of time servers.

The primary server at the root

synchronizes with the UTC.

The next level contains

secondary servers, which act as a

backup to the primary server.

At the lowest level is the

synchronization subnet which

has the clients.

Logical Clock Synchronization

Causal Relations

Distributed application results in a set of distributed

events

Induces a partial order causal precedence relation

Knowledge of this causal precedence relation is

useful in reasoning about and analyzing the

properties of distributed computations

Liveness and fairness in mutual exclusion

Consistency in replicated databases

Distributed debugging, checkpointing

Logical Clocks

Used to determine causality in distributed systems

Time is represented by non-negative integers

Event structures represent distributed computation

(in an abstract way)

A process can be viewed as consisting of a sequence of

events, where an event is an atomic transition of the local

state which happens in no time

Process Actions can be modeled using the 3 types of

events

Send Message

Receive Message

Internal (change of state)

Logical Clocks

A logical Clock C is some abstract mechanism which assigns to

any event eeE the value C(e) of some time domain T such

that certain conditions are met

C:ET :: T is a partially ordered set : e<eC(e)<C(e) holds

Consequences of the clock condition [Morgan 85]:

Events occurring at a particular process are totally ordered by their

local sequence of occurrence

If an event e occurs before event e at some single process, then event e is

assigned a logical time earlier than the logical time assigned to event e

For any message sent from one process to another, the logical time of

the send event is always earlier than the logical time of the receive

event

Each receive event has a corresponding send event

Future can not influence the past (causality relation)

Event Ordering

Lamport defined the happens before (=>)

relation

If a and b are events in the same process, and a

occurs before b, then a => b.

If a is the event of a message being sent by one

process and b is the event of the message

being received by another process, then a => b.

If X =>Y and Y=>Z then X => Z.

If a => b then time (a) => time (b)

P1

P2

P3

e21

e11

e31

e22

e13

global time

e1

2

e23

e32

e14

Program order: e13 < e14

Send-Receive: e23 < e12

Transitivity: e21 < e32

Processor Order: e precedes e in the same process

Send-Receive: e is a send and e is the corresponding receive

Transitivity: exists e s.t. e < e and e< e

Example:

Event Ordering- the example

Causal Ordering

Happens Before also called causal ordering

Possible to draw a causality relation between 2

events if

They happen in the same process

There is a chain of messages between them

Happens Before notion is not straightforward in

distributed systems

No guarantees of synchronized clocks

Communication latency

Implementation of Logical Clocks

Requires

Data structures local to every process to represent logical time and

a protocol to update the data structures to ensure the consistency condition.

Each process Pi maintains data structures that allow it the following two

capabilities:

A local logical clock, denoted by LC_i , that helps process Pi measure its own

progress.

A logical global clock, denoted by GCi , that is a representation of process Pi s

local view of the logical global time. Typically, lci is a part of gci

The protocol ensures that a processs logical clock, and thus its view of the

global time, is managed consistently.

The protocol consists of the following two rules:

R1: This rule governs how the local logical clock is updated by a process when it

executes an event.

R2: This rule governs how a process updates its global logical clock to update its

view of the global time and global progress.

Types of Logical Clocks

Systems of logical clocks differ in their

representation of logical time and also in the

protocol to update the logical clocks.

3 kinds of logical clocks

Scalar

Vector

Matrix

Scalar Logical Clocks - Lamport

Proposed by Lamport in 1978 as an attempt to totally

order events in a distributed system.

Time domain is the set of non-negative integers.

The logical local clock of a process pi and its local

view of the global time are squashed into one integer

variable Ci .

Monotonically increasing counter

No relation with real clock

Each process keeps its own logical clock used to

timestamp events

Consistency with Scalar Clocks

To guarantee the clock condition, local clocks must

obey a simple protocol:

When executing an internal event or a send event at

process P

i

the clock C

i

ticks

C

i

+= d (d>0)

When P

i

sends a message m, it piggybacks a logical

timestamp t which equals the time of the send event

When executing a receive event at P

i

where a message

with timestamp t is received, the clock is advanced

C

i

= max(C

i

,t)+d (d>0)

Results in a partial ordering of events.

Total Ordering

Extending partial order to total order

Global timestamps:

(Ta, Pa) where Ta is the local timestamp and Pa is

the process id.

(Ta,Pa) < (Tb,Pb) iff

(Ta < Tb) or ( (Ta = Tb) and (Pa < Pb))

Total order is consistent with partial order.

time Proc_id

Independence

Two events e,e are mutually independent (i.e. e||e) if

~(e<e).~(e<e)

Two events are independent if they have the same timestamp

Events which are causally independent may get the same or different

timestamps

By looking at the timestamps of events it is not possible to

assert that some event could not influence some other event

If C(e)<C(e) then ~(e<e) however, it is not possible to decide whether

e<e or e||e

C is an order homomorphism which preserves < but it does not

preserves negations (i.e. obliterates a lot of structure by mapping E

into a linear order)

Problems with Total Ordering

A linearly ordered structure of time is not always adequate for

distributed systems

captures dependence of events

loses independence of events - artificially enforces an ordering for

events that need not be ordered loses information

Mapping partial ordered events onto a linearly ordered set of integers is

losing information

Events which may happen simultaneously may get different timestamps

as if they happen in some definite order.

A partially ordered system of vectors forming a lattice

structure is a natural representation of time in a distributed

system

Vector Times

The system of vector clocks was developed independently by Fidge,

Mattern and Schmuck.

To construct a mechanism by which each process gets an

optimal approximation of global time

In the system of vector clocks, the time domain is represented by a set of

n-dimensional non-negative integer vectors.

Each process has a clock C

i

consisting of a vector of length n, where n is

the total number of processes vt[1..n], where vt[j ] is the local logical

clock of Pj and describes the logical time progress at process Pj .

A process P

i

ticks by incrementing its own component of its clock

C

i

[i] += 1

The timestamp C(e) of an event e is the clock value after ticking

Each message gets a piggybacked timestamp consisting of the vector of the

local clock

The process gets some knowledge about the other process time approximation

C

i

=sup(C

i

,t):: sup(u,v)=w : w[i]=max(u[i],v[i]), i

Vector Clocks example

An Example of vector clocks

From A. Kshemkalyani and M. Singhal (Distributed Computing)

Figure 3.2: Evolution of vector time.

Vector Times (cont)

Because of the transitive nature of the scheme, a process may

receive time updates about clocks in non-neighboring process

Since process P

i

can advance the i

th

component of global time,

it always has the most accurate knowledge of its local time

At any instant of real time i,j: C

i

[i]> C

j

[i]

For two time vectors u,v

usv iff i: u[i]sv[i]

u<v iff usv . u=v

u||v iff ~(u<v) .~(v<u) :: || is not transitive

Structure of the Vector Time

For any n>0, (N

n

,s) is a lattice

The set of possible time vectors of an event set E is a sublattice of (N

n

,s)

For an event set E, the lattice of consistent cuts and the lattice of possible

time vectors are isomorphic

e,eeE:e<e iff C(e)<C(e) . e||e iff iff C(e)||C(e)

In order to determine if two events e,e are causally related or

not, just take their timestamps C(e) and C(e)

if C(e)<C(e) v C(e)<C(e), then the events are causally related

Otherwise, they are causally independent

Matrix Time

Vector time contains information about latest direct

dependencies

What does Pi know about Pk

Also contains info about latest direct dependencies

of those dependencies

What does Pi know about what Pk knows about Pj

Message and computation overheads are high

Powerful and useful for applications like distributed

garbage collection

Time Manager Operations

Logical Clocks

C.adjust(L,T)

adjust the local time displayed by clock C to T (can be gradually,

immediate, per clock sync period)

C.read

returns the current value of clock C

Timers

TP.set(T) - reset the timer to timeout in T units

Messages

receive(m,l); broadcast(m); forward(m,l)

Simulate A Global State

The notions of global time and global state are closely related

A process can (without freezing the whole computation)

compute the best possible approximation of a global state

[Chandy & Lamport 85]

A global state that could have occurred

No process in the system can decide whether the state did really

occur

Guarantee stable properties (i.e. once they become true, they remain

true)

P2

P1

P3

Time

e21

e31

e11

e22

Event Diagram

e23 e24 e25

e12 e13

e32 e33 e34

Poset Diagram

P2

P1

P3

Time

e21

e31

e11

e22 e23 e24 e25

e12 e13

e32 e33 e34

Equivalent Event Diagram

Rubber Band Transformation

P2

P1

P3

Time

e31

e11

e21

e12

P4

e41 e42

e22

cut

Poset Diagram

e21

e41

e31

e21

e22

e12

e42

Past

Chandy-Lamport Distributed Snapshot

Algorithm

Assumes FIFO communication in channels

Uses a control message, called a marker to separate messages in the

channels.

After a site has recorded its snapshot, it sends a marker, along all of its

outgoing channels before sending out any more messages.

The marker separates the messages in the channel into those to be included in

the snapshot from those not to be recorded in the snapshot.

A process must record its snapshot no later than when it receives a marker

on any of its incoming channels.

The algorithm terminates after each process has received a marker on all

of its incoming channels.

All the local snapshots get disseminated to all other processes and all the

processes can determine the global state.

Chandy-Lamport Distributed Snapshot

Algorithm

Marker receiving rule for Process Pi

If (Pi has not yet recorded its state) it

records its process state now

records the state of c as the empty set

turns on recording of messages arriving over other channels

else

Pi records the state of c as the set of messages received over c

since it saved its state

Marker sending rule for Process Pi

After Pi has recorded its state,for each outgoing channel c:

Pi sends one marker message over c

(before it sends any other message over c)

Computing Global States without FIFO

Assumption

Algorithm

All process agree on some future virtual time s or a set of virtual time

instants s

1

,s

n

which are mutually concurrent and did not yet occur

A process takes its local snapshot at virtual time s

After time s the local snapshots are collected to construct a global

snapshot

P

i

ticks and then fixes its next time s=C

i

+(0,,0,1,0,,0) to be the

common snapshot time

P

i

broadcasts s

P

i

blocks waiting for all the acknowledgements

P

i

ticks again (setting C

i

=s), takes its snapshot and broadcast a dummy

message (i.e. force everybody else to advance their clocks to a value > s)

Each process takes its snapshot and sends it to P

i

when its local clock

becomes > s

Computing Global States without FIFO

Assumption (cont)

Inventing a n+1 virtual process whose clock is managed by P

i

P

i

can use its clock and because the virtual clock C

n+1

ticks only when P

i

initiates a new run of snapshot :

The first n component of the vector can be omitted

The first broadcast phase is unnecessary

Counter modulo 2

2 states

White (before snapshot)

Red (after snapshot)

Every message is red or white, indicating if it was send before or after the

snapshot

Each process (which is initially white) becomes red as soon as it receives a red

message for the first time and starts a virtual broadcast algorithm to ensure

that all processes will eventually become red

Computing Global States without FIFO

Assumption (cont)

Virtual broadcast

Dummy red messages to all processes

Flood the network by using a protocol where a process sends dummy red

messages to all its neighbors

Messages in transit

White messages received by red process

Target process receives the white message and sends a copy to the initiator

Termination

Distributed termination detection algorithm [Mattern 87]

Deficiency counting method

Each process has a counter which counts messages send messages received.

Thus, it is possible to determine the number of messages still in transit

Ordering and Cuts

A distributed system is .

A collection of sequential processes

p

1

, p

2

, p

3

..p

n

Network capable of implementing communication

channels between pairs of processes for message

exchange

Channels are reliable but may deliver messages out of

order

Every process can communicate with every other

process(may not be directly)

There is no reasoning based on global clocks

All kinds of synchronization must be done by message

passing

Distributed Computation

A distributed computation is a single execution of a distributed

program by a collection of processes. Each sequential process

generates a sequence of events that are either internal events, or

communication events

The local history of process p

i

during a computation is a (possibly

infinite) sequence of events h

i

= e

i

1

, e

i

2

....

A partial local history of a process is a prefix of the local history h

i

n

= e

i

1

, e

i

2

e

i

n

The global history of a computation is the set H = U

i=1

n

h

i

Global history

It is just the collection of events that have

occurred in the system

It does not give us any idea about the relative

times between the events

As there is no notion of global time, events

can only be ordered based on a notion of

cause and effect

Happened Before Relation ()

If a and b are events in the same process then

a b

If a is the sending of a message m by a process

and b is the corresponding receive event then

a b

Finally if a b b c then a c

If a b and b a then a and b are

concurrent

defines a partial order on the set H

Space Time Diagram

Graphical representation of a distributed system

If there is a path between two events then they are

related

Else they are concurrent

Is this notion of ordering really important?

Some idea of ordering of events is fundamental to reason

about how a system works

Global State Detection is a fundamental problem in

distributed computing

Enables detecting stable properties of a system

How do we get a snapshot of the system when there is no

notion of global time or shared memory

How do we ensure that that the state collected is consistent

Use this problem to illustrate the importance of ordering

This will also give us the notion of what is a consistent

global state

Global States and Cuts

Global State is a n-tuple of local states one for

each process

Cut is a subset of the global history that

contains an initial prefix of each local state

Therefore every cut is a natural global state

Intuitively a cut partitions the space time

diagram along the time axis

A Cut is identified by the last event of each

process that is part of the cut

Example of a Cut

Introduction to consistency

Consider this solution for the common problem of

deadlock detection

System has 3 processes p1, p2, p3

An external process p0 sends a message to each process

(Active Monitoring)

Each process on getting this message reports its local

state

Note that this global state thus collected at p

0

is a cut

p

0

uses this information to create a wait for graph

Consider the space time diagram below and

the cut C

2

1

2

3

Cycle formed

So what went wrong?

p

0

detected a cycle when there was no

deadlock

State recorded contained a message received

by p3 which p1 never sent

The system could never be in such a state and

hence the state p0 saw was inconsistent

So we need to make sure that application see

consistent states

So what is a consistent global state?

A cut C is consistent if for all events e and e

Intuitively if an event is part of a cut then all

events that happened before it must also be

part of the cut

A consistent cut defines a consistent global

state

Notion of ordering is needed after all !!

( ) ( ) C e e e C e e . e ' '

Passive Deadlock Detection

Lets change our approach to deadlock

detection

p

0

now monitors the system passively

Each process sends p

0

a message when an event

occurs

What global state does p

0

now see

Basically hell breaks lose

FIFO Channels

Communication channels need not preserve message

order

Therefore p

0

can construct any permutation of events as

a global state

Some of these may not even be valid (events of the same

process may not be in order)

Implement FIFO channels using sequence numbers

Now we know that we p

0

sees constructs valid runs

But the issue of consistency still remains

) ' ( ) ( ) ' ( ) ( m deliver m deliver m send m send j j i i

Ok lets now fix consistency

Assume a global real-time clock and bound of on the

message delay

Dont panic we shall get rid of this assumption soon

RC(e): Time when event e occurs

Each process reports to p

0

the global timestamp along

with the event

Delivery Rule at p

0

: At time t, deliver all received

messages upto t- in increasing timestamp order

So do we have a consistent state now?

Clock Condition

( ) ( ) C e e e C e e . e ' '

e is observed before e iff RC(e) < RC(e)

Recall our definition of consistency

Therefore state is consistent iff

This is the clock condition

For timestamps from a global clock this is

obviously true

Can we satisfy it for asynchronous systems?

) ' ( ) ( ' e RC e RC e e <

Logical Clocks

Turns out that the clock condition can be

satisfied in asynchronous systems as well

is defined such that Clock Condition holds if

A and b are events of the same process and a

comes before b then RC(a)<RC(b)

If a is the send of an event and b is corrsponding

receive then RC(a)<RC(b)

Lamports Clocks

Local variable LC in every process

LC: Kind of a logical clock

Simple counter that assigns timestamps to

events

Every send event is time stamped

LC modification rules

LC(e

i

) = LC + 1 if e

i

is an internal event or send

max{LC,TS(m)} + 1 if e

i

is receive(m)

Example of Logical Clocks

p1

p2

p3

1

1

1

2

2 4

4

3

5

Observations on Lamports Clocks

Lamport says

a b then C(a) < C(b)

However

C(a) < C(b) then a b ??

Solution: Vector Clocks

Clock (C) is a vector of length n

C[i] : Own logical time

C*j+ : Best guess about js logical time

Vector Clocks Example

1,0,0 2,0,0 3,4,1

0,1,0

2,2,0

0,0,1

2,3,1

2,4,1

Lets formalise the idea

C[i] is incremented between successive local

events

On receiving message timestamped message

m

Can be shown that both sides of relation holds

]) [ ], [ max( : ] [ , k t k C k C k m =

So are Lamport clocks useful only for finding

global state?

Definitely not!!!

Mutual Exclusion using Lamport clocks

Only one process can use resource at a time

Requests are granted in the order in which they

are made

If every process releases the resource then every

request is eventually granted

Assumptions

FIFO reliable channels

Direct connection between processes

Algorithm

p1

p2

p3

1,1

(1,1)

(1,1)(1,2)

1,2

(1,2)

(1,2)

(1,2)

(1,1)(1,2)

2 3

2

2

r4 r3

r3

r3

p1 has higher time stamp messages from p2 and p3. Its message is at top of queue. So

p1 enters

p1 sends release and now p2 enters

Algorithm Summary

Requesting CS

Send timestamped REQUEST

Place request on request queue

On receiving REQUEST

Put request on queue

Send back timestamped REPLY

Enter CS if

Received larger timestamped REPLY

Request at the head of queue

Releasing CS

Send RELEASE message

On receiving RELEASE remove request

Global State Revisited

Earlier in the talk we had discussed the

problem where a process actively tries to get

the global state

Solution to the problem that calculates only

consistent global states

Model

Process only knows about its internal events

Messages it sends and receives

Requirements

Each process records it own local state

The state of the communication channels is

recorded

All these small parts form a consistent whole

State Detection must run along with

underlying computation

FIFO reliable channels

Global States

What exactly is channel state

Let c be a channel from p to q

p records its local state(Lp) and so does q(Lq)

P has some sends in Lp whose receives may

not be in Lq

It is these sent messages that are the state of

q

Intuitively messages in transit when local

states collected

Distributed Mutual Exclusion

Agenda for Spring 2013

Lamport's Mutual Exclusion Algorithm

Ricart & Agrawala Algorithm

Token Ring Mutex Algorithm

Bully Algorithm

Ring Algorithm

Suzuki-Kasami's broadcast Algorithm

Raymond's Tree Based Algorithm

Mutual Exclusion?

A condition in which there is a set of

processes, only one of which is able to access

a given resource or perform a given function

at any time

Mutual Exclusion

Distributed components still need to coordinate their

actions, including but not limited to access to shared

data

Mutual exclusion to some limited set of operations and data

is thus required

Consider several approaches and compare and contrast

their advantages and disadvantages

Centralized Algorithm

The single central process is essentially a monitor

Central server becomes a semaphore server

Three messages per use: request, grant, release

Centralized performance constraint and point of failure

Mutual Exclusion: Distributed Algorithm Factors

Functional Requirements

1) Freedom from deadlock

2) Freedom from starvation

3) Fairness

4) Fault tolerance

Performance Evaluation

Number of messages

Latency

Semaphore system Throughput

Synchronization is always overhead and must be

accounted for as a cost

Mutual Exclusion: Distributed Algorithm Factors

Performance should be evaluated under a variety of loads

Cover a reasonable range of operating conditions

We care about several types of performance

Best case

Worst case

Average case

Different aspects of performance are important for different reason

and in different contexts

Centralized Systems

Mutual exclusion via:

Test & set

Semaphores

Messages

Monitors

Distributed Mutual Exclusion

Assume there is agreement on how a resource

is identified

Pass identifier with requests

Create an algorithm to allow a process to

obtain exclusive access to a resource

Distributed Mutual Exclusion

Centralized Algorithm

Token Ring Algorithm

Distributed Algorithm

Decentralized Algorithm

Centralized algorithm

Mimic single processor system

One process elected as coordinator

P

C

request(R)

grant(R)

1. Request resource

2. Wait for response

3. Receive grant

4. access resource

5. Release resource

release(R)

Centralized algorithm

If another process claimed resource:

Coordinator does not reply until release

Maintain queue

Service requests in FIFO order

P

0

C

request(R)

grant(R)

release(R)

P

1

P

2

request(R)

Queue

P

1

request(R)

P

2

grant(R)

Centralized algorithm

Benefits

Fair

All requests processed in order

Easy to implement, understand, verify

Problems

Process cannot distinguish being blocked from a

dead coordinator

Centralized server can be a bottleneck

Token Ring algorithm

Assume known group of processes

Some ordering can be imposed on group

Construct logical ring in software

Process communicates with neighbor

P

0

P

1

P

2

P

3

P

4

P

5

Token Ring algorithm

Initialization

Process 0 gets token for resource R

Token circulates around ring

From P

i

to P

(i+1)

mod N

When process acquires token

Checks to see if it needs to enter critical section

If no, send token to neighbor

If yes, access resource

Hold token until done

P

0

P

1

P

2

P

3

P

4

P

5

token(R)

Token Ring algorithm

Only one process at a time has token

Mutual exclusion guaranteed

Order well-defined

Starvation cannot occur

If token is lost (e.g. process died)

It will have to be regenerated

Does not guarantee FIFO order

Lamports Mutual Exclusion

Each process maintains request queue

Contains mutual exclusion requests

Requesting critical section:

Process P

i

sends request(i, T

i

) to all nodes

Places request on its own queue

When a process P

j

receives

a request, it returns a timestamped ack

Lamports Mutual Exclusion

Entering critical section (accessing resource):

P

i

received a message (ack or release) from every

other process with a timestamp larger than T

i

P

i

s request has the earliest timestamp in its queue

Difference from Ricart-Agrawala:

Everyone responds always - no hold-back

Process decides to go based on whether its request is

the earliest in its queue

Lamports Mutual Exclusion

Releasing critical section:

Remove request from its own queue

Send a timestamped release message

When a process receives a release message

Removes request for that process from its queue

This may cause its own entry have the earliest timestamp

in the queue, enabling it to access the critical section

Mutual Exclusion: Lamports Algorithm

Every site keeps a request queue sorted by logical time stamp

Uses Lamports logical clocks to impose a total global order on events

associated with synchronization

Algorithm assumes ordered message delivery between every pair of

communicating sites

Messages sent from site S

j

in a particular order arrive at S

j

in the same order

Note: Since messages arriving at a given site come from many sources the

delivery order of all messages can easily differ from site to site

Lamports Algorithm : Request Resource r

Thus, each site has a request queue containing resource use requests and replies

Note that the requests and replies for any given pair of sites must be in the same

order in queues at both sites

Because of message order delivery assumption

i

j i i

eue request_qu queuse local on the request the places

and S to j) , REQUEST(ts sends S Site 1)

r

R e

i resource using processes all of set the is R -

i

j

i

i i j

eue request_qu on

request the places and S site to message REPLY stamped time a

returns it S site from ) , REQUEST(ts receives S site When 2) i

Lamports Algorithm

Entering CS for Resource r

Site S

i

enters the CS protecting the resource when

This ensures that no message from any site with a smaller timestamp could ever arrive

This ensures that no other site will enter the CS

Recall that requests to all potential users of the resource and replies from then go

into request queues of all processes including the sender of the message

i) , (ts n larger tha stamp time

a with sites other all from message a received has S Site L1)

i

i

i

i

eue request_qu queue the of

head at the is S site from request The L2)

Lamports Algorithm

Releasing the CS

The site holding the resource is releasing it, call that site

Note that the request for resource r had to be at the head of the

request_queue at the site holding the resource or it would never

have entered the CS

Note that the request may or may not have been at the head of

the request_queue at the receiving site

i

S

r

R e

j

i i

S to message i) RELEASE(r, a sends and

eue request_qu of front the from request its removes S Site 1)

j i

j

eue request_qu from r) , REQUEST(ts

removes it message i) RELEASE(r, a receives S site When 2)

Lamport ME Example

request (i5)

queue(j10)

reply(12)

queue(i5)

P

i

in

critical

section

queue(j10, i5)

request (j10)

release(i5)

queue(j10)