Introduction to Optimization

(Part 1)

Daniel Kirschen

Economic dispatch problem

Several generating units serving the load

What share of the load should each

generating unit produce?

Consider the limits of the generating units

Ignore the limits of the network

[Figure: generating units A, B and C supplying the load L]

2011 D. Kirschen and University of Washington 2

Characteristics of the generating units

Thermal generating units

Consider the running costs only

Input / Output curve

Fuel vs. electric power

Fuel consumption measured by its energy content

Upper and lower limit on output of the generating unit

[Figure: blocks B, T and G turning fuel (input) into electric power (output); input/output curve with fuel input in J/h against output in MW, between P^min and P^max]

Cost Curve

Multiply fuel input by fuel cost

No-load cost

Cost of keeping the unit running if it could produce zero MW

[Figure: cost curve, with cost in $/h against output in MW between P^min and P^max; the no-load cost is the value of the curve at zero output]

Incremental Cost Curve

Incremental cost curve

Derivative of the cost curve

In $/MWh

Cost of the next MWh

[Figure: ΔFuelCost/ΔPower vs. power; cost curve in $/h and incremental cost curve in $/MWh, both plotted against output in MW]

Mathematical formulation

Objective function:

$$C = C_A(P_A) + C_B(P_B) + C_C(P_C)$$

Constraints

Load / Generation balance:

$$L = P_A + P_B + P_C$$

Unit constraints:

$$P_A^{\min} \le P_A \le P_A^{\max}$$

$$P_B^{\min} \le P_B \le P_B^{\max}$$

$$P_C^{\min} \le P_C \le P_C^{\max}$$

[Figure: generating units A, B and C supplying the load L]

This is an optimization problem
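The formulation above can be sketched in a few lines of Python. This is only an illustration: the `Unit` class, the quadratic shape of the cost curves and every number below are assumptions, not values taken from the slides.

```python
from dataclasses import dataclass


@dataclass
class Unit:
    """One generating unit with a hypothetical quadratic cost curve."""
    p_min: float    # lower output limit, MW
    p_max: float    # upper output limit, MW
    no_load: float  # no-load cost, $/h
    b: float        # linear cost coefficient, $/MWh
    c: float        # quadratic cost coefficient, $/(MW^2 h)

    def cost(self, p: float) -> float:
        """Running cost in $/h at output p (MW)."""
        return self.no_load + self.b * p + self.c * p * p


def is_feasible(units, dispatch, load, tol=1e-6):
    """Check the load/generation balance and every unit constraint."""
    balanced = abs(sum(dispatch) - load) <= tol
    within = all(u.p_min <= p <= u.p_max for u, p in zip(units, dispatch))
    return balanced and within


def total_cost(units, dispatch):
    """Objective function: sum of the individual running costs."""
    return sum(u.cost(p) for u, p in zip(units, dispatch))


# Illustrative numbers only; they do not come from the slides.
units = [
    Unit(p_min=50, p_max=200, no_load=100.0, b=10.0, c=0.010),  # unit A
    Unit(p_min=50, p_max=300, no_load=150.0, b=9.0, c=0.020),   # unit B
    Unit(p_min=20, p_max=100, no_load=50.0, b=12.0, c=0.005),   # unit C
]
load = 400.0
dispatch = [150.0, 200.0, 50.0]
feasible = is_feasible(units, dispatch, load)
cost = total_cost(units, dispatch)
```

Any dispatch that passes `is_feasible` is a candidate solution; the optimization problem is to find the one with the lowest `total_cost`.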

Introduction to Optimization

"An engineer can do with one dollar what any bungler can do with two"

A. M. Wellington (1847-1895)

Objective

Most engineering activities have an objective:

Achieve the best possible design

Achieve the most economical operating conditions

This objective is usually quantifiable

Examples:

minimize cost of building a transformer

minimize cost of supplying power

minimize losses in a power system

maximize profit from a bidding strategy

Decision Variables

The value of the objective is a function of some decision variables:

$$F = f(x_1, x_2, x_3, \ldots, x_n)$$

Examples of decision variables:

Dimensions of the transformer

Output of generating units, position of taps

Parameters of bids for selling electrical energy

Optimization Problem

What value should the decision variables take so that $F = f(x_1, x_2, x_3, \ldots, x_n)$ is minimum or maximum?

Example: function of one variable

[Figure: f(x) plotted against x, with its peak f(x*) at x = x*]

f(x) is maximum for x = x*

Minimization and Maximization

[Figure: f(x) with its maximum f(x*) at x = x*, and the mirrored curve -f(x) with its minimum -f(x*) at the same x*]

If x = x* maximizes f(x) then it minimizes -f(x)

Minimization and Maximization

maximizing f(x) is thus the same thing as

minimizing g(x) = -f(x)

Minimization and maximization problems are

thus interchangeable

Depending on the problem, the optimum is

either a maximum or a minimum

Necessary Condition for Optimality

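[Figure: f(x) plotted against x with its maximum f(x*) at x = x*; df/dx > 0 to the left of x* and df/dx < 0 to the right]

If $x = x^*$ maximises $f(x)$ then:

$$f(x) < f(x^*) \text{ for } x < x^* \;\Rightarrow\; \frac{df}{dx} > 0 \text{ for } x < x^*$$

$$f(x) < f(x^*) \text{ for } x > x^* \;\Rightarrow\; \frac{df}{dx} < 0 \text{ for } x > x^*$$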

Necessary Condition for Optimality

[Figure: f(x) plotted against x, with a horizontal tangent (df/dx = 0) at x = x*]

If $x = x^*$ maximises $f(x)$ then

$$\frac{df}{dx} = 0 \text{ for } x = x^*$$

Example

[Figure: a curve f(x) with several peaks and troughs]

For what values of x is $\frac{df}{dx} = 0$?

In other words, for what values of x is the necessary condition for optimality satisfied?

Example

A, B, C, D are stationary points

A and D are maxima

B is a minimum

C is an inflexion point

[Figure: f(x) plotted against x, with the stationary points A, B, C and D marked]

How can we distinguish minima and maxima?

[Figure: f(x) plotted against x, with the stationary points A, B, C and D marked]

For x = A and x = D, we have:

$$\frac{d^2 f}{dx^2} < 0$$

The objective function is concave around a maximum

How can we distinguish minima and maxima?

[Figure: f(x) plotted against x, with the stationary points A, B, C and D marked]

For x = B we have:

$$\frac{d^2 f}{dx^2} > 0$$

The objective function is convex around a minimum

How can we distinguish minima and maxima?

[Figure: f(x) plotted against x, with the stationary points A, B, C and D marked]

For x = C, we have:

$$\frac{d^2 f}{dx^2} = 0$$

The objective function is flat around an inflexion point

Necessary and Sufficient Conditions of Optimality

Necessary condition:

$$\frac{df}{dx} = 0$$

Sufficient condition:

For a maximum: $\frac{d^2 f}{dx^2} < 0$

For a minimum: $\frac{d^2 f}{dx^2} > 0$
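As a rough numerical illustration of these conditions, the sketch below estimates the first and second derivatives by finite differences and classifies a candidate point. The function names, step sizes, tolerance and the test function $x^3 - 3x$ are all assumptions made for this example.

```python
def first_derivative(f, x, h=1e-6):
    """Central finite-difference estimate of df/dx at x."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


def second_derivative(f, x, h=1e-4):
    """Central finite-difference estimate of d2f/dx2 at x."""
    return (f(x + h) - 2.0 * f(x) + f(x - h)) / (h * h)


def classify(f, x, tol=1e-3):
    """Apply the necessary condition, then the sufficient condition."""
    if abs(first_derivative(f, x)) > tol:
        return "not stationary"
    curvature = second_derivative(f, x)
    if curvature > tol:
        return "minimum"
    if curvature < -tol:
        return "maximum"
    return "inconclusive"  # d2f/dx2 = 0, e.g. an inflexion point


def cubic(x):
    """Hypothetical test function with stationary points at x = -1 and x = 1."""
    return x ** 3 - 3.0 * x


results = {x: classify(cubic, x) for x in (-1.0, 0.0, 1.0)}
flat_case = classify(lambda x: x ** 3, 0.0)
```

The `inconclusive` branch corresponds to a zero second derivative, where higher-order information would be needed.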

Isn't all this obvious?

Can't we tell all this by looking at the objective function?

Yes, for a simple, one-dimensional case when we know the shape of the objective function

For complex, multi-dimensional cases (i.e. with many decision variables) we can't visualize the shape of the objective function

We must then rely on mathematical techniques

Feasible Set

The values that the decision variables can take

are usually limited

Examples:

Physical dimensions of a transformer must be

positive

Active power output of a generator may be

limited to a certain range (e.g. 200 MW to 500

MW)

Reactive power output of a generator may be

limited to a certain range (e.g. -100 MVAr to 150

MVAr)

Feasible Set

[Figure: f(x) plotted against x, with the feasible set lying between x^MIN and x^MAX and the maxima A and D marked]

The values of the objective function outside the feasible set do not matter

Interior and Boundary Solutions

A and D are interior maxima

B and E are interior minima

$x^{MIN}$ is a boundary minimum

$x^{MAX}$ is a boundary maximum

The boundary solutions do not satisfy the optimality conditions!

[Figure: f(x) over the feasible set from x^MIN to x^MAX, with the interior maxima A and D and the interior minima B and E marked]
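A small sketch of how boundary solutions can be handled in one dimension: compare the objective at the feasible stationary points and at the two limits. The helper name, the test function and the interval are assumptions chosen for illustration.

```python
def constrained_extrema(f, stationary_points, x_min, x_max):
    """Return (argmin, argmax) of f over the feasible set [x_min, x_max].

    Candidates are the two boundaries plus the stationary points that
    fall strictly inside the feasible set.
    """
    interior = [x for x in stationary_points if x_min < x < x_max]
    candidates = [x_min, x_max] + interior
    return min(candidates, key=f), max(candidates, key=f)


def cubic(x):
    """Hypothetical objective with stationary points at x = -1 and x = 1."""
    return x ** 3 - 3.0 * x


def cubic_slope(x):
    """Analytical derivative of the hypothetical objective."""
    return 3.0 * x ** 2 - 3.0


x_lo, x_hi = constrained_extrema(cubic, [-1.0, 1.0], x_min=-3.0, x_max=0.5)
slope_at_minimum = cubic_slope(x_lo)
slope_at_maximum = cubic_slope(x_hi)
```

Here the maximum is an interior point where the derivative is zero, while the minimum sits on the lower boundary, where the derivative is far from zero.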

Two-Dimensional Case

[Figure: surface plot of f(x1, x2) over the (x1, x2) plane, with its lowest point above (x1*, x2*)]

$f(x_1, x_2)$ is minimum for $x_1^*, x_2^*$

Necessary Conditions for Optimality

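[Figure: surface plot of f(x1, x2) over the (x1, x2) plane, with its lowest point above (x1*, x2*)]

$$\left. \frac{\partial f(x_1, x_2)}{\partial x_1} \right|_{x_1^*, x_2^*} = 0$$

$$\left. \frac{\partial f(x_1, x_2)}{\partial x_2} \right|_{x_1^*, x_2^*} = 0$$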

Multi-Dimensional Case

At a maximum or minimum value of $f(x_1, x_2, x_3, \ldots, x_n)$ we must have:

$$\frac{\partial f}{\partial x_1} = 0, \quad \frac{\partial f}{\partial x_2} = 0, \quad \ldots, \quad \frac{\partial f}{\partial x_n} = 0$$

A point where these conditions are satisfied is called a stationary point

Sufficient Conditions for Optimality

[Figure: surface plots of f(x1, x2) showing a minimum and a maximum]

Sufficient Conditions for Optimality

[Figure: surface plot of f(x1, x2) showing a saddle point]

Sufficient Conditions for Optimality

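Calculate the Hessian matrix at the stationary point:

$$\begin{pmatrix}
\frac{\partial^2 f}{\partial x_1^2} & \frac{\partial^2 f}{\partial x_1 \partial x_2} & \cdots & \frac{\partial^2 f}{\partial x_1 \partial x_n} \\
\frac{\partial^2 f}{\partial x_2 \partial x_1} & \frac{\partial^2 f}{\partial x_2^2} & \cdots & \frac{\partial^2 f}{\partial x_2 \partial x_n} \\
\vdots & \vdots & \ddots & \vdots \\
\frac{\partial^2 f}{\partial x_n \partial x_1} & \frac{\partial^2 f}{\partial x_n \partial x_2} & \cdots & \frac{\partial^2 f}{\partial x_n^2}
\end{pmatrix}$$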

Sufficient Conditions for Optimality

Calculate the eigenvalues of the Hessian matrix at the stationary point

If all the eigenvalues are greater than zero:

The matrix is positive definite

The stationary point is a minimum

If all the eigenvalues are less than zero:

The matrix is negative definite

The stationary point is a maximum

If some of the eigenvalues are positive and others are negative:

The stationary point is a saddle point

If some eigenvalues are zero and the others all have the same sign:

The matrix is only semi-definite and this test is inconclusive
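For two decision variables the eigenvalue test can be written out directly, since the eigenvalues of a symmetric 2x2 matrix have a closed form. The sketch below is one possible implementation; the function names and the tolerance are assumptions.

```python
import math


def eigenvalues_2x2(h11, h12, h22):
    """Eigenvalues of the symmetric matrix [[h11, h12], [h12, h22]]."""
    mean = 0.5 * (h11 + h22)
    radius = math.hypot(0.5 * (h11 - h22), h12)
    return mean - radius, mean + radius


def classify_stationary_point(h11, h12, h22, tol=1e-12):
    """Classify a stationary point from the eigenvalues of its Hessian."""
    lowest, highest = eigenvalues_2x2(h11, h12, h22)
    if lowest > tol:
        return "minimum"        # all eigenvalues positive
    if highest < -tol:
        return "maximum"        # all eigenvalues negative
    if lowest < -tol and highest > tol:
        return "saddle point"   # eigenvalues of both signs
    return "inconclusive"       # at least one eigenvalue is zero


# Hypothetical Hessians used only to exercise each branch.
cases = {
    "bowl": classify_stationary_point(2.0, -2.0, 8.0),
    "dome": classify_stationary_point(-1.0, 0.0, -4.0),
    "saddle": classify_stationary_point(-2.0, 2.0, 6.0),
    "flat": classify_stationary_point(0.0, 0.0, 1.0),
}
```

For more than two variables a numerical eigenvalue routine would be needed instead of the closed form.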

Contours

[Figure: surface f(x1, x2) cut at the levels F1 and F2, with the corresponding contours F1 and F2 drawn in the (x1, x2) plane]

Contours

[Figure: nested contours in the (x1, x2) plane closing in on a minimum or maximum]

A contour is the locus of all the points that give the same value to the objective function

Example 1

Minimise $C = x_1^2 + 4 x_2^2 - 2 x_1 x_2$

Necessary conditions for optimality:

$$\frac{\partial C}{\partial x_1} = 2 x_1 - 2 x_2 = 0$$

$$\frac{\partial C}{\partial x_2} = -2 x_1 + 8 x_2 = 0$$

$x_1 = 0$, $x_2 = 0$ is a stationary point

Example 1

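Sufficient conditions for optimality:

Hessian Matrix:

$$\begin{pmatrix} \frac{\partial^2 C}{\partial x_1^2} & \frac{\partial^2 C}{\partial x_1 \partial x_2} \\ \frac{\partial^2 C}{\partial x_2 \partial x_1} & \frac{\partial^2 C}{\partial x_2^2} \end{pmatrix} = \begin{pmatrix} 2 & -2 \\ -2 & 8 \end{pmatrix}$$

must be positive definite (i.e. all eigenvalues must be positive)

$$\begin{vmatrix} 2 - \lambda & -2 \\ -2 & 8 - \lambda \end{vmatrix} = 0 \;\Rightarrow\; \lambda^2 - 10 \lambda + 12 = 0 \;\Rightarrow\; \lambda = \frac{10 \pm \sqrt{52}}{2} > 0$$

The stationary point is a minimum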

Example 1

[Figure: contours C = 1, C = 4 and C = 9 in the (x1, x2) plane, surrounding the minimum C = 0]

Example 2

Minimize $C = -x_1^2 + 3 x_2^2 + 2 x_1 x_2$

Necessary conditions for optimality:

$$\frac{\partial C}{\partial x_1} = -2 x_1 + 2 x_2 = 0$$

$$\frac{\partial C}{\partial x_2} = 2 x_1 + 6 x_2 = 0$$

$x_1 = 0$, $x_2 = 0$ is a stationary point

Example 2

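Sufficient conditions for optimality:

Hessian Matrix:

$$\begin{pmatrix} \frac{\partial^2 C}{\partial x_1^2} & \frac{\partial^2 C}{\partial x_1 \partial x_2} \\ \frac{\partial^2 C}{\partial x_2 \partial x_1} & \frac{\partial^2 C}{\partial x_2^2} \end{pmatrix} = \begin{pmatrix} -2 & 2 \\ 2 & 6 \end{pmatrix}$$

$$\begin{vmatrix} -2 - \lambda & 2 \\ 2 & 6 - \lambda \end{vmatrix} = 0 \;\Rightarrow\; \lambda^2 - 4 \lambda - 16 = 0$$

$$\lambda = \frac{4 + \sqrt{80}}{2} > 0 \quad \text{or} \quad \lambda = \frac{4 - \sqrt{80}}{2} < 0$$

The stationary point is a saddle point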
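A quick way to see the saddle is to evaluate the quadratic form built from this Hessian along each axis. The sketch below assumes the objective is the pure quadratic $C(x) = \frac{1}{2} x^T H x$ with $H$ the 2x2 Hessian of this example; the helper name is hypothetical.

```python
# Hessian of Example 2, rows (d2C/dx1^2, d2C/dx1dx2) and (d2C/dx2dx1, d2C/dx2^2).
HESSIAN = ((-2.0, 2.0), (2.0, 6.0))


def quadratic_form(x1, x2, h=HESSIAN):
    """C(x) = 1/2 * x^T H x for a symmetric 2x2 matrix H."""
    return 0.5 * (h[0][0] * x1 * x1 + 2.0 * h[0][1] * x1 * x2 + h[1][1] * x2 * x2)


# Moving away from the stationary point (0, 0) along each axis.
along_x1 = [quadratic_form(t, 0.0) for t in (1.0, 2.0, 3.0)]
along_x2 = [quadratic_form(0.0, t) for t in (1.0, 2.0, 3.0)]
```

The objective falls along the x1 axis and rises along the x2 axis, so the stationary point is neither a minimum nor a maximum.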

Example 2

[Figure: contours in the (x1, x2) plane for C = 1, 4, 9 and for C = -1, -4, -9, separated by the two C = 0 lines that cross at the stationary point]

This stationary point is a saddle point

Optimization with Constraints

Optimization with Equality Constraints

There are usually restrictions on the values

that the decision variables can take

Objective function:

Minimise $f(x_1, x_2, \ldots, x_n)$

Equality constraints:

subject to:

$$\omega_1(x_1, x_2, \ldots, x_n) = 0$$

$$\vdots$$

$$\omega_m(x_1, x_2, \ldots, x_n) = 0$$

Number of Constraints

N decision variables

M equality constraints

If M > N, the problem is over-constrained

There is usually no solution

If M = N, the problem is determined

There may be a solution

If M < N, the problem is under-constrained

There is usually room for optimization

Example 1

Minimise $f(x_1, x_2) = 0.25 x_1^2 + x_2^2$

Subject to $\omega(x_1, x_2) \equiv 5 - x_1 - x_2 = 0$

[Figure: the (x1, x2) plane showing the constraint line ω(x1, x2) ≡ 5 - x1 - x2 = 0 and the contours of f(x1, x2) = 0.25 x1² + x2², with the minimum marked on the constraint]

Example 2: Economic Dispatch

[Figure: generators G1 and G2, with outputs x1 and x2, supplying the load L]

Cost of running unit 1:

$$C_1 = a_1 + b_1 x_1^2$$

Cost of running unit 2:

$$C_2 = a_2 + b_2 x_2^2$$

Total cost:

$$C = C_1 + C_2 = a_1 + a_2 + b_1 x_1^2 + b_2 x_2^2$$

Optimization problem:

Minimise $C = a_1 + a_2 + b_1 x_1^2 + b_2 x_2^2$

Subject to: $x_1 + x_2 = L$

Solution by substitution

Minimise $C = a_1 + a_2 + b_1 x_1^2 + b_2 x_2^2$

Subject to: $x_1 + x_2 = L$

$$\Rightarrow x_2 = L - x_1$$

$$\Rightarrow C = a_1 + a_2 + b_1 x_1^2 + b_2 (L - x_1)^2$$

Unconstrained minimization

$$\frac{dC}{dx_1} = 2 b_1 x_1 - 2 b_2 (L - x_1) = 0$$

$$\Rightarrow x_1 = \frac{b_2 L}{b_1 + b_2} \qquad \left( \Rightarrow x_2 = \frac{b_1 L}{b_1 + b_2} \right)$$

$$\frac{d^2 C}{dx_1^2} = 2 b_1 + 2 b_2 > 0 \;\Rightarrow\; \text{minimum}$$
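The closed-form result can be checked numerically against a brute-force scan of the substituted cost function. The coefficient values and the load below are made up for illustration, and the function names are hypothetical.

```python
def dispatch_two_units(b1, b2, load):
    """Closed-form solution obtained by substituting x2 = L - x1."""
    x1 = b2 * load / (b1 + b2)
    x2 = b1 * load / (b1 + b2)
    return x1, x2


def total_cost(a1, a2, b1, b2, x1, x2):
    """C = a1 + a2 + b1*x1^2 + b2*x2^2."""
    return a1 + a2 + b1 * x1 ** 2 + b2 * x2 ** 2


# Illustrative coefficients and load (not from the slides).
a1, a2, b1, b2, load = 100.0, 80.0, 0.02, 0.03, 500.0

x1, x2 = dispatch_two_units(b1, b2, load)
optimal_cost = total_cost(a1, a2, b1, b2, x1, x2)

# Brute-force check: scan x1 in 1 MW steps with x2 = L - x1.
best_x1 = min(
    range(0, int(load) + 1),
    key=lambda p: total_cost(a1, a2, b1, b2, p, load - p),
)
```

With these numbers the unit with the smaller quadratic coefficient takes the larger share of the load, and the scan lands on the same operating point.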

Solution by substitution

Difficult

Usually impossible when constraints are non-linear

Provides little or no insight into solution

Solution using Lagrange multipliers

Gradient

Consider a function $f(x_1, x_2, \ldots, x_n)$

The gradient of f is the vector

$$\nabla f = \begin{pmatrix} \frac{\partial f}{\partial x_1} \\ \frac{\partial f}{\partial x_2} \\ \vdots \\ \frac{\partial f}{\partial x_n} \end{pmatrix}$$

Properties of the Gradient

Each component of the gradient vector

indicates the rate of change of the function in

that direction

The gradient indicates the direction in which a

function of several variables increases most

rapidly

The magnitude and direction of the gradient

usually depend on the point considered

At each point, the gradient is perpendicular to

the contour of the function
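These properties can be checked numerically on a simple function. The sketch below assumes $f(x, y) = a x^2 + b y^2$ with $a = 1$ and $b = 2$, a test point and a step length that are all chosen only for illustration.

```python
import math


def f(x, y, a=1.0, b=2.0):
    """Hypothetical test function f(x, y) = a*x^2 + b*y^2."""
    return a * x * x + b * y * y


def gradient(x, y, a=1.0, b=2.0):
    """Analytical gradient of the test function."""
    return (2.0 * a * x, 2.0 * b * y)


def step(point, direction, length):
    """Move from point by the given length along a (normalized) direction."""
    norm = math.hypot(direction[0], direction[1])
    return (
        point[0] + length * direction[0] / norm,
        point[1] + length * direction[1] / norm,
    )


point = (1.0, 1.0)
g = gradient(*point)
tangent = (-g[1], g[0])  # perpendicular to the gradient, i.e. along the contour
length = 1e-3

rise_along_gradient = f(*step(point, g, length)) - f(*point)
rise_along_tangent = f(*step(point, tangent, length)) - f(*point)
```

A short step along the gradient raises the function by roughly the gradient magnitude times the step length, while the same step along the contour changes it only to second order.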

Example 3

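$$f(x, y) = a x^2 + b y^2$$

$$\nabla f = \begin{pmatrix} \frac{\partial f}{\partial x} \\ \frac{\partial f}{\partial y} \end{pmatrix} = \begin{pmatrix} 2 a x \\ 2 b y \end{pmatrix}$$

[Figure: contours of f in the (x, y) plane, with the gradient drawn at the points A, B, C and D]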

Example 4

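$$f(x, y) = a x + b y$$

$$\nabla f = \begin{pmatrix} \frac{\partial f}{\partial x} \\ \frac{\partial f}{\partial y} \end{pmatrix} = \begin{pmatrix} a \\ b \end{pmatrix}$$

[Figure: straight-line contours f = f1, f = f2 and f = f3 in the (x, y) plane, with the same gradient vector ∇f drawn on each]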

Lagrange multipliers

Minimise $f(x_1, x_2) = 0.25 x_1^2 + x_2^2$

subject to $\omega(x_1, x_2) \equiv 5 - x_1 - x_2 = 0$

[Figure: contours f = 0.25 x1² + x2² = 6 and f = 0.25 x1² + x2² = 5, together with the constraint line ω(x1, x2) ≡ 5 - x1 - x2]

Lagrange multipliers

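[Figure: contours f(x1, x2) = 6 and f(x1, x2) = 5, with the gradient ∇f drawn at several points]

$$\nabla f = \begin{pmatrix} \frac{\partial f}{\partial x_1} \\ \frac{\partial f}{\partial x_2} \end{pmatrix}$$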

Lagrange multipliers

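[Figure: contours f(x1, x2) = 6 and f(x1, x2) = 5 and the constraint ω(x1, x2), with the gradient ∇ω drawn at several points of the constraint]

$$\nabla \omega = \begin{pmatrix} \frac{\partial \omega}{\partial x_1} \\ \frac{\partial \omega}{\partial x_2} \end{pmatrix}$$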

Lagrange multipliers

[Figure: contours f(x1, x2) = 6 and f(x1, x2) = 5 and the constraint ω(x1, x2), with the gradient ∇f drawn at the points A and B on the constraint]

The solution must be on the constraint

To reduce the value of f, we must move in a direction opposite to the gradient

Lagrange multipliers

[Figure: contours f(x1, x2) = 6 and f(x1, x2) = 5 and the constraint ω(x1, x2), with the gradients ∇f and ∇ω drawn at the points A, B and C on the constraint]

We stop when the gradient of the function is perpendicular to the constraint because moving further would increase the value of the function

At the optimum, the gradient of the function is parallel to the gradient of the constraint

Lagrange multipliers

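At the optimum, we must have:

$$\nabla f \parallel \nabla \omega$$

Which can be expressed as:

$$\nabla f + \lambda \nabla \omega = 0$$

In terms of the co-ordinates:

$$\frac{\partial f}{\partial x_1} + \lambda \frac{\partial \omega}{\partial x_1} = 0$$

$$\frac{\partial f}{\partial x_2} + \lambda \frac{\partial \omega}{\partial x_2} = 0$$

$\lambda$ is called the Lagrange multiplier

The constraint must also be satisfied:

$$\omega(x_1, x_2) = 0$$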

Lagrangian function

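To simplify the writing of the conditions for optimality, it is useful to define the Lagrangian function:

$$\ell(x_1, x_2, \lambda) = f(x_1, x_2) + \lambda \, \omega(x_1, x_2)$$

The necessary conditions for optimality are then given by the partial derivatives of the Lagrangian:

$$\frac{\partial \ell(x_1, x_2, \lambda)}{\partial x_1} = \frac{\partial f}{\partial x_1} + \lambda \frac{\partial \omega}{\partial x_1} = 0$$

$$\frac{\partial \ell(x_1, x_2, \lambda)}{\partial x_2} = \frac{\partial f}{\partial x_2} + \lambda \frac{\partial \omega}{\partial x_2} = 0$$

$$\frac{\partial \ell(x_1, x_2, \lambda)}{\partial \lambda} = \omega(x_1, x_2) = 0$$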

Example

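Minimise $f(x_1, x_2) = 0.25 x_1^2 + x_2^2$

subject to $\omega(x_1, x_2) \equiv 5 - x_1 - x_2 = 0$

$$\ell(x_1, x_2, \lambda) = 0.25 x_1^2 + x_2^2 + \lambda (5 - x_1 - x_2)$$

$$\frac{\partial \ell(x_1, x_2, \lambda)}{\partial x_1} \equiv 0.5 x_1 - \lambda = 0$$

$$\frac{\partial \ell(x_1, x_2, \lambda)}{\partial x_2} \equiv 2 x_2 - \lambda = 0$$

$$\frac{\partial \ell(x_1, x_2, \lambda)}{\partial \lambda} \equiv 5 - x_1 - x_2 = 0$$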

Example

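$$\frac{\partial \ell(x_1, x_2, \lambda)}{\partial x_1} \equiv 0.5 x_1 - \lambda = 0 \;\Rightarrow\; x_1 = 2 \lambda$$

$$\frac{\partial \ell(x_1, x_2, \lambda)}{\partial x_2} \equiv 2 x_2 - \lambda = 0 \;\Rightarrow\; x_2 = \tfrac{1}{2} \lambda$$

$$\frac{\partial \ell(x_1, x_2, \lambda)}{\partial \lambda} \equiv 5 - x_1 - x_2 = 0 \;\Rightarrow\; 5 - 2 \lambda - \tfrac{1}{2} \lambda = 0$$

$$\Rightarrow \lambda = 2, \quad x_1 = 4, \quad x_2 = 1$$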
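Because the objective is quadratic and the constraint is linear, the three optimality conditions of this example form a linear system in $x_1$, $x_2$ and $\lambda$. The sketch below solves it with a small exact Gauss-Jordan routine; `solve_linear` is a hypothetical helper written for this illustration, not a library function.

```python
from fractions import Fraction


def solve_linear(a, b):
    """Solve a x = b by Gauss-Jordan elimination with exact fractions.

    Assumes the matrix is square and non-singular.
    """
    n = len(b)
    m = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        # Pick a row with a non-zero entry in this column and move it up.
        pivot = next(r for r in range(col, n) if m[r][col] != 0)
        m[col], m[pivot] = m[pivot], m[col]
        m[col] = [v / m[col][col] for v in m[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                factor = m[r][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [row[n] for row in m]


# Unknowns ordered as (x1, x2, lambda).
coefficients = [
    [Fraction(1, 2), 0, -1],  # 0.5*x1 - lambda = 0
    [0, 2, -1],               # 2*x2 - lambda = 0
    [1, 1, 0],                # x1 + x2 = 5
]
right_hand_side = [0, 0, 5]

x1, x2, lam = solve_linear(coefficients, right_hand_side)
f_min = Fraction(1, 4) * x1 ** 2 + x2 ** 2
```

The solution reproduces the values found by hand, and the objective at that point is 5.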

Example

Minimise $f(x_1, x_2) = 0.25 x_1^2 + x_2^2$

Subject to $\omega(x_1, x_2) \equiv 5 - x_1 - x_2 = 0$

[Figure: the (x1, x2) plane showing the constraint line ω(x1, x2) ≡ 5 - x1 - x2 = 0 and the contour f(x1, x2) = 5, with the minimum at x1 = 4, x2 = 1]

Important Note!

If the constraint is of the form:

$$a x_1 + b x_2 = L$$

It must be included in the Lagrangian as follows:

$$\ell = f(x_1, \ldots, x_n) + \lambda (L - a x_1 - b x_2)$$

And not as follows:

$$\ell = f(x_1, \ldots, x_n) + \lambda (a x_1 + b x_2)$$

Application to Economic Dispatch

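[Figure: generators G1 and G2, with outputs x1 and x2, supplying the load L]

minimise $f(x_1, x_2) = C_1(x_1) + C_2(x_2)$

s.t. $\omega(x_1, x_2) \equiv L - x_1 - x_2 = 0$

$$\ell(x_1, x_2, \lambda) = C_1(x_1) + C_2(x_2) + \lambda (L - x_1 - x_2)$$

$$\frac{\partial \ell}{\partial x_1} \equiv \frac{dC_1}{dx_1} - \lambda = 0$$

$$\frac{\partial \ell}{\partial x_2} \equiv \frac{dC_2}{dx_2} - \lambda = 0$$

$$\frac{\partial \ell}{\partial \lambda} \equiv L - x_1 - x_2 = 0$$

Equal incremental cost solution:

$$\frac{dC_1}{dx_1} = \frac{dC_2}{dx_2} = \lambda$$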

Equal incremental cost solution

[Figure: cost curves C1(x1) and C2(x2), and the corresponding incremental cost curves dC1/dx1 and dC2/dx2]

Interpretation of this solution

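[Figure: incremental cost curves dC1/dx1 and dC2/dx2 cut by a common value λ, which gives the outputs x1* and x2*; the mismatch L - x1* - x2* is fed back to adjust λ]

If $L - x_1^* - x_2^* < 0$, reduce $\lambda$

If $L - x_1^* - x_2^* > 0$, increase $\lambda$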
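The adjustment rule above suggests an iterative search on $\lambda$. The sketch below implements it as a bisection, assuming cost curves of the form $C_i = a_i + b_i x_i^2$ so that each unit's output at a given $\lambda$ is $x_i = \lambda / (2 b_i)$. The bracket on $\lambda$, the tolerance, the coefficients and the load are assumptions, and unit limits are ignored.

```python
def lambda_iteration(b, load, lam_low=0.0, lam_high=1000.0, tol=1e-9, max_iter=200):
    """Bisection on lambda until generation matches the load.

    b holds the quadratic coefficients b_i of C_i = a_i + b_i * x_i^2.
    The initial bracket [lam_low, lam_high] is assumed to contain the answer.
    """
    lam, outputs = lam_low, [0.0 for _ in b]
    for _ in range(max_iter):
        lam = 0.5 * (lam_low + lam_high)
        # Equal incremental cost: 2 * b_i * x_i = lambda for every unit.
        outputs = [lam / (2.0 * bi) for bi in b]
        mismatch = load - sum(outputs)
        if abs(mismatch) < tol:
            break
        if mismatch < 0:
            lam_high = lam  # too much generation: reduce lambda
        else:
            lam_low = lam   # too little generation: increase lambda
    return lam, outputs


# Illustrative data (not from the slides).
lam, outputs = lambda_iteration(b=[0.02, 0.03], load=500.0)
```

With these numbers the search settles near 12 $/MWh, with the two units producing about 300 MW and 200 MW.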

Physical interpretation

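[Figure: cost curve C(x) with a small step Δx in output and the resulting step ΔC in cost, above the incremental cost curve dC(x)/dx]

$$\frac{dC}{dx} = \lim_{\Delta x \to 0} \frac{\Delta C}{\Delta x}$$

For $\Delta x$ sufficiently small:

$$\Delta C \approx \frac{dC}{dx} \, \Delta x$$

If $\Delta x = 1\ \text{MW}$:

$$\Delta C \approx \frac{dC}{dx}$$

The incremental cost is the cost of one additional MW for one hour.

This cost depends on the output of the generator.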
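A short numerical check of this approximation, assuming a hypothetical quadratic cost curve $C(x) = a + b x^2$ with made-up coefficients:

```python
def cost(x, a=100.0, b=0.02):
    """Hypothetical cost curve in $/h for an output of x MW."""
    return a + b * x * x


def incremental_cost(x, b=0.02):
    """Derivative of the hypothetical cost curve, in $/MWh."""
    return 2.0 * b * x


x = 300.0
exact_increase = cost(x + 1.0) - cost(x)  # cost of one more MW for one hour
estimate = incremental_cost(x)            # dC/dx evaluated at the current output
```

At 300 MW the derivative gives 12 $/h for the extra MW against an exact increase of 12.02 $/h, and both values would change at a different output level.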

Physical interpretation

$\frac{dC_1}{dx_1}$: Cost of one more MW from unit 1

$\frac{dC_2}{dx_2}$: Cost of one more MW from unit 2

Suppose that $\frac{dC_1}{dx_1} > \frac{dC_2}{dx_2}$

Decrease output of unit 1 by 1 MW $\Rightarrow$ decrease in cost = $\frac{dC_1}{dx_1}$

Increase output of unit 2 by 1 MW $\Rightarrow$ increase in cost = $\frac{dC_2}{dx_2}$

Net change in cost = $\frac{dC_2}{dx_2} - \frac{dC_1}{dx_1} < 0$

Physical interpretation

It pays to increase the output of unit 2 and decrease the output of unit 1 until we have:

$$\frac{dC_1}{dx_1} = \frac{dC_2}{dx_2} = \lambda$$

The Lagrange multiplier $\lambda$ is thus the cost of one more MW at the optimal solution.

This is a very important result with many applications in economics.

Generalization

Minimise $f(x_1, x_2, \ldots, x_n)$

subject to:

$$\omega_1(x_1, x_2, \ldots, x_n) = 0$$

$$\vdots$$

$$\omega_m(x_1, x_2, \ldots, x_n) = 0$$

Lagrangian:

$$\ell = f(x_1, \ldots, x_n) + \lambda_1 \omega_1(x_1, \ldots, x_n) + \cdots + \lambda_m \omega_m(x_1, \ldots, x_n)$$

One Lagrange multiplier for each constraint

n + m variables: $x_1, \ldots, x_n$ and $\lambda_1, \ldots, \lambda_m$

Optimality conditions

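$$\ell = f(x_1, \ldots, x_n) + \lambda_1 \omega_1(x_1, \ldots, x_n) + \cdots + \lambda_m \omega_m(x_1, \ldots, x_n)$$

n equations:

$$\frac{\partial \ell}{\partial x_1} = \frac{\partial f}{\partial x_1} + \lambda_1 \frac{\partial \omega_1}{\partial x_1} + \cdots + \lambda_m \frac{\partial \omega_m}{\partial x_1} = 0$$

$$\vdots$$

$$\frac{\partial \ell}{\partial x_n} = \frac{\partial f}{\partial x_n} + \lambda_1 \frac{\partial \omega_1}{\partial x_n} + \cdots + \lambda_m \frac{\partial \omega_m}{\partial x_n} = 0$$

m equations:

$$\frac{\partial \ell}{\partial \lambda_1} = \omega_1(x_1, \ldots, x_n) = 0$$

$$\vdots$$

$$\frac{\partial \ell}{\partial \lambda_m} = \omega_m(x_1, \ldots, x_n) = 0$$

n + m equations in n + m variables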

You might also like

- Untitled PDFDocument1 pageUntitled PDFWaqas HassanNo ratings yet

- Door Opener Website InfoDocument3 pagesDoor Opener Website InfoWaqas HassanNo ratings yet

- Laplace For EngineersDocument37 pagesLaplace For EngineersKaran_Chadha_8531No ratings yet

- Ogan Modern Control Theory Chapter13 Design of Linear Feedback Control SystemsDocument58 pagesOgan Modern Control Theory Chapter13 Design of Linear Feedback Control SystemsWaqas Hassan100% (1)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (890)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (72)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Ds Brief ProBee-ZS10Document2 pagesDs Brief ProBee-ZS10firosekhanNo ratings yet

- Niskama KarmaDocument4 pagesNiskama Karmachitta84No ratings yet

- 08 Property Risk Survey Risk AssessmentDocument20 pages08 Property Risk Survey Risk AssessmentRezza SiburianNo ratings yet

- Sensor EFI Toyota Corona 4A-FEDocument8 pagesSensor EFI Toyota Corona 4A-FEKrisma Triyadi100% (1)

- Contactos Bioseguridad Covid19Document10 pagesContactos Bioseguridad Covid19Aldo Ezequilla RamirezNo ratings yet

- Warhammer 50k - The Shape of The Nightmare To ComeDocument115 pagesWarhammer 50k - The Shape of The Nightmare To ComeCallum MacAlister100% (1)

- Test Suite - DIGSI5Document12 pagesTest Suite - DIGSI5Matija KosNo ratings yet

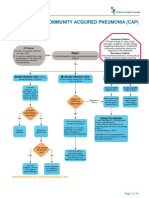

- Complicated Community Acquired Pneumonia Clinical PathwayDocument14 pagesComplicated Community Acquired Pneumonia Clinical PathwayFaisalMuhamadNo ratings yet

- Boq - Fencing EstimateDocument2 pagesBoq - Fencing EstimateAugustine Believe100% (3)

- Ornithology Center Thesis SynopsisDocument6 pagesOrnithology Center Thesis SynopsisprasannaNo ratings yet

- Application Engineering BulletinDocument11 pagesApplication Engineering BulletinCesar G.No ratings yet

- Air Asia India - x1Document11 pagesAir Asia India - x1arshdeep1990100% (1)

- Chiller Cooling Tower AHUDocument9 pagesChiller Cooling Tower AHUAli Hassan RazaNo ratings yet

- Course Completion Certificate 12 ADocument26 pagesCourse Completion Certificate 12 ABabu BalaramanNo ratings yet

- The Vampire: by H.S. OlcottDocument8 pagesThe Vampire: by H.S. OlcottAlisson Heliana Cabrera MocetónNo ratings yet

- Português Español Italiano Français English DeutschDocument2 pagesPortuguês Español Italiano Français English DeutschgandroiidNo ratings yet

- Layout Plan: TOTAL 630sftDocument1 pageLayout Plan: TOTAL 630sftSyed Mohammad Saad FarooqNo ratings yet

- Designing Winning PalatantsDocument12 pagesDesigning Winning PalatantsDante Lertora BavestrelloNo ratings yet

- Assighment 3Document7 pagesAssighment 3Samih S. BarzaniNo ratings yet

- HoneywellControLinksS7999ConfigurationDisplay 732Document32 pagesHoneywellControLinksS7999ConfigurationDisplay 732Cristobal GuzmanNo ratings yet

- BCC 47BFinal PDFDocument545 pagesBCC 47BFinal PDFDragomir GospodinovNo ratings yet

- Jung, Carl Gustav - Volume 9 - The Archetypes of The Collective UnconsciousDocument26 pagesJung, Carl Gustav - Volume 9 - The Archetypes of The Collective UnconsciousKris StarrNo ratings yet

- ESP32 Using PWMDocument7 pagesESP32 Using PWMMarcos TrejoNo ratings yet

- Remembering Zulu, our beloved pet dog who taught unconditional loveDocument3 pagesRemembering Zulu, our beloved pet dog who taught unconditional loveAnandan Menon100% (1)

- BRC Order of Prayer PDFDocument20 pagesBRC Order of Prayer PDFMaryvincoNo ratings yet

- Sony HCD-GNX60Document76 pagesSony HCD-GNX60kalentoneschatNo ratings yet

- A Novel Op-Amp Based LC Oscillator For Wireless CommunicationsDocument6 pagesA Novel Op-Amp Based LC Oscillator For Wireless CommunicationsblackyNo ratings yet

- Patient Information: Corp.: Bill To:: Doc. No: LPL/CLC/QF/2806Document1 pagePatient Information: Corp.: Bill To:: Doc. No: LPL/CLC/QF/2806Modi joshiNo ratings yet

- Discovering Computers 2011: Living in A Digital WorldDocument36 pagesDiscovering Computers 2011: Living in A Digital WorlddewifokNo ratings yet