Design and Analysis of Algorithm

Uploaded by

Muhammad Kazim

Dynamic Programming

What is Dynamic Programming?

Dynamic programming solves optimization problems by combining solutions to subproblems.

Recall the divide-and-conquer approach:

Partition the problem into independent subproblems

Solve the subproblems recursively

Combine solutions of subproblems

This contrasts with the dynamic programming approach.

Dynamic programming is applicable when subproblems are not independent, i.e., subproblems share subsubproblems.

Solve every subsubproblem only once and store the answer for use when it reappears.

Divide and conquer vs. dynamic programming
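As a small illustration of this contrast, the sketch below uses Fibonacci numbers. This example is not from the slides; it is a hypothetical choice made only because its subproblems overlap heavily. `fib_naive` recomputes shared subproblems in plain divide-and-conquer style, while `fib_memo` stores each answer the first time it is computed.

```python
from functools import lru_cache

calls_naive = 0  # counts how often the naive version is entered


def fib_naive(n):
    """Plain recursion: shared subproblems are solved again and again."""
    global calls_naive
    calls_naive += 1
    return n if n < 2 else fib_naive(n - 1) + fib_naive(n - 2)


@lru_cache(maxsize=None)
def fib_memo(n):
    """Same recurrence, but every subproblem is solved once and cached."""
    return n if n < 2 else fib_memo(n - 1) + fib_memo(n - 2)


if __name__ == "__main__":
    print(fib_naive(20), "computed with", calls_naive, "calls")
    print(fib_memo(20), "computed with", fib_memo.cache_info().misses, "distinct subproblems")
```

The cached version touches each value of `n` once, which is the "solve once and store" idea stated above.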

Elements of DP Algorithms

Sub-structure: decompose the problem into smaller sub-problems. Express the solution of the original problem in terms of solutions for smaller problems.

Table-structure: store the answers to the sub-problems in a table, because sub-problem solutions may be used many times.

Bottom-up computation: combine solutions of smaller sub-problems to solve larger sub-problems, and eventually arrive at a solution to the complete problem.

The shortest path

To find a shortest path in a multi-stage graph, apply the greedy method. The shortest path from S to T:

1 + 2 + 5 = 8

[Figure: multistage graph on the vertices S, A, B, T with edge weights 3, 4, 5, 2, 7, 1, 5, 6]

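The shortest path in multistage graphs

e.g. the greedy method cannot be applied to this case:

(S, A, D, T): 1 + 4 + 18 = 23.

The real shortest path is:

(S, C, F, T): 5 + 2 + 2 = 9.

[Figure: multistage graph with stages {S}, {A, B, C}, {D, E, F}, {T} and edge weights S-A 1, S-B 2, S-C 5, A-D 4, A-E 11, B-D 9, B-E 5, B-F 16, C-F 2, D-T 18, E-T 13, F-T 2]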

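Dynamic programming approach

Dynamic programming approach (forward approach):

d(S, T) = min{1+d(A, T), 2+d(B, T), 5+d(C, T)}

[Figure: S with edges of cost 1, 2, 5 to A, B, C, each followed by the remaining distance d(A, T), d(B, T), d(C, T)]

d(A, T) = min{4+d(D, T), 11+d(E, T)}

= min{4+18, 11+13} = 22.

[Figure: A with edges of cost 4, 11 to D, E, each followed by the remaining distance d(D, T), d(E, T)]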

d(B, T) = min{9+d(D, T), 5+d(E, T), 16+d(F, T)}

= min{9+18, 5+13, 16+2} = 18.

[Figure: B with edges of cost 9, 5, 16 to D, E, F, each followed by the remaining distance d(D, T), d(E, T), d(F, T)]

d(C, T) = min{2+d(F, T)} = 2+2 = 4

d(S, T) = min{1+d(A, T), 2+d(B, T), 5+d(C, T)}

= min{1+22, 2+18, 5+4} = 9.

The above way of reasoning is called backward reasoning.

Backward approach (forward reasoning)

d(S, A) = 1

d(S, B) = 2

d(S, C) = 5

d(S, D) = min{d(S, A)+d(A, D), d(S, B)+d(B, D)}

= min{1+4, 2+9} = 5

d(S, E) = min{d(S, A)+d(A, E), d(S, B)+d(B, E)}

= min{1+11, 2+5} = 7

d(S, F) = min{d(S, B)+d(B, F), d(S, C)+d(C, F)}

= min{2+16, 5+2} = 7

d(S, T) = min{d(S, D)+d(D, T), d(S, E)+d(E, T), d(S, F)+d(F, T)}

= min{5+18, 7+13, 7+2}

= 9
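Both directions of reasoning can be checked with a short sketch. The edge costs are read off the calculations above; the function names `dist_to_target` and `dist_from_source` and the dictionary layout are illustrative assumptions, not part of the slides.

```python
from functools import lru_cache

# Edge costs of the example multistage graph, listed stage by stage.
EDGES = {
    "S": {"A": 1, "B": 2, "C": 5},
    "A": {"D": 4, "E": 11},
    "B": {"D": 9, "E": 5, "F": 16},
    "C": {"F": 2},
    "D": {"T": 18},
    "E": {"T": 13},
    "F": {"T": 2},
    "T": {},
}


@lru_cache(maxsize=None)
def dist_to_target(v, target="T"):
    """Forward approach: d(v, T) = min over edges (v, u) of cost(v, u) + d(u, T)."""
    if v == target:
        return 0
    return min(
        (cost + dist_to_target(u, target) for u, cost in EDGES[v].items()),
        default=float("inf"),
    )


def dist_from_source(source="S"):
    """Backward approach: d(S, v) is filled in stage by stage from the source."""
    dist = {v: float("inf") for v in EDGES}
    dist[source] = 0
    # EDGES lists the vertices in stage order, so a single pass is enough.
    for v in EDGES:
        for u, cost in EDGES[v].items():
            dist[u] = min(dist[u], dist[v] + cost)
    return dist


if __name__ == "__main__":
    print(dist_to_target("S"))
    print(dist_from_source()["T"])
```

Both functions arrive at the same value for d(S, T); they differ only in whether the table is built from the sink or from the source.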

Principle of optimality

Principle of optimality: Suppose that in solving a problem, we have to make a sequence of decisions $D_1, D_2, \ldots, D_n$. If this sequence is optimal, then the last $k$ decisions, $1 < k < n$, must be optimal.

e.g. the shortest path problem

If $i, i_1, i_2, \ldots, j$ is a shortest path from $i$ to $j$, then $i_1, i_2, \ldots, j$ must be a shortest path from $i_1$ to $j$.

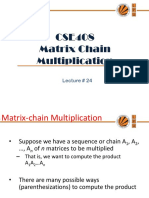

1. Matrix Chain Multiplication

Matrix-chain multiplication problem

Given a chain $A_1, A_2, \ldots, A_n$ of $n$ matrices, with sizes $p_0 \times p_1,\ p_1 \times p_2,\ p_2 \times p_3,\ \ldots,\ p_{n-1} \times p_n$.

Parenthesize the product $A_1 A_2 \cdots A_n$ such that the total number of scalar multiplications is minimized.

Matrix Multiplication

Multiplying a $p \times q$ matrix by a $q \times r$ matrix gives a $p \times r$ matrix.

Number of scalar multiplications = $pqr$

Example

| Matrix | Dimensions |
|---|---|
| $A_1$ | 13 × 5 |
| $A_2$ | 5 × 89 |
| $A_3$ | 89 × 3 |
| $A_4$ | 3 × 34 |

| No. | Parenthesization | Scalar multiplications |
|---|---|---|
| 1 | $((A_1 A_2) A_3) A_4$ | 10,582 |
| 2 | $(A_1 A_2)(A_3 A_4)$ | 54,201 |
| 3 | $(A_1 (A_2 A_3)) A_4$ | 2,856 |
| 4 | $A_1 ((A_2 A_3) A_4)$ | 4,055 |
| 5 | $A_1 (A_2 (A_3 A_4))$ | 26,418 |

For parenthesization 1:

13 × 5 × 89 scalar multiplications to get $(A_1 A_2)$, a 13 × 89 result

13 × 89 × 3 scalar multiplications to get $((A_1 A_2) A_3)$, a 13 × 3 result

13 × 3 × 34 scalar multiplications to get $(((A_1 A_2) A_3) A_4)$, a 13 × 34 result

Dynamic Programming Approach

The structure of an optimal solution

Let us use the notation $A_{i..j}$ for the matrix that results from the product $A_i A_{i+1} \cdots A_j$.

An optimal parenthesization of the product $A_1 A_2 \cdots A_n$ splits the product between $A_k$ and $A_{k+1}$ for some integer $k$ where $1 \le k < n$.

First compute matrices $A_{1..k}$ and $A_{k+1..n}$; then multiply them to get the final matrix $A_{1..n}$.

Key observation: parenthesizations of the subchains $A_1 A_2 \cdots A_k$ and $A_{k+1} A_{k+2} \cdots A_n$ must also be optimal if the parenthesization of the chain $A_1 A_2 \cdots A_n$ is optimal.

That is, the optimal solution to the problem contains within it the optimal solution to subproblems.

Recursive definition of the value of an optimal solution.

Let $m[i, j]$ be the minimum number of scalar multiplications necessary to compute $A_{i..j}$.

Minimum cost to compute $A_{1..n}$ is $m[1, n]$.

Suppose the optimal parenthesization of $A_{i..j}$ splits the product between $A_k$ and $A_{k+1}$ for some integer $k$ where $i \le k < j$.

$A_{i..j} = (A_i A_{i+1} \cdots A_k)(A_{k+1} A_{k+2} \cdots A_j) = A_{i..k} A_{k+1..j}$

Cost of computing $A_{i..j}$ = cost of computing $A_{i..k}$ + cost of computing $A_{k+1..j}$ + cost of multiplying $A_{i..k}$ and $A_{k+1..j}$

Cost of multiplying $A_{i..k}$ and $A_{k+1..j}$ is $p_{i-1} p_k p_j$

$m[i, j] = m[i, k] + m[k+1, j] + p_{i-1} p_k p_j$ for $i \le k < j$

$m[i, i] = 0$ for $i = 1, 2, \ldots, n$

But optimal parenthesization occurs at one value of $k$ among all possible $i \le k < j$.

Check all these and select the best one:

$$m[i, j] = \begin{cases} 0 & \text{if } i = j \\ \min_{i \le k < j} \{ m[i, k] + m[k+1, j] + p_{i-1} p_k p_j \} & \text{if } i < j \end{cases}$$

To keep track of how to construct an optimal solution, we use a table $s$.

$s[i, j]$ = value of $k$ at which $A_i A_{i+1} \cdots A_j$ is split for optimal parenthesization.

Ex:-

Matrix dimensions: $A_1$: 5 × 4, $A_2$: 4 × 6, $A_3$: 6 × 2, $A_4$: 2 × 7

$p_0 = 5,\ p_1 = 4,\ p_2 = 6,\ p_3 = 2,\ p_4 = 7$

Computation sequence: first $m(1,2), m(2,3), m(3,4)$, then $m(1,3), m(2,4)$, and finally $m(1,4)$.

| Chain length | Entries |
|---|---|
| 1 | $m_{11} = 0$, $m_{22} = 0$, $m_{33} = 0$, $m_{44} = 0$ |
| 2 | $m_{12} = 120$, $s_{12} = 1$; $m_{23} = 48$, $s_{23} = 2$; $m_{34} = 84$, $s_{34} = 3$ |
| 3 | $m_{13} = 88$, $s_{13} = 1$; $m_{24} = 104$, $s_{24} = 3$ |
| 4 | $m_{14} = 158$, $s_{14} = 3$ |

Optimal parenthesization: $(A_1 (A_2 A_3)) A_4$

Matrix Chain Multiplication Algorithm

First computes costs for chains of length l = 1.

Then for chains of length l = 2, 3, and so on.

Computes the optimal cost bottom-up.

Input: Array p[0..n] containing matrix dimensions, and n

Result: Minimum-cost table m and split table s

Algorithm Matrix_Chain_Mul(p[], n)
{
    for i := 1 to n do
        m[i, i] := 0;
    for len := 2 to n do // for lengths 2, 3 and so on
    {
        for i := 1 to (n-len+1) do
        {
            j := i+len-1;
            m[i, j] := ∞;
            for k := i to j-1 do
            {
                q := m[i, k] + m[k+1, j] + p[i-1] * p[k] * p[j];
                if q < m[i, j] then
                {
                    m[i, j] := q;
                    s[i, j] := k;
                }
            }
        }
    }
    return m and s
}

Time complexity of the above algorithm is $O(n^3)$.
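The pseudocode above translates almost line for line into a runnable sketch. The function name `matrix_chain_order` and the choice to pad row and column 0 (so that indices stay 1-based as in the slides) are assumptions made for this illustration.

```python
def matrix_chain_order(p):
    """Return (m, s) for dimensions p[0..n]; matrix A_i has size p[i-1] x p[i]."""
    n = len(p) - 1
    # Tables are indexed 1..n; row 0 and column 0 are unused padding.
    m = [[0] * (n + 1) for _ in range(n + 1)]
    s = [[0] * (n + 1) for _ in range(n + 1)]
    for length in range(2, n + 1):            # chain lengths 2, 3, ..., n
        for i in range(1, n - length + 2):
            j = i + length - 1
            m[i][j] = float("inf")
            for k in range(i, j):             # try every split point i <= k < j
                q = m[i][k] + m[k + 1][j] + p[i - 1] * p[k] * p[j]
                if q < m[i][j]:
                    m[i][j], s[i][j] = q, k
    return m, s


if __name__ == "__main__":
    m, s = matrix_chain_order([5, 4, 6, 2, 7])
    print(m[1][4], s[1][4])
```

Run on the dimensions of the worked example, p = (5, 4, 6, 2, 7), it reproduces the m and s entries listed there; the three nested loops are where the cubic running time comes from.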

Constructing Optimal Solution

Our algorithm computes the minimum-cost table m and the split table s.

The optimal solution can be constructed from the split table s.

Each entry $s[i, j] = k$ shows where to split the product $A_i A_{i+1} \cdots A_j$ for the minimum cost.

Example

Copy the table of the previous example and then construct the optimal parenthesization.
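One way to carry out this construction is a short recursion over the split table. The sketch below assumes the split values of the earlier four-matrix example (s12 = 1, s23 = 2, s34 = 3, s13 = 1, s24 = 3, s14 = 3) stored in a dictionary; the name `parenthesize` and the dictionary layout are illustrative choices.

```python
# Split table of the earlier example, keyed by (i, j).
SPLIT = {(1, 2): 1, (2, 3): 2, (3, 4): 3, (1, 3): 1, (2, 4): 3, (1, 4): 3}


def parenthesize(s, i, j):
    """Rebuild the optimal parenthesization of A_i ... A_j from the split table s."""
    if i == j:
        return f"A{i}"
    k = s[(i, j)]  # optimal split: (A_i ... A_k)(A_k+1 ... A_j)
    return f"({parenthesize(s, i, k)} {parenthesize(s, k + 1, j)})"


if __name__ == "__main__":
    print(parenthesize(SPLIT, 1, 4))  # ((A1 (A2 A3)) A4)
```

Each call looks up one split and recurses on the two halves, so the whole reconstruction takes time linear in the number of matrices.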

0/1 knapsack problem

$n$ objects, weights $W_1, W_2, \ldots, W_n$

profits $P_1, P_2, \ldots, P_n$

capacity $M$

maximize $\sum_{1 \le i \le n} P_i x_i$

subject to $\sum_{1 \le i \le n} W_i x_i \le M$

$x_i = 0$ or $1$, $1 \le i \le n$

e.g.

| $i$ | $W_i$ | $P_i$ |
|---|---|---|
| 1 | 10 | 40 |
| 2 | 3 | 20 |
| 3 | 5 | 30 |

$M = 10$

The multistage graph solution

The 0/1 knapsack problem can be described by a multistage graph.

[Figure: multistage graph from S to T for the example. Stage $i$ fixes $x_i$ to 0 or 1; the nodes are the feasible partial assignments 0, 1, 00, 01, 10, 000, 001, 010, 011, 100; each edge carries the profit gained by that choice (0, 40, 20, or 30).]

The dynamic programming approach

The longest path represents the optimal solution:

$x_1 = 0,\ x_2 = 1,\ x_3 = 1$

$\sum P_i x_i = 20 + 30 = 50$

Let $f_i(Q)$ be the value of an optimal solution to objects $1, 2, 3, \ldots, i$ with capacity $Q$.

$f_i(Q) = \max\{ f_{i-1}(Q),\ f_{i-1}(Q - W_i) + P_i \}$

The optimal solution is $f_n(M)$.
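The recurrence for $f_i(Q)$ can be tabulated directly when the weights and the capacity are integers, which is an assumption of this sketch (the slides do not state it). The function name `knapsack` and the trace-back loop that recovers the $x_i$ are illustrative additions.

```python
def knapsack(weights, profits, capacity):
    """Tabulate f[i][q] = best profit using objects 1..i with capacity q."""
    n = len(weights)
    f = [[0] * (capacity + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        w, p = weights[i - 1], profits[i - 1]
        for q in range(capacity + 1):
            f[i][q] = f[i - 1][q]                      # object i left out
            if q >= w:                                 # object i fits
                f[i][q] = max(f[i][q], f[i - 1][q - w] + p)
    # Trace back: object i was taken exactly when it changed the table entry.
    x, q = [0] * n, capacity
    for i in range(n, 0, -1):
        if f[i][q] != f[i - 1][q]:
            x[i - 1] = 1
            q -= weights[i - 1]
    return f[n][capacity], x


if __name__ == "__main__":
    print(knapsack([10, 3, 5], [40, 20, 30], 10))  # (50, [0, 1, 1])
```

For the example instance with M = 10 this returns profit 50 with x = (0, 1, 1), the same solution as the longest path in the multistage graph.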

Optimal binary search trees

e.g. binary search trees for 3, 7, 9, 12:

[Figure: four different binary search trees, (a) to (d), on the keys 3, 7, 9, 12]

$n$ identifiers: $a_1 < a_2 < a_3 < \cdots < a_n$

$P_i$, $1 \le i \le n$: the probability that $a_i$ is searched.

$Q_i$, $0 \le i \le n$: the probability that $x$ is searched, where $a_i < x < a_{i+1}$ ($a_0 = -\infty$, $a_{n+1} = \infty$).

$$\sum_{i=1}^{n} P_i + \sum_{i=0}^{n} Q_i = 1$$

Identifiers: 4, 5, 8, 10, 11, 12, 14

Internal node: successful search, $P_i$

External node: unsuccessful search, $Q_i$

[Figure: binary search tree on the identifiers 4, 5, 8, 10, 11, 12, 14 with external nodes $E_0, \ldots, E_7$]

The expected cost of a binary tree (the level of the root is 1):

$$\sum_{i=1}^{n} P_i \cdot \text{level}(a_i) + \sum_{i=0}^{n} Q_i \cdot (\text{level}(E_i) - 1)$$

The dynamic programming approach

Let $C(i, j)$ denote the cost of an optimal binary search tree containing $a_i, \ldots, a_j$.

The cost of the optimal binary search tree with $a_k$ as its root:

[Figure: root $a_k$; the left subtree holds $a_1 \ldots a_{k-1}$ with probabilities $P_1 \ldots P_{k-1}$, $Q_0 \ldots Q_{k-1}$ and cost $C(1, k-1)$; the right subtree holds $a_{k+1} \ldots a_n$ with probabilities $P_{k+1} \ldots P_n$, $Q_k \ldots Q_n$ and cost $C(k+1, n)$]

$$C(1, n) = \min_{1 \le k \le n} \left\{ P_k + \left[ Q_0 + \sum_{i=1}^{k-1} (P_i + Q_i) + C(1, k-1) \right] + \left[ Q_k + \sum_{i=k+1}^{n} (P_i + Q_i) + C(k+1, n) \right] \right\}$$

General formula

$$C(i, j) = \min_{i \le k \le j} \left\{ P_k + \left[ Q_{i-1} + \sum_{m=i}^{k-1} (P_m + Q_m) + C(i, k-1) \right] + \left[ Q_k + \sum_{m=k+1}^{j} (P_m + Q_m) + C(k+1, j) \right] \right\}$$

$$= \min_{i \le k \le j} \left\{ C(i, k-1) + C(k+1, j) + Q_{i-1} + \sum_{m=i}^{j} (P_m + Q_m) \right\}$$

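Computation relationships of subtrees

e.g. n = 4

[Figure: dependency triangle with $C(1,4)$ at the top, $C(1,3)$ and $C(2,4)$ below it, and $C(1,2)$, $C(2,3)$, $C(3,4)$ at the bottom]

Time complexity: $O(n^3)$

When $j - i = m$, there are $(n - m)$ $C(i, j)$'s to compute.

Each $C(i, j)$ with $j - i = m$ can be computed in $O(m)$ time.

$$O\left(\sum_{1 \le m \le n} m(n - m)\right) = O(n^3)$$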

Optimal Binary Search Tree (OBST) Problem

$n$ identifiers: $a_1 < a_2 < a_3 < \cdots < a_n$

$p(i)$, $1 \le i \le n$: the probability that $a_i$ is searched.

$q(i)$, $0 \le i \le n$: the probability that $x$ is searched, where $a_i < x < a_{i+1}$ ($a_0 = -\infty$, $a_{n+1} = \infty$).

Build a binary search tree (BST) with minimum search cost.

Ex:- $(a_1, a_2, a_3)$ = (do, if, while), $p(i) = q(i) = 1/7$ for all $i$

The number of possible binary search trees $= \frac{1}{n+1}\binom{2n}{n} = \frac{1}{4}\binom{6}{3} = 5$

[Figure: the five possible binary search trees, (a) to (e), on the identifiers do, if, while]

For the above example,

cost( tree a ) = 15 / 7

cost( tree b ) = 13/7

cost( tree c ) = 15/7

cost( tree d ) = 15/7

cost( tree e ) = 15/7

Therefore, tree b is optimal

Ex. 2

p(1)=.5, p(2)=.1, p(3)=.05

q(0)=.15, q(1)=.1, q(2)=.05, q(3)=.05

cost( tree a ) = 2.65

cost( tree b ) = 1.9

cost( tree c ) = 1.5

cost( tree d ) = 2.05

cost( tree e ) = 1.6

Therefore, tree c is optimal

Algorithm search(x)
{
    found := false;
    t := tree;
    while ((t ≠ 0) and not found) do
    {
        if (x = t->data) then found := true;
        else if (x < t->data) then t := t->lchild;
        else t := t->rchild;
    }
    if (not found) then return 0;
    else return 1;
}

Cost of searching a successful identifier = frequency * level

Cost of searching an unsuccessful identifier = frequency * (level - 1)

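Identifiers: do, if, while

Internal node: successful search, $p(i)$

External node: unsuccessful search, $q(i)$

[Figure: binary search tree on do, if, while with external nodes $E_0, E_1, E_2, E_3$]

The expected cost of a binary tree, with $P_i = p(i)$ and $Q_i = q(i)$:

$$\sum_{i=1}^{n} P_i \cdot \text{level}(a_i) + \sum_{i=0}^{n} Q_i \cdot (\text{level}(E_i) - 1)$$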

The dynamic programming approach

Make a decision as to which of the $a_i$'s should be assigned to the root node of the tree.

If we choose $a_k$, then it is clear that the internal nodes for $a_1, a_2, \ldots, a_{k-1}$ as well as the external nodes for the classes $E_0, E_1, \ldots, E_{k-1}$ will lie in the left subtree $l$ of the root. The remaining nodes will be in the right subtree $r$.

[Figure: root $a_k$; the left subtree $l$ holds $a_1 \ldots a_{k-1}$ with $P_1 \ldots P_{k-1}$ and $Q_0 \ldots Q_{k-1}$; the right subtree $r$ holds $a_{k+1} \ldots a_n$ with $P_{k+1} \ldots P_n$ and $Q_k \ldots Q_n$]

$$\text{cost}(l) = \sum_{1 \le i < k} p(i) \cdot \text{level}(a_i) + \sum_{0 \le i < k} q(i) \cdot (\text{level}(E_i) - 1)$$

$$\text{cost}(r) = \sum_{k < i \le n} p(i) \cdot \text{level}(a_i) + \sum_{k \le i \le n} q(i) \cdot (\text{level}(E_i) - 1)$$

In both cases the level is measured by considering the root of the respective subtree to be at level 1.

Using $w(i, j)$ to represent the sum $q(i) + \sum_{l=i+1}^{j} (q(l) + p(l))$, we obtain the following as the expected cost of the above search tree:

$$p(k) + \text{cost}(l) + \text{cost}(r) + w(0, k-1) + w(k, n)$$

If we use $c(i, j)$ to represent the cost of an optimal binary search tree $t_{ij}$ containing $a_{i+1}, \ldots, a_j$ and $E_i, \ldots, E_j$, then cost(l) = $c(0, k-1)$ and cost(r) = $c(k, n)$.

For the tree to be optimal, we must choose $k$ such that $p(k) + c(0, k-1) + c(k, n) + w(0, k-1) + w(k, n)$ is minimum.

Hence, for $c(0, n)$ we obtain

$$c(0, n) = \min_{0 < k \le n} \{ c(0, k-1) + c(k, n) + p(k) + w(0, k-1) + w(k, n) \}$$

We can generalize the above formula for any $c(i, j)$ as shown below:

$$c(i, j) = \min_{i < k \le j} \{ c(i, k-1) + c(k, j) + p(k) + w(i, k-1) + w(k, j) \}$$

Since $p(k) + w(i, k-1) + w(k, j) = w(i, j)$, this simplifies to

$$c(i, j) = \min_{i < k \le j} \{ c(i, k-1) + c(k, j) \} + w(i, j)$$

Therefore, $c(0, n)$ can be solved by first computing all $c(i, j)$ such that $j - i = 1$, next all $c(i, j)$ such that $j - i = 2$, then all $c(i, j)$ with $j - i = 3$, and so on.

During this computation we record the root $r(i, j)$ of each tree $t_{ij}$; an optimal binary search tree can then be constructed from these $r(i, j)$.

$r(i, j)$ is the value of $k$, $i < k \le j$, that minimizes the cost value.

Ex 1: Let n = 4 and (a1, a2, a3, a4) = (do, if, int, while). Let p(1:4) = (3, 3, 1, 1) and q(0:4) = (2, 3, 1, 1, 1). The p's and q's have been multiplied by 16 for convenience. Initially, we have c(i, i) = 0, w(i, i) = q(i), and r(i, i) = 0 for all 0 ≤ i ≤ n, and w(i, j) = p(j) + q(j) + w(i, j-1).

c(i, j) = min { c(i, k-1) + c(k, j) } + w(i, j), with the minimum taken over i < k ≤ j

j - i = 1

w(0,1) = p(1) + q(1) + w(0,0) = 3+3+2 = 8

0 < k ≤ 1 -> k = 1

c(0,1) = min{ c(0,0) + c(1,1) } + w(0,1) = min{0+0} + 8 = 8

r(0,1) = 1

w(1,2) = p(2) + q(2) + w(1,1) = 3+1+3 = 7

1 < k ≤ 2 -> k = 2

c(1,2) = min{ c(1,1) + c(2,2) } + w(1,2) = 7

r(1,2) = 2

w(2,3) = p(3) + q(3) + w(2,2) = 1+1+1 = 3

2 < k ≤ 3 -> k = 3

c(2,3) = min{ c(2,2) + c(3,3) } + w(2,3) = 3

r(2,3) = 3

w(3,4) = p(4) + q(4) + w(3,3) = 1+1+1 = 3

3 < k ≤ 4 -> k = 4

c(3,4) = min{ c(3,3) + c(4,4) } + w(3,4) = 3

r(3,4) = 4

D.E. Knuth Observation:

The optimal k can be found by limiting the search to the range r(i, j-1) ≤ k ≤ r(i+1, j)

j - i = 2

w(0,2) = p(2) + q(2) + w(0,1) = 3+1+8 = 12

r(0,1) ≤ k ≤ r(1,2) -> 1 ≤ k ≤ 2 -> k = 1, 2

c(0,2) = min{ c(0,0)+c(1,2), c(0,1)+c(2,2) } + w(0,2) = min{7, 8} + 12 = 19

r(0,2) = 1

w(1,3) = p(3) + q(3) + w(1,2) = 1+1+7 = 9

r(1,2) ≤ k ≤ r(2,3) -> 2 ≤ k ≤ 3 -> k = 2, 3

c(1,3) = min{ c(1,1)+c(2,3), c(1,2)+c(3,3) } + w(1,3) = min{3, 7} + 9 = 12

r(1,3) = 2

w(2,4) = p(4) + q(4) + w(2,3) = 1+1+3 = 5

r(2,3) ≤ k ≤ r(3,4) -> 3 ≤ k ≤ 4 -> k = 3, 4

c(2,4) = min{ c(2,2)+c(3,4), c(2,3)+c(4,4) } + w(2,4) = min{3, 3} + 5 = 8

r(2,4) = 3

j - i = 3

w(0,3) = p(3) + q(3) + w(0,2) = 1+1+12 = 14

r(0,2) ≤ k ≤ r(1,3) -> 1 ≤ k ≤ 2 -> k = 1, 2

c(0,3) = min{ c(0,0)+c(1,3), c(0,1)+c(2,3) } + w(0,3) = min{12, 11} + 14 = 25

r(0,3) = 2

w(1,4) = p(4) + q(4) + w(1,3) = 1+1+9 = 11

r(1,3) ≤ k ≤ r(2,4) -> 2 ≤ k ≤ 3 -> k = 2, 3

c(1,4) = min{ c(1,1)+c(2,4), c(1,2)+c(3,4) } + w(1,4) = min{8, 10} + 11 = 19

r(1,4) = 2

j - i = 4

w(0,4) = p(4) + q(4) + w(0,3) = 1+1+14 = 16

r(0,3) ≤ k ≤ r(1,4) -> 2 ≤ k ≤ 2 -> k = 2

c(0,4) = min{ c(0,1)+c(2,4) } + w(0,4) = min{16} + 16 = 32

r(0,4) = 2

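Computation of c(0,4), w(0,4), and r(0,4)

| j-i | i = 0 | i = 1 | i = 2 | i = 3 | i = 4 |
|---|---|---|---|---|---|
| 0 | $w_{00}=2$, $c_{00}=0$, $r_{00}=0$ | $w_{11}=3$, $c_{11}=0$, $r_{11}=0$ | $w_{22}=1$, $c_{22}=0$, $r_{22}=0$ | $w_{33}=1$, $c_{33}=0$, $r_{33}=0$ | $w_{44}=1$, $c_{44}=0$, $r_{44}=0$ |
| 1 | $w_{01}=8$, $c_{01}=8$, $r_{01}=1$ | $w_{12}=7$, $c_{12}=7$, $r_{12}=2$ | $w_{23}=3$, $c_{23}=3$, $r_{23}=3$ | $w_{34}=3$, $c_{34}=3$, $r_{34}=4$ | |
| 2 | $w_{02}=12$, $c_{02}=19$, $r_{02}=1$ | $w_{13}=9$, $c_{13}=12$, $r_{13}=2$ | $w_{24}=5$, $c_{24}=8$, $r_{24}=3$ | | |
| 3 | $w_{03}=14$, $c_{03}=25$, $r_{03}=2$ | $w_{14}=11$, $c_{14}=19$, $r_{14}=2$ | | | |
| 4 | $w_{04}=16$, $c_{04}=32$, $r_{04}=2$ | | | | |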

From the table we can see that c(0,4) = 32 is the minimum cost of a binary search tree for (a1, a2, a3, a4).

The root of tree $t_{04}$ is $a_2$.

The left subtree is $t_{01}$ and the right subtree $t_{24}$.

Tree $t_{01}$ has root $a_1$; its left subtree is $t_{00}$ and right subtree $t_{11}$.

Tree $t_{24}$ has root $a_3$; its left subtree is $t_{22}$ and right subtree $t_{34}$.

Thus we can construct the OBST.

[Figure: the resulting tree, with root if, left child do, right child int, and while as the right child of int]

Home Work :

Ex 2: Let n=4, and ( a1,a2,a3,a4 ) = (count, float, int,while).

Let p(1 : 4 ) =( 1/20, 1/5, 1/10, 1/20) and

q(0: 4) = ( 1/5,1/10, 1/5,1/20,1/20 ).

Using the r(i, j)s construct an optimal binary search tree.

Time complexity of the above procedure to evaluate the c's and r's

The above procedure requires computing c(i, j) for (j - i) = 1, 2, ..., n.

When j - i = m, there are n-m+1 c(i, j)'s to compute.

The computation of each of these c(i, j)'s requires finding the minimum of m quantities.

Hence, each such c(i, j) can be computed in time $O(m)$.

The total time for all c(i, j)'s with j - i = m is

$m(n-m+1) = mn - m^2 + m = O(mn - m^2)$

Therefore, the total time to evaluate all the c(i, j)'s and r(i, j)'s is

$$\sum_{1 \le m \le n} (mn - m^2) = O(n^3)$$

We can reduce the time complexity by using the observation of D.E. Knuth.

Observation: The optimal k can be found by limiting the search to the range r(i, j-1) ≤ k ≤ r(i+1, j).

In this case the computing time is $O(n^2)$.

OBST Algorithm

Algorithm OBST(p, q, n)
{
    for i := 0 to n-1 do
    {   // initialize.
        w[i, i] := q[i]; r[i, i] := 0; c[i, i] := 0;
        // Optimal trees with one node.
        w[i, i+1] := p[i+1] + q[i+1] + q[i];
        c[i, i+1] := p[i+1] + q[i+1] + q[i];
        r[i, i+1] := i+1;
    }
    w[n, n] := q[n]; r[n, n] := 0; c[n, n] := 0;
    // Find optimal trees with m nodes.
    for m := 2 to n do
    {
        for i := 0 to n-m do
        {
            j := i + m;
            w[i, j] := p[j] + q[j] + w[i, j-1];
            // Solve using Knuth's result
            x := Find(c, r, i, j);
            c[i, j] := w[i, j] + c[i, x-1] + c[x, j];
            r[i, j] := x;
        }
    }
}

Algorithm Find(c, r, i, j)
{
    min := ∞;
    for k := r[i, j-1] to r[i+1, j] do
    {
        if (c[i, k-1] + c[k, j] < min) then
        {
            min := c[i, k-1] + c[k, j]; y := k;
        }
    }
    return y;
}
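The two procedures above can be combined into one runnable sketch and checked against Ex 1. The names `obst` and `build_tree`, the padding entry `p[0]`, and the nested-tuple tree representation are assumptions made for this illustration.

```python
def obst(p, q):
    """p[1..n] and q[0..n] as in the slides; p[0] is unused padding."""
    n = len(q) - 1
    w = [[0] * (n + 1) for _ in range(n + 1)]
    c = [[0] * (n + 1) for _ in range(n + 1)]
    r = [[0] * (n + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        w[i][i] = q[i]                       # empty trees: c = 0, r = 0
    for i in range(n):                       # optimal trees with one node
        w[i][i + 1] = q[i] + p[i + 1] + q[i + 1]
        c[i][i + 1] = w[i][i + 1]
        r[i][i + 1] = i + 1
    for m in range(2, n + 1):                # optimal trees with m nodes
        for i in range(n - m + 1):
            j = i + m
            w[i][j] = w[i][j - 1] + p[j] + q[j]
            best, root = float("inf"), 0
            # Knuth's range: r(i, j-1) <= k <= r(i+1, j)
            for k in range(r[i][j - 1], r[i + 1][j] + 1):
                cost = c[i][k - 1] + c[k][j]
                if cost < best:
                    best, root = cost, k
            c[i][j] = w[i][j] + best
            r[i][j] = root
    return w, c, r


def build_tree(r, names, i, j):
    """Return the tree t_ij as (root, left, right), or None for an empty tree."""
    if i == j:
        return None
    k = r[i][j]
    return (names[k], build_tree(r, names, i, k - 1), build_tree(r, names, k, j))


if __name__ == "__main__":
    w, c, r = obst([0, 3, 3, 1, 1], [2, 3, 1, 1, 1])
    print(c[0][4], r[0][4])
    print(build_tree(r, [None, "do", "if", "int", "while"], 0, 4))
```

With the data of Ex 1 the sketch reproduces c(0,4) = 32 and r(0,4) = 2, and the rebuilt tree has if at the root, do on the left, and int with right child while on the right.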

Traveling Salesperson Problem (TSP)

Problem:-

You are given a set of n cities.

You are given the distances between the cities.

You start and terminate your tour at your home city.

You must visit each other city exactly once.

Your mission is to determine the shortest tour. OR

minimize the total distance traveled.

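e.g. a directed graph:

[Figure: directed graph on the vertices 1, 2, 3, 4 with the edge costs given in the cost matrix]

Cost matrix:

| | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 1 | 0 | 2 | 10 | 5 |
| 2 | 2 | 0 | 9 | ∞ |
| 3 | 4 | 3 | 0 | 4 |
| 4 | 6 | 8 | 7 | 0 |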

The dynamic programming approach

Let $g(i, S)$ be the length of a shortest path starting at vertex $i$, going through all vertices in $S$ and terminating at vertex 1.

The length of an optimal tour:

$$g(1, V - \{1\}) = \min_{2 \le k \le n} \{ c_{1k} + g(k, V - \{1, k\}) \} \qquad (1)$$

The general form:

$$g(i, S) = \min_{j \in S} \{ c_{ij} + g(j, S - \{j\}) \} \qquad (2)$$

Equation 1 can be solved for $g(1, V - \{1\})$ if we know $g(k, V - \{1, k\})$ for all choices of $k$.

The $g$ values can be obtained by using equation 2.

Clearly, $g(i, \emptyset) = c_{i1}$, $1 \le i \le n$.

Hence we can use equation 2 to obtain $g(i, S)$ for all $S$ of size 1. Then we can obtain $g(i, S)$ for all $S$ of size 2, and so on.

Thus,

g(2, ∅) = c21 = 2, g(3, ∅) = c31 = 4, g(4, ∅) = c41 = 6

We can obtain

g(2, {3}) = c23 + g(3, ∅) = 9+4 = 13

g(2, {4}) = c24 + g(4, ∅) = ∞

g(3, {2}) = c32 + g(2, ∅) = 3+2 = 5

g(3, {4}) = c34 + g(4, ∅) = 4+6 = 10

g(4, {2}) = c42 + g(2, ∅) = 8+2 = 10

g(4, {3}) = c43 + g(3, ∅) = 7+4 = 11

Next, we compute g(i, S) with |S| = 2:

g(2, {3,4}) = min { c23 + g(3, {4}), c24 + g(4, {3}) } = min {19, ∞} = 19

g(3, {2,4}) = min { c32 + g(2, {4}), c34 + g(4, {2}) } = min {∞, 14} = 14

g(4, {2,3}) = min { c42 + g(2, {3}), c43 + g(3, {2}) } = min {21, 12} = 12

Finally,

We obtain

g(1,{2,3,4})=min { c12+ g( 2,{3,4} ),

c13+ g( 3,{2,4} ),

c14+ g(4,{2,3} ) }

=min{ 2+19,10+14,5+12}

=min{21,24,17}

=17.

A tour can be constructed if we retain with each g( i, s )

the value of j that minimizes the tour distance.

Let J( i, s ) be this value, then J( 1, { 2, 3, 4 } ) = 4.

Thus the tour starts from 1 and goes to 4.

The remaining tour can be obtained from g( 4, { 2,3 } ).

So J( 4, { 3, 2 } )=3

Thus the next edge is <4, 3>. The remaining tour is

g(3, { 2 } ). So J(3,{ 2 } )=2

The optimal tour is: (1, 4, 3, 2, 1)

Tour distance is 5+7+3+2 = 17
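The whole computation, including the J values used to rebuild the tour, can be sketched as follows. The cost matrix is the one implied by the g values computed above (with c24 = ∞); the function name `tsp`, the 1-based padded matrix, and the use of `frozenset` keys are assumptions of this illustration.

```python
from itertools import combinations

INF = float("inf")


def tsp(c):
    """c is a 1-based cost matrix (row/column 0 unused); the tour starts and ends at 1."""
    n = len(c) - 1
    others = list(range(2, n + 1))
    g, J = {}, {}
    for i in others:
        g[(i, frozenset())] = c[i][1]            # g(i, empty set) = c_i1
    for size in range(1, n - 1):                 # |S| = 1, 2, ..., n-2
        for subset in combinations(others, size):
            S = frozenset(subset)
            for i in others:
                if i in S:
                    continue
                best, arg = INF, None
                for j in subset:                 # equation 2
                    cost = c[i][j] + g[(j, S - {j})]
                    if cost < best:
                        best, arg = cost, j
                g[(i, S)], J[(i, S)] = best, arg
    full = frozenset(others)
    best, arg = INF, None
    for k in others:                             # equation 1
        cost = c[1][k] + g[(k, full - {k})]
        if cost < best:
            best, arg = cost, k
    # Follow the recorded minimizers to rebuild the tour.
    tour, S, nxt = [1], full, arg
    while nxt is not None:
        tour.append(nxt)
        S = S - {nxt}
        nxt = J.get((nxt, S)) if S else None
    tour.append(1)
    return best, tour


COST = [
    [0, 0, 0, 0, 0],
    [0, 0, 2, 10, 5],
    [0, 2, 0, 9, INF],
    [0, 4, 3, 0, 4],
    [0, 6, 8, 7, 0],
]

if __name__ == "__main__":
    print(tsp(COST))  # (17, [1, 4, 3, 2, 1])
```

The table has one entry per pair (i, S), so the sketch is exponential in n; it is meant only to mirror the hand computation above.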

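Floyd-Warshall Algorithm

All-Pairs Shortest Path Problem

Let G = (V, E) be a directed graph consisting of n vertices.

A weight is associated with each edge.

The problem is to find a shortest path between every pair of nodes.

Ex:- Cost matrix of a directed graph on the vertices $v_1, \ldots, v_5$ (the ∞ entries for missing edges are not shown; each row lists its finite entries in column order):

Row 1: 0, 1, 1, 5

Row 2: 9, 0, 3, 2

Row 3: 0, 4

Row 4: 2, 0, 3

Row 5: 3, 0

[Figure: the directed graph on $v_1, \ldots, v_5$ with edge weights 3, 2, 2, 4, 1, 3, 1, 9, 3, 5]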

Idea of Floyd-Warshall Algorithm

Assume vertices are {1, 2, ..., n}.

Let $d^k(i, j)$ be the length of a shortest path from $i$ to $j$ with intermediate vertices numbered not higher than $k$, where $0 \le k \le n$. Then

$d^0(i, j) = c(i, j)$ (no intermediate vertices at all)

$d^k(i, j) = \min \{ d^{k-1}(i, j),\ d^{k-1}(i, k) + d^{k-1}(k, j) \}$

and $d^n(i, j)$ is the length of a shortest path from $i$ to $j$.

In summary, we need to find $d^n$ with $d^0$ = cost matrix.

General formula

$d^k[i, j] = \min \{ d^{k-1}[i, j],\ d^{k-1}[i, k] + d^{k-1}[k, j] \}$

[Figure: vertices $V_i$, $V_j$, $V_k$. The path of length $d^{k-1}[i, j]$ is a shortest path using intermediate vertices $\{V_1, \ldots, V_{k-1}\}$; comparing it with the path through $V_k$, of length $d^{k-1}[i, k] + d^{k-1}[k, j]$, gives the shortest path using intermediate vertices $\{V_1, \ldots, V_k\}$.]

[Figures: the matrices $d^0, d^1, d^2, d^3, d^4, d^5$ for the example]

Algorithm

Algorithm AllPaths(c, d, n)
// c[1:n, 1:n] cost matrix
// d[i, j] is the length of a shortest path from i to j
{
    for i := 1 to n do
        for j := 1 to n do
            d[i, j] := c[i, j]; // copy c into d
    for k := 1 to n do
        for i := 1 to n do
            for j := 1 to n do
                d[i, j] := min(d[i, j], d[i, k] + d[k, j]);
}

Time complexity is $O(n^3)$.
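A direct transcription of AllPaths into runnable form is sketched below, using 0-based indices. The sample matrix `COST` is an assumption: it uses the finite entries of the five-vertex example, but where the ∞ entries sit is a guess made for illustration, not something the slides confirm.

```python
INF = float("inf")


def all_paths(c):
    """Return the matrix of shortest path lengths for cost matrix c (0-based)."""
    n = len(c)
    d = [row[:] for row in c]                 # copy c into d
    for k in range(n):                        # allow vertex k as an intermediate
        for i in range(n):
            for j in range(n):
                if d[i][k] + d[k][j] < d[i][j]:
                    d[i][j] = d[i][k] + d[k][j]
    return d


# Assumed placement of the infinite entries (hypothetical, see the note above).
COST = [
    [0, 1, INF, 1, 5],
    [9, 0, 3, 2, INF],
    [INF, INF, 0, 4, INF],
    [INF, INF, 2, 0, 3],
    [3, INF, INF, INF, 0],
]

if __name__ == "__main__":
    for row in all_paths(COST):
        print(row)
```

Updating `d` in place is safe because row k and column k do not change during iteration k, which is why the pseudocode needs only one matrix.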

0/1 Knapsack Problem

Let $x_i = 1$ when item $i$ is selected and let $x_i = 0$ when item $i$ is not selected.

maximize $\sum_{i=1}^{n} p_i x_i$

subject to $\sum_{i=1}^{n} w_i x_i \le m$

and $x_i = 0$ or $1$ for all $i$

All profits and weights are positive.

Dynamic programming approach

Let $f_n(m)$ be the value of an optimal solution, then

$f_n(m) = \max \{ f_{n-1}(m),\ f_{n-1}(m - w_n) + p_n \}$

General formula

$f_i(y) = \max \{ f_{i-1}(y),\ f_{i-1}(y - w_i) + p_i \}$

Start with $S^0 = \{(0, 0)\}$.

We can compute $S^{i+1}$ from $S^i$ by first computing

$S_1^i = S^i + (p_{i+1}, w_{i+1})$

OR

$S_1^i = \{ (P, W) \mid (P - p_{i+1}, W - w_{i+1}) \in S^i \}$

Now, $S^{i+1}$ can be computed by merging the pairs in $S^i$ and $S_1^i$ together.

Discarding or Purging Rule:-

If $S^{i+1}$ contains two pairs $(p_j, w_j)$ and $(p_k, w_k)$ with the property that $p_j \le p_k$ and $w_j \ge w_k$, then the pair $(p_j, w_j)$ can be discarded.

The discarding or purging rule is also known as the dominance rule.

When generating the $S^i$'s, we can also purge all pairs $(p, w)$ with $w > m$, as these pairs determine the value of $f_n(x)$ only for $x > m$.

The optimal solution $f_n(m)$ is given by the highest profit pair in $S^n$.

Ex: knapsack instance $n = 3$, $(w_1, w_2, w_3) = (2, 3, 4)$, $(p_1, p_2, p_3) = (1, 2, 5)$, and $m = 6$. For this data we have

$S^0 = \{(0,0)\}$; $S_1^0 = \{(1,2)\}$

$S^1 = \{(0,0), (1,2)\}$; $S_1^1 = \{(2,3), (3,5)\}$

$S^2 = \{(0,0), (1,2), (2,3), (3,5)\}$; $S_1^2 = \{(5,4), (6,6), (7,7), (8,9)\}$

$S^3 = \{(0,0), (1,2), (2,3), (5,4), (6,6)\}$

Note that the pair (3, 5) has been eliminated from $S^3$ as a result of the purging rule: it is dominated by (5, 4).

Also, note that the pairs (7, 7) and (8, 9) have been eliminated from $S^3$, because for these pairs $w > m$, i.e., 7 > 6 and 9 > 6.

Therefore, the optimal solution is (6, 6) (highest profit pair).

If $(p, w)$ is the highest profit pair in $S^n$, a set of 0/1 values for the $x_i$'s can be determined by a search through the $S^i$'s.

We can set $x_n = 0$ if $(p, w) \in S^{n-1}$, else $x_n = 1$. This leaves us to determine how either $(p, w)$ or $(p - p_n, w - w_n)$ was obtained in $S^{n-1}$. This can be done recursively.

Solution vector is $(x_1, x_2, x_3) = (1, 0, 1)$.
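The set-merging procedure, the two purging rules, and the trace-back can be sketched together as follows. The function name `knapsack_pairs` is hypothetical, and the purge is implemented by one particular method (sort by weight, keep a pair only if its profit beats every lighter pair), which is one way to apply the dominance rule rather than the slides' own procedure.

```python
def knapsack_pairs(weights, profits, m):
    """Build S^0..S^n as lists of (profit, weight) pairs, then trace back the x_i."""
    sets = [[(0, 0)]]                                     # S^0
    for w, p in zip(weights, profits):
        prev = sets[-1]
        # Shifted copy of the previous set; pairs with weight > m are purged at once.
        shifted = [(P + p, W + w) for P, W in prev if W + w <= m]
        merged = sorted(set(prev + shifted), key=lambda t: (t[1], -t[0]))
        # Dominance rule: keep a pair only if it beats every lighter pair on profit.
        kept, best = [], -1
        for P, W in merged:
            if P > best:
                kept.append((P, W))
                best = P
        sets.append(kept)
    P, W = best_pair = max(sets[-1])                      # highest profit pair in S^n
    x = [0] * len(weights)
    for i in range(len(weights), 0, -1):
        if (P, W) not in sets[i - 1]:                     # pair needed object i
            x[i - 1] = 1
            P, W = P - profits[i - 1], W - weights[i - 1]
    return sets, best_pair, x


if __name__ == "__main__":
    sets, best_pair, x = knapsack_pairs([2, 3, 4], [1, 2, 5], 6)
    print(sets[3], best_pair, x)
```

On the example instance this yields the same $S^3$, the pair (6, 6), and the solution vector (1, 0, 1). Unlike a table indexed by capacity, the pair sets stay small whenever many combinations are dominated.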

Home work:

1. Given n = 3, weights (w1, w2, w3) = (18, 15, 10), profits (p1, p2, p3) = (25, 24, 15), and the knapsack capacity m = 20. Compute the sets $S^i$ containing the pairs (Pi, Wi).

2. Consider the knapsack instance n = 6, m = 165, (p1, p2, ..., p6) = (w1, w2, ..., w6) = (100, 50, 20, 10, 7, 3). Generate the $S^i$ sets containing the pairs (pi, wi) and thus find the optimal solution.

Reliability Design

The problem is to design a system that is composed of several devices connected in series.

[Figure: devices $D_1, D_2, D_3, \ldots, D_n$; n devices connected in series]

Let $r_i$ be the reliability of device $D_i$ (that is, $r_i$ is the probability that device $i$ will function properly).

Then, the reliability of the entire system is $\prod r_i$.

Even if the individual devices are very reliable, the reliability of the entire system may not be very good.

Ex. If $n = 10$ and $r_i = 0.99$, $1 \le i \le 10$, then $\prod r_i = 0.904$.

Hence, it is desirable to duplicate devices.

Multiple copies of the same device type are connected in parallel as shown below.

[Figure: stages in series, each stage holding several copies of one device in parallel (for example three copies of $D_1$, two of $D_2$, four of $D_3$, three of $D_n$); multiple devices connected in parallel in each stage]

If stage $i$ contains $m_i$ copies of device $D_i$, then the probability that all $m_i$ malfunction is $(1 - r_i)^{m_i}$.

Hence the reliability of stage $i$ becomes $1 - (1 - r_i)^{m_i}$.

Ex:- If $r_i = 0.99$ and $m_i = 2$, the stage reliability becomes 0.9999.

Let $\phi_i(m_i)$ be the reliability of stage $i$, $i \le n$.

Then, the reliability of the system of $n$ stages is $\prod_{1 \le i \le n} \phi_i(m_i)$.

Our problem is to use device duplication to maximize reliability. This maximization is to be carried out under a cost constraint.

Let $c_i$ be the cost of each device $i$ and $c$ be the maximum allowable cost of the system being designed.

We wish to solve the following maximization problem:

maximize $\prod_{1 \le i \le n} \phi_i(m_i)$

subject to $\sum_{1 \le i \le n} c_i m_i \le c$

$m_i \ge 1$ and integer, $1 \le i \le n$

Dynamic programming approach

Since each $c_i > 0$, each $m_i$ must be in the range $1 \le m_i \le u_i$, where

$$u_i = \left\lfloor \left( c + c_i - \sum_{1 \le j \le n} c_j \right) / c_i \right\rfloor$$

The upper bound $u_i$ follows from the observation that every $m_j \ge 1$.

An optimal solution $m_1, m_2, \ldots, m_n$ is the result of a sequence of decisions, one decision for each $m_i$.

Let $f_n(c)$ be the reliability of an optimal solution, then

$$f_n(c) = \max_{1 \le m_n \le u_n} \{ \phi_n(m_n) \, f_{n-1}(c - c_n m_n) \}$$

General formula

$$f_i(x) = \max_{1 \le m_i \le u_i} \{ \phi_i(m_i) \, f_{i-1}(x - c_i m_i) \}$$

Clearly, $f_0(x) = 1$ for all $x$, $0 \le x \le c$.

Let $S^i$ consist of tuples of the form $(f, x)$, where $f = f_i(x)$.

Start with $S^0 = \{(1, 0)\}$.

We obtain $S^{i+1}$ from $S^i$ by trying all possible values for $m_{i+1}$ and combining the resulting tuples together.

Purging rule:- if $S^{i+1}$ contains two pairs $(f_j, x_j)$ and $(f_k, x_k)$ with the property that $f_j \le f_k$ and $x_j \ge x_k$, then we can purge $(f_j, x_j)$.

When generating the $S^i$'s, we can also purge all pairs $(f, x)$ with $c - x < \sum_{i+1 \le k \le n} c_k$, as such pairs will not leave sufficient funds to complete the system.

The optimal solution $f_n(c)$ is given by the highest reliability pair.

Ex: $(c_1, c_2, c_3) = (30, 15, 20)$, $c = 105$, $r_1 = 0.9$, $r_2 = 0.8$, $r_3 = 0.5$.

Therefore, $u_1 = \lfloor (105 + 30 - 65)/30 \rfloor = \lfloor 2.33 \rfloor = 2$

$u_2 = \lfloor (105 + 15 - 65)/15 \rfloor = \lfloor 3.66 \rfloor = 3$

$u_3 = \lfloor (105 + 20 - 65)/20 \rfloor = 3$

Here $S_j^i$ denotes the tuples obtained from $S^i$ by setting $m_{i+1} = j$.

$S^0 = \{(1, 0)\}$

$S_1^0 = \{(0.9, 30)\}$, $S_2^0 = \{(0.99, 60)\}$; merge $S_1^0$ and $S_2^0$ to get $S^1$.

$S^1 = \{(0.9, 30), (0.99, 60)\}$

$S_1^1 = \{(0.72, 45), (0.792, 75)\}$, $S_2^1 = \{(0.864, 60), (0.9504, 90)\}$, $S_3^1 = \{(0.8928, 75), (0.98208, 105)\}$; merge $S_1^1$, $S_2^1$, and $S_3^1$ to get $S^2$.

$S^2 = \{(0.72, 45), (0.864, 60), (0.8928, 75)\}$

Note 1: Tuple (0.792, 75) is eliminated by the purging rule.

Note 2: Tuples (0.9504, 90) and (0.98208, 105) will not leave sufficient funds to complete the system.

$S_1^2 = \{(0.36, 65), (0.432, 80), (0.4464, 95)\}$

$S_2^2 = \{(0.54, 85), (0.648, 100), (0.6696, 115)\}$

$S_3^2 = \{(0.63, 105), (0.756, 120), (0.7812, 135)\}$

$S^3 = \{(0.36, 65), (0.432, 80), (0.54, 85), (0.648, 100)\}$

Tuples (0.4464, 95) and (0.63, 105) are eliminated by the purging rule; for (0.6696, 115), (0.756, 120), and (0.7812, 135) the total cost is > 105.

The best design has a reliability of 0.648 and a cost of 100.

Tracing back through the $S^i$'s, we can determine that $m_1 = 1$, $m_2 = 2$, $m_3 = 2$.
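The example can be replayed with a short sketch of the tuple-set method. The function name `reliability_design` is hypothetical, and instead of tracing back through the sets, each tuple here carries its list of copy counts along with it, which is a simplification chosen for this illustration.

```python
def reliability_design(costs, rel, budget):
    """Return (reliability, cost, copies) of the best design within the budget."""
    n, total = len(costs), sum(costs)
    sets = [[(1.0, 0, ())]]                       # S^0 = {(1, 0)}
    for i in range(n):
        ci, ri = costs[i], rel[i]
        ui = (budget + ci - total) // ci          # upper bound on copies of device i+1
        remaining = sum(costs[i + 1:])            # one copy of every later device
        cand = []
        for mi in range(1, ui + 1):
            phi = 1 - (1 - ri) ** mi              # stage reliability with mi copies
            for f, x, ms in sets[-1]:
                nx = x + ci * mi
                if budget - nx >= remaining:      # enough funds left to finish
                    cand.append((f * phi, nx, ms + (mi,)))
        # Dominance rule: sort by cost and keep only strictly better reliabilities.
        cand.sort(key=lambda t: (t[1], -t[0]))
        kept, best = [], 0.0
        for t in cand:
            if t[0] > best:
                kept.append(t)
                best = t[0]
        sets.append(kept)
    return max(sets[-1])                          # highest reliability tuple


if __name__ == "__main__":
    print(reliability_design([30, 15, 20], [0.9, 0.8, 0.5], 105))
```

For the example data this selects the design with cost 100 and copy counts (1, 2, 2); the reliability comes out as a floating-point value close to 0.648.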


- Dynamic Programming - Formalized by Richard Bellman - "Programming" Relates To Use of Tables, Rather Than Computer ProgrammingDocument23 pagesDynamic Programming - Formalized by Richard Bellman - "Programming" Relates To Use of Tables, Rather Than Computer ProgrammingHana HoubaNo ratings yet

- Daa 4 Unit DetailsDocument14 pagesDaa 4 Unit DetailsSathyendra Kumar VNo ratings yet

- HW 1Document6 pagesHW 1Morokot AngelaNo ratings yet

- Dynamic Programming1Document38 pagesDynamic Programming1Mohammad Bilal MirzaNo ratings yet

- 7-5 Solution PDFDocument3 pages7-5 Solution PDFDaniel Bastos MoraesNo ratings yet

- SE-Comps SEM4 AOA-CBCGS DEC18 SOLUTIONDocument15 pagesSE-Comps SEM4 AOA-CBCGS DEC18 SOLUTIONSagar SaklaniNo ratings yet

- CS-2012 (Daa) - CS End Nov 2023Document21 pagesCS-2012 (Daa) - CS End Nov 2023bojat18440No ratings yet

- Design and Analysis of Algorithm: Greedy Method and Spanning TreesDocument31 pagesDesign and Analysis of Algorithm: Greedy Method and Spanning TreesSai Chaitanya BalliNo ratings yet

- Module 3Document19 pagesModule 3cbgopinathNo ratings yet
