Necessity of High Performance Parallel Computing

Uploaded by mtechsurendra123


Introduction to parallel processing

Chapter 1 from Culler & Singh

Winter 2007

Winter 2007 ENGR9861 R. Venkatesan

High-Performance Computer Architecture


Introduction

What is Parallel Architecture?

Why Parallel Architecture?

Evolution and Convergence of Parallel Architectures

Fundamental Design Issues


What is Parallel Architecture?

A parallel computer is a collection of processing elements that cooperate to solve large problems fast

Some broad issues:

Resource Allocation:

how large a collection?

how powerful are the elements?

how much memory?

Data access, Communication and Synchronization

how do the elements cooperate and communicate?

how are data transmitted between processors?

what are the abstractions and primitives for cooperation?

Performance and Scalability

how does it all translate into performance?

how does it scale?


Why Study Parallel Architecture?

Role of a computer architect:

To design and engineer the various levels of a computer system to maximize performance and programmability within limits of technology and cost.

Parallelism:

Provides alternative to faster clock for performance

Applies at all levels of system design

Is a fascinating perspective from which to view architecture

Is increasingly central in information processing


Why Study it Today?

History: diverse and innovative organizational structures, often tied to novel programming models

Rapidly maturing under strong technological constraints

The killer micro is ubiquitous

Laptops and supercomputers are fundamentally similar!

Technological trends cause diverse approaches to converge

Technological trends make parallel computing inevitable

In the mainstream

Need to understand fundamental principles and design tradeoffs, not just taxonomies

Naming, Ordering, Replication, Communication performance


Application Trends

Demand for cycles fuels advances in hardware, and vice-versa

Cycle drives exponential increase in microprocessor performance

Drives parallel architecture harder: most demanding applications

Range of performance demands

Need range of system performance with progressively increasing cost

Platform pyramid

Goal of applications in using parallel machines: Speedup

Speedup (p processors) = Performance (p processors) / Performance (1 processor)

For a fixed problem size (input data set), performance = 1/time

Speedup fixed problem (p processors) = Time (1 processor) / Time (p processors)
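The fixed-problem-size speedup definition can be sketched in a few lines of Python. This is only an illustration: the function names and the timing values in the usage note are hypothetical, and the efficiency helper (speedup divided by p) is a common companion metric that is not on the slide.

```python
def speedup(time_1: float, time_p: float) -> float:
    """Fixed-problem-size speedup: Time(1 processor) / Time(p processors)."""
    if time_1 <= 0 or time_p <= 0:
        raise ValueError("execution times must be positive")
    return time_1 / time_p


def efficiency(time_1: float, time_p: float, p: int) -> float:
    """Speedup per processor (not on the slide; added for illustration)."""
    if p < 1:
        raise ValueError("p must be at least 1")
    return speedup(time_1, time_p) / p
```

For example, a run that takes 120 s on one processor and 10 s on 16 processors (made-up numbers) gives `speedup(120.0, 10.0) == 12.0` and `efficiency(120.0, 10.0, 16) == 0.75`.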


Scientific Computing Demand


Engineering Computing Demand

Large parallel machines a mainstay in many industries, for example,

Petroleum (reservoir analysis)

Automotive (crash simulation, drag analysis, combustion efficiency),

Aeronautics (airflow analysis, engine efficiency, structural mechanics, electromagnetism),

Computer-aided design

Pharmaceuticals (molecular modeling)

Visualization

in all of the above

entertainment (films like Shrek 2, The Incredibles)

architecture (walk-throughs and rendering)

Financial modeling (yield and derivative analysis).


Applications: Speech and Image Processing

[Figure: processing requirements of speech and image applications, 1980 to 1995, on a scale from 1 MIPS to 10 GIPS. Labelled points: sub-band speech coding; 200-word isolated speech recognition; speaker verification; CELP speech coding; ISDN-CD stereo receiver; telephone number recognition; 1,000-word continuous speech recognition; 5,000-word continuous speech recognition; CIF video; HDTV receiver]

Also CAD, Databases, . . .

100 processors gets you 10 years!


Learning Curve for Parallel Applications

AMBER molecular dynamics simulation program

Starting point was vector code for Cray-1

145 MFLOP on Cray90, 406 for final version on 128-processor Paragon, 891 on 128-processor Cray T3D


Commercial Computing

Also relies on parallelism for high end

Scale not so large, but use much more wide-spread

Computational power determines scale of business that can be handled

Databases, online-transaction processing, decision support, data mining, data warehousing ...

TPC benchmarks (TPC-C order entry, TPC-D decision support)

Explicit scaling criteria provided

Size of enterprise scales with size of system

Problem size no longer fixed as p increases, so throughput is used as a performance measure (transactions per minute or tpm)


Summary of Application Trends

Transition to parallel computing has occurred for scientific and engineering computing

In rapid progress in commercial computing

Database and transactions as well as financial

Usually smaller-scale, but large-scale systems also used

Desktop also uses multithreaded programs, which are a lot like parallel programs

Demand for improving throughput on sequential workloads

Greatest use of small-scale multiprocessors

Solid application demand exists and will increase


Technology Trends

[Figure: relative performance (log scale, 0.1 to 100) versus year, 1965 to 1995, for supercomputers, mainframes, minicomputers, and microprocessors]

The natural building block for multiprocessors is now also about the fastest!


General Technology Trends

Microprocessor performance increases 50% - 100% per year

Transistor count doubles every 3 years

DRAM size quadruples every 3 years

Huge investment per generation is carried by huge commodity market

Not that single-processor performance is plateauing, but that parallelism is a natural way to improve it.

[Figure: integer and floating-point performance of microprocessor-based systems, 1987 to 1992 (vertical axis 0 to 180): Sun 4/260, MIPS M/120, MIPS M2000, IBM RS6000/540, HP 9000/750, DEC Alpha]
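The growth rates quoted on this slide mix two conventions: "X% per year" and "multiplies by k every n years". A small sketch for converting between them is given below; the helper names are hypothetical and the code only restates compound-growth arithmetic.

```python
def annual_rate_from_multiple(multiple: float, years: float) -> float:
    """Annual growth rate implied by growing `multiple`-fold every `years` years."""
    return multiple ** (1.0 / years) - 1.0


def growth_over(annual_rate: float, years: float) -> float:
    """Total growth factor after `years` years at a compound `annual_rate`."""
    return (1.0 + annual_rate) ** years


if __name__ == "__main__":
    # Doubling every 3 years is about 26% per year.
    print(f"{annual_rate_from_multiple(2, 3):.1%}")
    # Quadrupling every 3 years is about 59% per year.
    print(f"{annual_rate_from_multiple(4, 3):.1%}")
    # 50% per year compounds to roughly 58x over a decade.
    print(f"{growth_over(0.50, 10):.1f}x")
```

Seen this way, the quoted microprocessor performance growth (50% to 100% per year) outpaces the quoted transistor-count growth (doubling every 3 years) when both are expressed per year.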


Technology: A Closer Look

Basic advance is decreasing feature size (λ)

Circuits become either faster or lower in power

Die size is growing too

Clock rate improves roughly proportional to improvement in λ

Number of transistors improves like λ² (or faster)

Performance > 100x per decade; clock rate 10x, rest transistor count

How to use more transistors?

Parallelism in processing

multiple operations per cycle reduces CPI

Locality in data access

avoids latency and reduces CPI

also improves processor utilization

Both need resources, so tradeoff

Fundamental issue is resource distribution, as in uniprocessors

[Figure: processor (Proc) with cache ($) attached to an interconnect]
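The two ways of spending transistors, parallelism in processing and locality in data access, both show up as a lower CPI. A minimal sketch using the standard textbook model (execution time = instructions x CPI / clock rate, with memory stalls added to a base CPI) follows. The model is a simplification that is not spelled out on the slide, and every parameter value in the usage note is a hypothetical illustration.

```python
def effective_cpi(base_cpi: float,
                  mem_refs_per_instr: float,
                  miss_rate: float,
                  miss_penalty_cycles: float) -> float:
    """Base CPI plus average memory-stall cycles per instruction."""
    return base_cpi + mem_refs_per_instr * miss_rate * miss_penalty_cycles


def execution_time(instr_count: float, cpi: float, clock_hz: float) -> float:
    """Execution time in seconds: instructions x CPI / clock rate."""
    return instr_count * cpi / clock_hz
```

With a base CPI of 1.0, 0.3 memory references per instruction, a 5% miss rate, and a 40-cycle miss penalty, the effective CPI is about 1.6. Issuing two operations per cycle (base CPI 0.5) brings it to about 1.1, while a larger cache that cuts the miss rate to 2% brings it to about 1.24. Both options cost chip area, which is the tradeoff the slide points to.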


Clock Frequency Growth Rate

[Figure: clock rate (MHz, log scale from 0.1 to 1,000) versus year, 1970 to 2005, for i4004, i8008, i8080, i8086, i80286, i80386, Pentium100, and R10000]

30% per year


Transistor Count Growth Rate

[Figure: transistors per chip (log scale from 1,000 to 100,000,000) versus year, 1970 to 2005, for i4004, i8008, i8080, i8086, i80286, i80386, R2000, R3000, Pentium, and R10000]

100 million transistors on chip by early 2000s A.D.

Transistor count grows much faster than clock rate

- 40% per year, order of magnitude more contribution in 2 decades


Storage Performance

Divergence between memory capacity and speed more pronounced

Capacity increased by 1000x from 1980-2005, speed only 5x

4 Gigabit DRAM now, but gap with processor speed much greater

Larger memories are slower, while processors get faster

Need to transfer more data in parallel

Need deeper cache hierarchies

How to organize caches?

Parallelism increases effective size of each level of hierarchy, without increasing access time

Parallelism and locality within memory systems too

New designs fetch many bits within memory chip; follow with fast pipelined transfer across narrower interface

Buffer caches most recently accessed data

Disks too: Parallel disks plus caching


Architectural Trends

Architecture translates technology's gifts to performance and capability

Resolves the tradeoff between parallelism and locality

Current microprocessor: 1/3 compute, 1/3 cache, 1/3 off-chip connect

Tradeoffs may change with scale and technology advances

Understanding microprocessor architectural trends

Helps build intuition about design issues of parallel machines

Shows fundamental role of parallelism even in sequential computers

Four generations of architectural history: tube, transistor, IC, VLSI

Here focus only on VLSI generation

Greatest delineation in VLSI has been in type of parallelism exploited


Architectural Trends in VLSI

Greatest trend in VLSI generation is increase in parallelism

Up to 1985: bit-level parallelism: 4-bit -> 8-bit -> 16-bit

slows after 32-bit

adoption of 64-bit now under way, 128-bit far (not performance issue)

great inflection point when 32-bit micro and cache fit on a chip

Mid 80s to mid 90s: instruction-level parallelism

pipelining and simple instruction sets, + compiler advances (RISC)

on-chip caches and functional units => superscalar execution

greater sophistication: out-of-order execution, speculation, prediction

to deal with control transfer and latency problems

2000s: Thread-level parallelism


ILP Ideal Potential

[Figure, two panels: fraction of total cycles (%) versus number of instructions issued (0 to 6+); speedup versus instructions issued per cycle (0 to 15)]

Infinite resources and fetch bandwidth, perfect branch prediction and renaming

real caches and non-zero miss latencies


Raw Uniprocessor Performance: LINPACK

[Figure: LINPACK performance (MFLOPS, log scale from 1 to 10,000) versus year, 1975 to 2000, for CRAY and microprocessor systems on 100 x 100 and 1,000 x 1,000 matrices. CRAY systems: CRAY 1s, Xmp/14se, Xmp/416, Ymp, C90, T94. Microprocessor systems: Sun 4/260, MIPS M/120, MIPS M/2000, IBM RS6000/540, HP 9000/750, DEC Alpha AXP, HP9000/735, DEC Alpha, MIPS R4400, IBM Power2/990, DEC 8200]


Summary: Why Parallel Architecture?

Increasingly attractive

Economics, technology, architecture, application demand

Increasingly central and mainstream

Parallelism exploited at many levels

Instruction-level parallelism

Multiprocessor servers

Large-scale multiprocessors (MPPs)

Focus of this class: multiprocessor level of parallelism

Same story from memory system perspective

Increase bandwidth, reduce average latency with many local memories

Wide range of parallel architectures make sense

Different cost, performance and scalability


History of parallel architectures

Historically, parallel architectures tied to programming models

Divergent architectures, with no predictable pattern of growth.

Uncertainty of direction paralyzed parallel software development!

[Figure: application software and system software layered over divergent architectures: SIMD, message passing, shared memory, dataflow, systolic arrays]


Today's architectural viewpoint

Architectural enhancements to support communication and cooperation

OLD: Instruction Set Architecture

NEW: Communication Architecture

Defines

Critical abstractions, boundaries, and primitives (interfaces)

Organizational structures that implement interfaces (h/w or s/w)

Compilers, libraries and OS are important bridges today


Modern Layered Framework

[Figure: layers of the framework, top to bottom]

Parallel applications: CAD, Database, Scientific modeling

Programming models: Multiprogramming, Shared address, Message passing, Data parallel

Communication abstraction (user/system boundary)

Compilation or library; Operating systems support

Hardware/software boundary

Communication hardware

Physical communication medium


Programming Model

What programmer uses in coding applications

Specifies communication and synchronization

Examples:

Multiprogramming: no communication or synch. at program level

Shared address space: like bulletin board

Message passing: like letters or phone calls, explicit point to point

Data parallel: more regimented, global actions on data

Implemented with shared address space or message passing


Communication Abstraction

User level communication primitives provided

Realizes the programming model

Mapping exists between language primitives of programming model and these primitives

Supported directly by HW, or via OS, or via user SW

Lot of debate about what to support in SW and gap between layers

Today:

HW/SW interface tends to be flat, i.e. complexity roughly uniform

Compilers and software play important roles as bridges today

Technology trends exert strong influence

Result is convergence in organizational structure

Relatively simple, general purpose communication primitives


Communication Architecture

Comm. Arch. = User/System Interface + Implementation

User/System Interface:

Comm. primitives exposed to user-level by HW and system-level SW

Implementation:

Organizational structures that implement the primitives: HW or OS

How optimized are they? How integrated into processing node?

Structure of network

Goals:

Performance

Broad applicability

Programmability

Scalability

Low Cost


Evolution of Architectural Models

Historically machines tailored to programming models

Prog. model, comm. abstraction, and machine organization lumped together as the architecture

Evolution helps understand convergence

Identify core concepts

Shared Address Space

Message Passing

Data Parallel

Others:

Dataflow

Systolic Arrays

Examine programming model, motivation, intended applications, and contributions to convergence


Shared Address Space Architectures

Any processor can directly reference any memory location

Communication occurs implicitly as result of loads and stores

Convenient:

Location transparency

Similar programming model to time-sharing on uniprocessors

Except processes run on different processors

Good throughput on multiprogrammed workloads

Naturally provided on wide range of platforms

History dates at least to precursors of mainframes in early 60s

Wide range of scale: few to hundreds of processors

Popularly known as shared memory machines or model

Ambiguous: memory may be physically distributed among processors


Shared Address Space Model

Process: virtual address space plus one or more threads of control

Portions of address spaces of processes are shared

Writes to shared address visible to other threads (in other processes too)

Natural extension of uniprocessor model: conventional memory operations for comm.; special atomic operations for synchronization

OS uses shared memory to coordinate processes

[Figure: virtual address spaces for a collection of processes (P0, P1, P2, ..., Pn) communicating via shared addresses. The shared portion of each address space maps to common physical addresses in the machine physical address space, accessed by ordinary loads and stores; each process also has a private portion (P0 private, P1 private, P2 private, ..., Pn private)]
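The shared address space model, ordinary memory operations for communication plus a special operation for synchronization, can be sketched with Python threads. This is only an analogy under stated assumptions: threads in one process stand in for processors, a dictionary stands in for the shared portion of the address space, and `threading.Lock` stands in for a hardware atomic operation. The function name is hypothetical.

```python
import threading


def run_shared_counter(num_threads: int, increments: int) -> int:
    """Each thread updates one shared location; a lock makes the update atomic."""
    shared = {"count": 0}      # location visible to every thread (shared portion)
    lock = threading.Lock()    # stand-in for a special atomic operation

    def worker() -> None:
        for _ in range(increments):
            with lock:                  # synchronization
                shared["count"] += 1    # communication via ordinary load + store

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared["count"]
```

Calling `run_shared_counter(4, 1000)` returns 4000: every write to the shared location is visible to the other threads, and no explicit message is ever sent.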


Communication Hardware in SAS arch.

Also natural extension of uniprocessor

Already have processor, one or more memory modules and I/O controllers connected by hardware interconnect of some sort

Memory capacity increased by adding modules, I/O by controllers

Add processors for processing!

For higher-throughput multiprogramming, or parallel programs

[Figure: processors, memory modules (Mem), and I/O controllers (I/O ctrl) with I/O devices, all connected by an interconnect]


History of SAS architectures

Mainframe approach

Motivated by multiprogramming

Extends crossbar used for mem bw and I/O

Originally processor cost limited to small scale

later, cost of crossbar

Bandwidth scales with p

High incremental cost; use multistage instead

Minicomputer approach

Almost all microprocessor systems have bus

Motivated by multiprogramming, TP

Used heavily for parallel computing

Called symmetric multiprocessor (SMP)

Latency larger than for uniprocessor

Bus is bandwidth bottleneck

caching is key: coherence problem

Low incremental cost

[Figure: crossbar organization (processors and I/O controllers connected to memory modules through a crossbar) and bus organization (processors with caches, memory modules, and I/O controllers on a shared bus)]


Example of SAS: Intel Pentium Pro Quad

[Figure: Intel Pentium Pro Quad. P-Pro modules, each with CPU, interrupt controller, 256-KB L2 $, and bus interface, on the P-Pro bus (64-bit data, 36-bit address, 66 MHz); memory controller and MIU to 1-, 2-, or 4-way interleaved DRAM; two PCI bridges to PCI buses with PCI I/O cards]

All coherence and multiprocessing glue in processor module

Highly integrated, targeted at high volume

Low latency and bandwidth


Example of SAS: SUN Enterprise

[Figure: Sun Enterprise. CPU/mem cards (two processors, each with $ and L2 $, plus memory controller) and I/O cards (SBUS slots, 2 FiberChannel, 100bT, SCSI), each attached through a bus interface/switch to the Gigaplane bus (256 data, 41 address, 83 MHz)]

16 cards of either type: processors + memory, or I/O

All memory accessed over bus, so symmetric

Higher bandwidth, higher latency bus
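The bus parameters quoted for the two example machines allow a rough peak-bandwidth comparison. The sketch below assumes one data transfer per bus clock and ignores arbitration, address cycles, and protocol overhead, so it gives an upper bound rather than a measured figure; the function name is hypothetical.

```python
def peak_bus_bandwidth_mb_per_s(data_width_bits: int, clock_mhz: float) -> float:
    """Upper bound on bus bandwidth in MB/s, assuming one transfer per clock."""
    return (data_width_bits / 8) * clock_mhz


if __name__ == "__main__":
    # P-Pro bus: 64-bit data at 66 MHz
    print(peak_bus_bandwidth_mb_per_s(64, 66))    # 528.0
    # Gigaplane bus: 256-bit data at 83 MHz
    print(peak_bus_bandwidth_mb_per_s(256, 83))   # 2656.0
```

Under these assumptions the Gigaplane has roughly five times the peak bandwidth of the P-Pro bus, consistent with the "higher bandwidth" remark on this slide.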


Scaling Up SAS Architecture

Problem is interconnect: cost (crossbar) or bandwidth (bus)

Dance-hall: bandwidth still scalable, but lower cost than crossbar

latencies to memory uniform, but uniformly large

Distributed memory or non-uniform memory access (NUMA)

Construct shared address space out of simple message transactions across a general-purpose network (e.g. read-request, read-response)

Caching shared (particularly nonlocal) data?

[Figure: dance-hall organization (processors with caches on one side of the network, memory modules on the other) and distributed-memory organization (a memory module attached to each processor node)]
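How a shared address space can be built from read-request and read-response messages is sketched below as a toy simulation. Everything here is an illustrative assumption rather than a description of a real machine: the class and method names are hypothetical, global addresses are split into (home node, offset) by simple division, and the "network" is a direct function call that only counts messages.

```python
WORDS_PER_NODE = 1024  # hypothetical size of each node's local memory


class DistributedSharedMemory:
    """Toy NUMA model: one global address space spread over per-node memories."""

    def __init__(self, num_nodes: int) -> None:
        self.memories = [[0] * WORDS_PER_NODE for _ in range(num_nodes)]
        self.network_messages = 0

    def home_of(self, addr: int) -> tuple:
        """Map a global address to (home node, offset within that node)."""
        return divmod(addr, WORDS_PER_NODE)

    def store_local(self, node: int, offset: int, value: int) -> None:
        self.memories[node][offset] = value

    def _serve_read(self, request: tuple) -> tuple:
        """Home node turns a read-request into a read-response."""
        kind, requester, home, offset = request
        assert kind == "read-request"
        return ("read-response", home, requester, self.memories[home][offset])

    def load(self, requester: int, addr: int) -> int:
        home, offset = self.home_of(addr)
        if home == requester:                      # local reference: no network
            return self.memories[home][offset]
        request = ("read-request", requester, home, offset)
        response = self._serve_read(request)       # stands in for the network
        self.network_messages += 2                 # one request, one response
        return response[3]
```

A load by the home node costs no messages, while the same load from any other node costs a request and a response. That difference in cost is the non-uniformity in NUMA, and it is why caching nonlocal data becomes an important question.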


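Example scaled-up SAS arch. : Cray T3E

Scale up to 1024 processors, 480MB/s links

Memory controller generates comm. request for nonlocal references

No hardware mechanism for coherence (SGI Origin etc. provide this)

[Figure: Cray T3E node with processor (P), cache ($), memory (Mem), and memory controller with network interface (Mem ctrl and NI), attached to a switch with X, Y, and Z links; external I/O]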


Message Passing Architectures

Complete computer as building block, including I/O

Communication via explicit I/O operations

Programming model: directly access only private address space (local memory), comm. via explicit messages (send/receive)

High-level block diagram similar to distributed-memory SAS

But comm. integrated at IO level, needn't be into memory system

Like networks of workstations (clusters), but tighter integration

Easier to build than scalable SAS

Programming model more removed from basic hardware operations

Library or OS intervention


Message-Passing Abstraction

Send specifies buffer to be transmitted and receiving process

Recv specifies sending process and application storage to receive into

Memory to memory copy, but need to name processes

Optional tag on send and matching rule on receive

User process names local data and entities in process/tag space too

In simplest form, the send/recv match achieves pairwise synch event

Other variants too

Many overheads: copying, buffer management, protection

[Figure: process P executes Send X, Q, t from address X in its local process address space; process Q executes Receive Y, P, t into address Y in its local process address space; the two operations match]
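The send/receive matching rule (sending process plus tag) can be sketched with a small in-process mailbox. This is an illustration under stated assumptions: Python threads stand in for processes P and Q, the `Mailbox` class and `send` helper are hypothetical names, and the send is buffered at the destination rather than synchronous, which is one of the "other variants" and not the simplest form described above.

```python
import threading


class Mailbox:
    """Per-process buffer of incoming messages, matched by (source, tag)."""

    def __init__(self) -> None:
        self._cond = threading.Condition()
        self._messages = []  # list of (source, tag, payload)

    def deliver(self, src: str, tag: int, data: list) -> None:
        with self._cond:
            # Copy the buffer: a memory-to-memory copy, not a shared reference.
            self._messages.append((src, tag, list(data)))
            self._cond.notify_all()

    def receive(self, src: str, tag: int) -> list:
        """Block until a message from `src` carrying `tag` is available."""
        with self._cond:
            while True:
                for i, (s, t, payload) in enumerate(self._messages):
                    if s == src and t == tag:
                        del self._messages[i]
                        return payload
                self._cond.wait()


def send(mailboxes: dict, src: str, dest: str, tag: int, data: list) -> None:
    """Send names the buffer to transmit and the receiving process."""
    mailboxes[dest].deliver(src, tag, data)


def demo() -> list:
    mailboxes = {"P": Mailbox(), "Q": Mailbox()}
    result = {}

    def process_q() -> None:
        # Receive Y, P, t: names the sender, the tag, and local storage Y.
        result["Y"] = mailboxes["Q"].receive(src="P", tag=7)

    q = threading.Thread(target=process_q)
    q.start()
    x = [1, 2, 3]
    send(mailboxes, src="P", dest="Q", tag=7, data=x)  # Send X, Q, t
    q.join()
    return result["Y"]
```

`demo()` returns `[1, 2, 3]`. The copy in `deliver` and the search in `receive` correspond to the copying and buffer-management overheads listed on this slide.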


Evolution of Message-Passing Machines

Early machines: FIFO on each link

HW close to prog. model; synchronous ops

Replaced by DMA, enabling non-blocking ops

Buffered by system at destination until recv

Diminishing role of topology

Store&forward routing: topology important

Introduction of pipelined routing made it less so

Cost is in node-network interface

Simplifies programming

[Figure: 3-dimensional hypercube with nodes labelled 000 to 111]
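Why pipelined routing reduces the importance of topology can be seen from a simple latency model. The formulas below are a common first-order approximation, not something stated on the slide: store-and-forward pays the full message transfer time at every hop, while pipelined (cut-through) routing pays it once plus a small per-hop delay. The function names and all numbers are hypothetical.

```python
def store_and_forward_latency(msg_bytes: float, bytes_per_us: float,
                              hops: int, per_hop_delay_us: float) -> float:
    """Each hop receives the whole message before forwarding it."""
    return hops * (msg_bytes / bytes_per_us + per_hop_delay_us)


def pipelined_latency(msg_bytes: float, bytes_per_us: float,
                      hops: int, per_hop_delay_us: float) -> float:
    """The message streams through the switches; transfer time is paid once."""
    return msg_bytes / bytes_per_us + hops * per_hop_delay_us


if __name__ == "__main__":
    for hops in (1, 10):
        sf = store_and_forward_latency(1000, 100, hops, 1.0)
        pl = pipelined_latency(1000, 100, hops, 1.0)
        print(f"hops={hops}: store-and-forward {sf} us, pipelined {pl} us")
```

With these made-up numbers, going from 1 hop to 10 hops raises store-and-forward latency from 11 to 110 microseconds but pipelined latency only from 11 to 20. Once hop count matters this little, the dominant cost shifts to the node-network interface.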


Example of MP arch. : IBM SP-2

Made out of essentially complete RS6000 workstations

Network interface integrated in I/O bus (bw limited by I/O bus)

[Figure: IBM SP-2 node. Power 2 CPU with L2 $ on the memory bus; memory controller to 4-way interleaved DRAM; I/O and NIC (i860, NI, DMA, DRAM) on the MicroChannel bus; general interconnection network formed from 8-port switches]


Example Intel Paragon

[Figure: Intel Paragon node. Two i860 processors, each with L1 $, plus NI, DMA, driver, and memory controller to 4-way interleaved DRAM, on the memory bus (64-bit, 50 MHz); 2D grid network (8 bits, 175 MHz, bidirectional) with a processing node attached to every switch. Photo: Sandia's Intel Paragon XP/S-based supercomputer]


Toward Architectural Convergence

Evolution and role of software have blurred boundary

Send/recv supported on SAS machines via buffers

Can construct global address space on MP using hashing

Page-based (or finer-grained) shared virtual memory

Hardware organization converging too

Tighter NI integration even for MP (low-latency, high-bandwidth)

At lower level, even hardware SAS passes hardware messages

Even clusters of workstations/SMPs are parallel systems

Emergence of fast system area networks (SAN)

Programming models distinct, but organizations converging

Nodes connected by general network and communication assists

Implementations also converging, at least in high-end machines

You might also like

- Val Nav Advanced ManualDocument82 pagesVal Nav Advanced ManualJorge Romero100% (1)

- Chapter 01 Computer Organization and Design, Fifth Edition: The Hardware/Software Interface (The Morgan Kaufmann Series in Computer Architecture and Design) 5th EditionDocument49 pagesChapter 01 Computer Organization and Design, Fifth Edition: The Hardware/Software Interface (The Morgan Kaufmann Series in Computer Architecture and Design) 5th EditionPriyanka Meena83% (6)

- Computer Organization and Design 4th Edition Chapter 1 SlidesDocument9 pagesComputer Organization and Design 4th Edition Chapter 1 SlidesMark PerkinsNo ratings yet

- Linear RegulatorDocument10 pagesLinear Regulatormtechsurendra123No ratings yet

- Legato Technologies Assessment 2: Test SummaryDocument41 pagesLegato Technologies Assessment 2: Test SummaryP.Nanda Kumar80% (5)

- Introduction to Parallel Processing FundamentalsDocument44 pagesIntroduction to Parallel Processing FundamentalspatasutoshNo ratings yet

- Ec6009 Advanced Computer Architecture Unit I Fundamentals of Computer Design 9Document15 pagesEc6009 Advanced Computer Architecture Unit I Fundamentals of Computer Design 9Anitha DenisNo ratings yet

- Aca 1st UnitDocument13 pagesAca 1st UnitShobha KumarNo ratings yet

- Cse.m-ii-Advances in Computer Architecture (12scs23) - NotesDocument213 pagesCse.m-ii-Advances in Computer Architecture (12scs23) - NotesnbprNo ratings yet

- ACA NotesDocument156 pagesACA NotesSharath MonappaNo ratings yet

- Unit I Fundamentals of Computer Design and Ilp-1-14Document14 pagesUnit I Fundamentals of Computer Design and Ilp-1-14vamsidhar2008No ratings yet

- CA Lecture 1Document28 pagesCA Lecture 1sibghamehboob6No ratings yet

- Cse Viii Advanced Computer Architectures (06cs81) NotesDocument156 pagesCse Viii Advanced Computer Architectures (06cs81) NotesJupe JonesNo ratings yet

- Advanced Computer Architecture: 563 L02.1 Fall 2011Document57 pagesAdvanced Computer Architecture: 563 L02.1 Fall 2011bashar_engNo ratings yet

- 2006 - EBook Architecture - All - 5th Semester - Computer Science and EngineeringDocument119 pages2006 - EBook Architecture - All - 5th Semester - Computer Science and Engineeringardiansyah permadigunaNo ratings yet

- Chapter 1 Fundamentals of Computer DesignDocument40 pagesChapter 1 Fundamentals of Computer DesignAbdullahNo ratings yet

- Fundamentals of Computer DesignDocument14 pagesFundamentals of Computer DesignYutyu YuiyuiNo ratings yet

- Computer Architecture: Challenges and Opportunities For The Next DecadeDocument13 pagesComputer Architecture: Challenges and Opportunities For The Next DecadePranju GargNo ratings yet

- Chapter 1: Computer Abstractions and TechnologyDocument50 pagesChapter 1: Computer Abstractions and TechnologyGreen ChiquitaNo ratings yet

- CH 1 Intro To Parallel ArchitectureDocument18 pagesCH 1 Intro To Parallel Architecturedigvijay dholeNo ratings yet

- 1Q. Explain The Fundamentals of Computer Design 1.1 Fundamentals of Computer DesignDocument19 pages1Q. Explain The Fundamentals of Computer Design 1.1 Fundamentals of Computer DesigntgvrharshaNo ratings yet

- Chapter 1: Understanding Computer Hardware and SoftwareDocument47 pagesChapter 1: Understanding Computer Hardware and SoftwareShyamala RamachandranNo ratings yet

- CIS775: Computer Architecture: Chapter 1: Fundamentals of Computer DesignDocument43 pagesCIS775: Computer Architecture: Chapter 1: Fundamentals of Computer DesignPranjal JainNo ratings yet

- Comp OrganizationDocument49 pagesComp Organizationmanas0% (1)

- CH 01. Fundamentals of Quantitative Design and AnalysisDocument27 pagesCH 01. Fundamentals of Quantitative Design and AnalysisFaheem KhanNo ratings yet

- Multicore Embeddedfinal RevisedDocument9 pagesMulticore Embeddedfinal RevisedkyooNo ratings yet

- Eight Great Ideas in Computer ArchitectureDocument64 pagesEight Great Ideas in Computer ArchitecturePavithra JanarthananNo ratings yet

- Fundamentals of Computer Design Unit 1-Chapter 1: ReferenceDocument53 pagesFundamentals of Computer Design Unit 1-Chapter 1: ReferencesandeepNo ratings yet

- RTSEC DocumentationDocument4 pagesRTSEC DocumentationMark JomaoasNo ratings yet

- Data-Level Parallelism in Vector, SIMD, and GPU ArchitecturesDocument2 pagesData-Level Parallelism in Vector, SIMD, and GPU ArchitecturesAngrry BurdNo ratings yet

- Matlab TutorialDocument196 pagesMatlab Tutorialnickdash09No ratings yet

- IntroDocument11 pagesIntroabesalok123No ratings yet

- Computer Performance Enhancing Techniques - Session-2Document36 pagesComputer Performance Enhancing Techniques - Session-2LathaNo ratings yet

- Billion Isca2015Document13 pagesBillion Isca2015Raj KarthikNo ratings yet

- Advanced Computer Architecture: AzvjvhdDocument61 pagesAdvanced Computer Architecture: AzvjvhdKeshav LamichhaneNo ratings yet

- The Greening of IT: A Practitioner's View: Tag Line, Tag LineDocument34 pagesThe Greening of IT: A Practitioner's View: Tag Line, Tag LineAhmed MallouhNo ratings yet

- Ch.2 Performance Issues: Computer Organization and ArchitectureDocument25 pagesCh.2 Performance Issues: Computer Organization and ArchitectureZhen XiangNo ratings yet

- CS6303 Computer Architecture ACT NotesDocument76 pagesCS6303 Computer Architecture ACT NotesSuryaNo ratings yet

- Parallel Computing's Growing RelevanceDocument25 pagesParallel Computing's Growing RelevancekarunakarNo ratings yet

- Computer Architecture & OrganizationDocument3 pagesComputer Architecture & OrganizationAlberto Jr. AguirreNo ratings yet

- 1Q. Explain The Fundamentals of Computer Design 1.1 Fundamentals of Computer DesignDocument19 pages1Q. Explain The Fundamentals of Computer Design 1.1 Fundamentals of Computer DesignGopi NathNo ratings yet

- CS6303 Computer Architecture ACT Notes b6608Document76 pagesCS6303 Computer Architecture ACT Notes b6608mightymkNo ratings yet

- Ficha Técnica Microflow Alfa AquariaDocument2 pagesFicha Técnica Microflow Alfa AquariaDámaris BMNo ratings yet

- (Lecture Games) Python Programming Game: Andreas Lyngstad Johnsen Georgy UshakovDocument110 pages(Lecture Games) Python Programming Game: Andreas Lyngstad Johnsen Georgy UshakovGiani BuzatuNo ratings yet

- Security System For DNS Using CryptographyDocument14 pagesSecurity System For DNS Using CryptographySandeep UndrakondaNo ratings yet

- 63364enfanuc OpenCNC StatusMonitoring PackageDocument150 pages63364enfanuc OpenCNC StatusMonitoring PackageChiragPhadkeNo ratings yet

- HR Emails in MNC CompanysDocument10 pagesHR Emails in MNC CompanysD Taraka Ramarao PasagadiNo ratings yet

- Popup Calender in ExcelDocument22 pagesPopup Calender in ExcelshawmailNo ratings yet

- HCIA-Storage+V5 0+Version+InstructionDocument3 pagesHCIA-Storage+V5 0+Version+InstructionGustavo Borges STELMATNo ratings yet

- Finals Quiz Database DraftDocument8 pagesFinals Quiz Database DraftOwen LuzNo ratings yet

- IVSS2.0: Intelligent Video Surveillance ServerDocument1 pageIVSS2.0: Intelligent Video Surveillance ServeralbertNo ratings yet

- Open Development EnvironmentDocument8 pagesOpen Development EnvironmentFrancescoNo ratings yet

- Milestone Users Manual PDFDocument113 pagesMilestone Users Manual PDFArt ronicaNo ratings yet

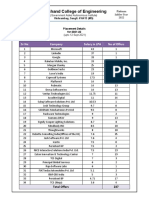

- Walchand College of Engineering: SR No Company Salary in LPA No of OffersDocument2 pagesWalchand College of Engineering: SR No Company Salary in LPA No of OffersHrudayraj VibhuteNo ratings yet

- Eclipse Download and InstallationMIODocument31 pagesEclipse Download and InstallationMIOAndrew G. McDonaldNo ratings yet

- Work To Do List Micro Project ReportDocument18 pagesWork To Do List Micro Project ReportsaitamaNo ratings yet

- Assignment 3CDocument5 pagesAssignment 3CXYZNo ratings yet

- India - Internet Penetration Rate 2021 - StatistaDocument2 pagesIndia - Internet Penetration Rate 2021 - StatistamojakepNo ratings yet

- 22 How To Create A Pivot Table Report in ExcelDocument2 pages22 How To Create A Pivot Table Report in ExcelmajestyvayohNo ratings yet

- Read MeDocument5 pagesRead MeCesar SarangoNo ratings yet

- PIMS1Document24 pagesPIMS1Chiranjibi BeheraNo ratings yet

- StatsDocument1,561 pagesStatsAlonso SH100% (1)

- MongoDB Cheat Sheet Red Hat DeveloperDocument16 pagesMongoDB Cheat Sheet Red Hat DeveloperYãsh ßhúvaNo ratings yet

- Read Me FirstDocument2 pagesRead Me FirstCristian StancuNo ratings yet

- Smartbox - BrochureDocument32 pagesSmartbox - BrochureKidz to Adultz ExhibitionsNo ratings yet

- (A) Describe The Concepts of User Requirements and System Requirements and Why These Requirements May Be Expressed Using Different NotationDocument2 pages(A) Describe The Concepts of User Requirements and System Requirements and Why These Requirements May Be Expressed Using Different NotationMyo Thi HaNo ratings yet

- BTRFS: The Linux B-Tree Filesystem: ACM Transactions On Storage August 2013Document55 pagesBTRFS: The Linux B-Tree Filesystem: ACM Transactions On Storage August 2013Klaus BertokNo ratings yet

- Cs525: Special Topics in DBS: Large-Scale Data ManagementDocument35 pagesCs525: Special Topics in DBS: Large-Scale Data ManagementWoya MaNo ratings yet

- Netzwerk-neu-A1-Kursbuch Pages 1-50 - Flip PDF Download | FlipHTML5Document176 pagesNetzwerk-neu-A1-Kursbuch Pages 1-50 - Flip PDF Download | FlipHTML5m5wd82w9fbNo ratings yet

- Agilent RTEConceptsGuide A.01.04Document170 pagesAgilent RTEConceptsGuide A.01.04Carolina MontañaNo ratings yet