Stat 497 - LN4

Uploaded by

Julian Diaz

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Stat 497 - LN4

Uploaded by

Julian DiazCopyright:

Available Formats

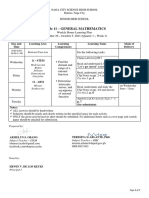

STAT 497

LECTURE NOTES 4

MODEL IDENTIFICATION AND NON-STATIONARY TIME SERIES MODELS

MODEL IDENTIFICATION

We have learned a large class of linear parametric models for stationary time series processes.

Now, the question is how to find the most suitable model for a given observed series, that is, how to choose the appropriate orders p and q.

MODEL IDENTIFICATION

ACF and PACF show specific properties for specific models. Hence, we can use them as criteria to identify a suitable model.

Using the patterns of the sample ACF and sample PACF, we can identify the model.

MODEL SELECTION THROUGH CRITERIA

Besides sACF and sPACF plots, we also have other tools for model identification.

With messy real data, sACF and sPACF plots become complicated and harder to interpret.

Don't forget to choose the best model with as few parameters as possible.

It will be seen that many different models can fit the same data, so we should choose the most appropriate one (with fewer parameters), and the information criteria will help us decide.

MODEL SELECTION THROUGH CRITERIA

The three well-known information criteria are:

Akaike's information criterion (AIC) (Akaike, 1974)

Schwarz's Bayesian criterion (SBC) (Schwarz, 1978), also known as the Bayesian information criterion (BIC)

Hannan-Quinn information criterion (HQIC) (Hannan & Quinn, 1979)

AIC

Assume that a statistical model of M parameters is fitted to data:

$$\mathrm{AIC} = -2\ln[\text{maximum likelihood}] + 2M.$$

For the ARMA model and n observations, the log-likelihood function is

$$\ln L = -\frac{n}{2}\ln\left(2\pi\sigma_a^2\right) - \frac{1}{2\sigma_a^2}\,S(\phi,\mu,\theta),$$

where $S(\phi,\mu,\theta)$ is the residual sum of squares, assuming $a_t \overset{i.i.d.}{\sim} N(0,\sigma_a^2)$.

AIC

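Then, the maximized log-likelihood is

$$\ln \hat{L} = -\frac{n}{2}\ln\hat{\sigma}_a^2 - \frac{n}{2}\left(1+\ln 2\pi\right),$$

where the second term is a constant. Dropping it,

$$\mathrm{AIC} = n\ln\hat{\sigma}_a^2 + 2M.$$

Choose the model (or the value of M) with minimum AIC.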

SBC

The Bayesian information criterion (BIC) or

Schwarz Criterion (also SBC, SBIC) is a criterion

for model selection among a class of

parametric models with different numbers of

parameters.

When estimating model parameters using

maximum likelihood estimation, it is possible to

increase the likelihood by adding additional

parameters, which may result in overfitting.

The BIC resolves this problem by introducing a

penalty term for the number of parameters in

the model.


SBC

In SBC, the penalty for additional parameters is stronger than that of the AIC.

It has superior large-sample properties.

It is consistent, unbiased and sufficient.

$$\mathrm{SBC} = n\ln\hat{\sigma}_a^2 + M\ln n$$

HQIC

The Hannan-Quinn information criterion

(HQIC) is an alternative to AIC and SBC.

It can be shown [see Hannan (1980)] that in

the case of common roots in the AR and MA

polynomials, the Hannan-Quinn and Schwarz

criteria still select the correct orders p and q

consistently.

$$\mathrm{HQIC} = n\ln\hat{\sigma}_a^2 + 2M\ln(\ln n)$$
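The three criteria share the same goodness-of-fit term and differ only in the penalty, which a few lines of code make concrete. The following is a minimal sketch, assuming the residual-variance forms of AIC, SBC and HQIC given on these slides; the function name `information_criteria` and the candidate models with their residual variances are hypothetical values chosen only for illustration.

```python
import math

def information_criteria(sigma2_hat, n, M):
    """Return (AIC, SBC, HQIC) from the estimated residual variance.

    sigma2_hat: estimated innovation variance, n: number of observations,
    M: number of fitted parameters.
    """
    fit = n * math.log(sigma2_hat)                 # common goodness-of-fit term
    aic = fit + 2 * M                              # AIC penalty: 2M
    sbc = fit + M * math.log(n)                    # SBC penalty: M ln n
    hqic = fit + 2 * M * math.log(math.log(n))     # HQIC penalty: 2M ln(ln n)
    return aic, sbc, hqic

# Hypothetical candidates: name -> (residual variance, number of parameters)
candidates = {"ARMA(1,0)": (1.30, 1), "ARMA(1,1)": (1.21, 2), "ARMA(2,2)": (1.20, 4)}
n_obs = 100
scores = {name: information_criteria(s2, n_obs, m)
          for name, (s2, m) in candidates.items()}

# Pick the model with the smallest SBC (index 1 of each tuple)
best_by_sbc = min(scores, key=lambda name: scores[name][1])
print(best_by_sbc)
```

In this made-up comparison the largest model has the smallest residual variance, yet its penalty outweighs the small gain in fit, which is the parsimony argument made earlier.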

THE INVERSE AUTOCORRELATION

FUNCTION

The sample inverse autocorrelation function

(SIACF) plays much the same role in ARIMA

modeling as the sample partial

autocorrelation function (SPACF), but it

generally indicates subset and seasonal

autoregressive models better than the SPACF.


THE INVERSE AUTOCORRELATION

FUNCTION

Additionally, the SIACF can be useful for detecting

over-differencing. If the data come from a

nonstationary or nearly nonstationary model, the

SIACF has the characteristics of a noninvertible

moving-average. Likewise, if the data come from

a model with a noninvertible moving average,

then the SIACF has nonstationary characteristics

and therefore decays slowly. In particular, if the

data have been over-differenced, the SIACF looks

like a SACF from a nonstationary process.

THE INVERSE AUTOCORRELATION

FUNCTION

Let $Y_t$ be generated by the ARMA(p, q) process

$$\phi_p(B)\,Y_t = \theta_q(B)\,a_t, \quad \text{where } a_t \sim WN(0,\sigma_a^2).$$

If $\theta(B)$ is invertible, then the model

$$\theta_q(B)\,Z_t = \phi_p(B)\,a_t$$

is also a valid ARMA(q, p) model. This model is sometimes referred to as the dual model.

The autocorrelation function (ACF) of this dual model is called the inverse autocorrelation function (IACF) of the original model.

THE INVERSE AUTOCORRELATION

FUNCTION

Notice that if the original model is a pure

autoregressive model, then the IACF is an ACF that

corresponds to a pure moving-average model. Thus, it

cuts off sharply when the lag is greater than p; this

behavior is similar to the behavior of the partial

autocorrelation function (PACF).

Under certain conditions, the sampling distribution of

the SIACF can be approximated by the sampling

distribution of the SACF of the dual model (Bhansali

1980). In the plots generated by ARIMA, the confidence limit marks (.) are located at $\pm 2/\sqrt{n}$. These limits bound an approximate 95% confidence interval for the hypothesis that the data are from a white noise process.
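The cut-off property of the IACF for a pure autoregressive model can be checked numerically. The sketch below is illustrative only: for an AR(p) model the dual model is the MA(p) process $Z_t = \phi_p(B)a_t$, so its theoretical ACF is computed directly from the AR coefficients. The helper names `ma_acf` and `iacf_of_ar` and the value 0.5 are assumptions made for the example.

```python
import numpy as np

def ma_acf(theta, max_lag):
    """Theoretical ACF of Z_t = a_t - theta_1 a_{t-1} - ... - theta_q a_{t-q}."""
    psi = np.concatenate(([1.0], -np.asarray(theta, dtype=float)))
    gamma0 = np.sum(psi ** 2)          # variance up to the factor sigma_a^2
    acf = np.zeros(max_lag + 1)
    acf[0] = 1.0
    for k in range(1, max_lag + 1):
        if k < len(psi):               # autocovariance is zero beyond lag q
            acf[k] = np.sum(psi[:-k] * psi[k:]) / gamma0
    return acf

def iacf_of_ar(phi, max_lag):
    """IACF of a pure AR(p) model: the ACF of its dual MA(p) model."""
    return ma_acf(phi, max_lag)

# AR(1) with phi = 0.5: the IACF is nonzero at lag 1 and zero afterwards
iacf = iacf_of_ar([0.5], max_lag=4)
print(iacf)
```

For the AR(1) case the lag-1 value is $-\phi/(1+\phi^2)$, and every later lag is exactly zero, mirroring the behavior of the PACF.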

THE EXTENDED SAMPLE

AUTOCORRELATION FUNCTION_ESACF

The extended sample autocorrelation function

(ESACF) method can tentatively identify the

orders of a stationary or nonstationary ARMA

process based on iterated least squares

estimates of the autoregressive parameters.

Tsay and Tiao (1984) proposed the technique.


ESACF

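Consider ARMA(p, q) model

$$(1 - \phi_1 B - \cdots - \phi_p B^p)\,Y_t = \theta_0 + (1 - \theta_1 B - \cdots - \theta_q B^q)\,a_t$$

or

$$Y_t = \theta_0 + \phi_1 Y_{t-1} + \cdots + \phi_p Y_{t-p} + a_t - \theta_1 a_{t-1} - \cdots - \theta_q a_{t-q},$$

then

$$Z_t = (1 - \phi_1 B - \cdots - \phi_p B^p)\,Y_t$$

follows an MA(q) model

$$Z_t = \theta_0 + a_t - \theta_1 a_{t-1} - \cdots - \theta_q a_{t-q}.$$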

ESACF

Given a stationary or nonstationary time series $Y_t$ with mean corrected form $\tilde{Y}_t = Y_t - \mu$, with a true autoregressive order of p+d and with a true moving-average order of q, we can use the ESACF method to estimate the unknown orders p+d and q by analyzing the sample autocorrelation functions associated with filtered series of the form

$$Z_t^{(m,j)} = \hat{\Phi}_{(m,j)}(B)\,\tilde{Y}_t = \tilde{Y}_t - \sum_{i=1}^{m}\hat{\phi}_i^{(m,j)}\,\tilde{Y}_{t-i},$$

where the $\hat{\phi}_i^{(m,j)}$'s are the parameter estimates under the assumption that the series is an ARMA(m, j) process.

ESACF

It is known that OLS estimators for an ARMA process are not consistent, so an iterative procedure is proposed to overcome this:

$$\hat{\phi}_i^{(m,j)} = \hat{\phi}_i^{(m+1,j-1)} - \hat{\phi}_{i-1}^{(m,j-1)}\,\frac{\hat{\phi}_{m+1}^{(m+1,j-1)}}{\hat{\phi}_m^{(m,j-1)}}.$$

The j-th lag of the sample autocorrelation function of the filtered series is the extended sample autocorrelation function, and it is denoted as $\hat{\rho}_j^{(m)}$.

ESACF

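ESACF TABLE

MA

AR   0                      1                      2                      3

0    $\hat{\rho}_1^{(0)}$   $\hat{\rho}_2^{(0)}$   $\hat{\rho}_3^{(0)}$   $\hat{\rho}_4^{(0)}$

1    $\hat{\rho}_1^{(1)}$   $\hat{\rho}_2^{(1)}$   $\hat{\rho}_3^{(1)}$   $\hat{\rho}_4^{(1)}$

2    $\hat{\rho}_1^{(2)}$   $\hat{\rho}_2^{(2)}$   $\hat{\rho}_3^{(2)}$   $\hat{\rho}_4^{(2)}$

3    $\hat{\rho}_1^{(3)}$   $\hat{\rho}_2^{(3)}$   $\hat{\rho}_3^{(3)}$   $\hat{\rho}_4^{(3)}$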

ESACF

For an ARMA(p,q) process, we have the following convergence in probability, that is, for m=1,2,... and j=1,2,..., we have

$$\hat{\rho}_j^{(m)} \xrightarrow{\;p\;} \begin{cases} 0, & 0 \le m-p < j-q \\ X \ne 0, & \text{otherwise.} \end{cases}$$

ESACF

Thus, the asymptotic ESACF table for the ARMA(1,1) model becomes

MA

AR 0 1 2 3 4

0 X X X X X

1 X 0 0 0 0

2 X X 0 0 0

3 X X X 0 0

4 X X X X 0
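The triangle of zeros follows mechanically from the convergence rule, so the table can be generated for any orders. The sketch below assumes the layout of the ESACF table on these slides, where the entry in AR row m and MA column c is the lag j = c + 1 value $\hat{\rho}_{j}^{(m)}$; the function name `asymptotic_esacf_table` is hypothetical.

```python
def asymptotic_esacf_table(p, q, max_ar=4, max_ma=4):
    """Return the asymptotic ESACF pattern ('0' or 'X') for an ARMA(p, q) process."""
    rows = []
    for m in range(max_ar + 1):            # AR test order (table row)
        row = []
        for col in range(max_ma + 1):      # MA column; it holds lag j = col + 1
            j = col + 1
            # Entry tends to zero exactly when 0 <= m - p < j - q
            row.append("0" if 0 <= m - p < j - q else "X")
        rows.append(row)
    return rows

# Reproduce the ARMA(1,1) table: the vertex of the zero triangle sits at (1, 1)
for m, row in enumerate(asymptotic_esacf_table(1, 1)):
    print(m, " ".join(row))
```

Changing the arguments, for instance to (2, 1), moves the vertex of the triangle to row 2 and column 1, which is how the pattern identifies the orders.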

ESACF

In practice, we have finite samples and $\hat{\rho}_j^{(m)}$, $0 \le m-p < j-q$, may not be exactly zero.

However, we can use Bartlett's approximate formula for the asymptotic variance of $\hat{\rho}_j^{(m)}$.

The orders are tentatively identified by finding a right (maximal) triangular pattern with vertices located at (p+d, q) and (p+d, $q_{max}$) and in which all elements are insignificant (based on asymptotic normality of the autocorrelation function). The vertex (p+d, q) identifies the order.

MINIMUM INFORMATION CRITERION

MINIC TABLE


MA

AR 0 1 2 3

0 SBC(0,0) SBC(0,1) SBC(0,2) SBC(0,3)

1 SBC(1,0) SBC(1,1) SBC(1,2) SBC(1,3)

2 SBC(2,0) SBC(2,1) SBC(2,2) SBC(2,3)

3 SBC(3,0) SBC(3,1) SBC(3,2) SBC(3,3)

NON-STATIONARY TIME SERIES MODELS

Non-constant in mean

Non-constant in variance

Both


NON-STATIONARY TIME SERIES

MODELS

Inspection of the ACF serves as a rough indicator of whether a trend is present in a series. A slow decay in the ACF is indicative of a large characteristic root, a true unit root process, or a trend stationary process.

Formal tests can help to determine whether a system contains a trend and whether the trend is deterministic or stochastic.

NON-STATIONARITY IN MEAN

Deterministic trend

Detrending

Stochastic trend

Differencing


DETERMINISTIC TREND

A series has a deterministic trend when it trends because it is an explicit function of time.

Using a simple linear trend model, the deterministic (global) trend can be estimated. This approach is very simple and assumes that the pattern represented by the linear trend remains fixed over the observed time span of the series. A simple linear trend model:

$$Y_t = \alpha + \beta t + a_t$$

DETERMINISTIC TREND

The parameter $\beta$ measures the average change in $Y_t$ from one period to another:

$$\Delta Y_t = Y_t - Y_{t-1} = \beta\,[t - (t-1)] + (a_t - a_{t-1}) = \beta + (a_t - a_{t-1})$$

$$E(\Delta Y_t) = \beta$$

The sequence $\{Y_t\}$ will exhibit only temporary departures from the trend line $\alpha + \beta t$. This type of model is called a trend stationary (TS) model.

TREND STATIONARY

If a series has a deterministic time trend, then we simply regress $Y_t$ on an intercept and a time trend (t=1,2,...,n) and save the residuals. The residuals are the detrended series. If the trend in $Y_t$ is stochastic, we do not necessarily get a stationary series.
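The regression described above can be sketched with ordinary least squares on an intercept and a time index. This is a minimal illustration using NumPy; the function name `detrend_linear` and the simulated series are assumptions made for the example, not part of the lecture.

```python
import numpy as np

def detrend_linear(y):
    """Regress y on an intercept and t = 1..n; return (coefficients, residuals)."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    t = np.arange(1, n + 1, dtype=float)
    X = np.column_stack([np.ones(n), t])          # design matrix [1, t]
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)  # OLS estimates of (alpha, beta)
    resid = y - X @ coef                          # detrended series
    return coef, resid

# Hypothetical trend stationary series: Y_t = 2 + 0.5 t + a_t
rng = np.random.default_rng(1)
t = np.arange(1, 101)
y = 2.0 + 0.5 * t + rng.normal(size=t.size)
(alpha_hat, beta_hat), residuals = detrend_linear(y)
print(alpha_hat, beta_hat)
```

The residuals should then be inspected (for example through their sample ACF) before treating them as stationary, since the regression removes only a deterministic trend.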

DETERMINISTIC TREND

Many economic series exhibit exponential trend/growth. They grow over time like an exponential function instead of a linear function.

For such series, we want to work with the log of the series:

$$\ln(Y_t) = \alpha + \beta t + a_t$$

So the average growth rate is:

$$E(\Delta \ln Y_t) = \beta$$

DETERMINISTIC TREND

A standard regression model can be used to describe the phenomenon. If the deterministic trend can be described by a k-th order polynomial of time, the model of the process is

$$Y_t = \alpha_0 + \alpha_1 t + \alpha_2 t^2 + \cdots + \alpha_k t^k + a_t, \quad \text{where } a_t \sim WN(0,\sigma_a^2).$$

DETERMINISTIC TREND

This model has a short memory.

If a shock hits the series, it goes back to the trend level in a short time. Hence, the best forecasts are not affected.

A model like this is rarely useful in practice. A more realistic model involves a stochastic (local) trend.

STOCHASTIC TREND

A more modern approach is to consider trends

in time series as a variable. A variable trend

exists when a trend changes in an

unpredictable way. Therefore, it is considered

as stochastic.


STOCHASTIC TREND

Recall the AR(1) model: $Y_t = c + \phi Y_{t-1} + a_t$.

As long as $|\phi| < 1$, everything is fine (OLS is consistent, t-stats are asymptotically normal, ...).

Now consider the extreme case where $\phi = 1$, i.e. $Y_t = c + Y_{t-1} + a_t$.

Where is the trend? No t term.

STOCHASTIC TREND

Let us replace recursively the lag of $Y_t$ on the right-hand side:

$$Y_t = c + Y_{t-1} + a_t = c + (c + Y_{t-2} + a_{t-1}) + a_t = \cdots = \underbrace{tc}_{\text{deterministic trend}} + Y_0 + \sum_{i=1}^{t} a_i$$

This is what we call a random walk with drift. If c = 0, it is a random walk.
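The recursive substitution can be verified numerically: iterating the recursion and evaluating the closed form give the same path. A small sketch, with the drift, starting value, and seed chosen arbitrarily for illustration:

```python
import numpy as np

rng = np.random.default_rng(0)
c, y0, n = 0.2, 5.0, 50          # hypothetical drift, initial value, sample size
a = rng.normal(size=n)           # white noise shocks a_1, ..., a_n

# Recursive definition: Y_t = c + Y_{t-1} + a_t
y_rec = np.empty(n)
prev = y0
for t in range(n):
    prev = c + prev + a[t]
    y_rec[t] = prev

# Closed form: Y_t = t*c + Y_0 + sum_{i=1}^{t} a_i
t_idx = np.arange(1, n + 1)
y_closed = t_idx * c + y0 + np.cumsum(a)

print(np.allclose(y_rec, y_closed))
```

The term `t_idx * c` is the deterministic part and `np.cumsum(a)` is the accumulated-shock part of the same series.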

STOCHASTIC TREND

Each $a_i$ shock represents a shift in the intercept. Since all values of $\{a_i\}$ have a coefficient of unity, the effect of each shock on the intercept term is permanent.

In the time series literature, such a sequence is said to have a stochastic trend since each $a_i$ shock imparts a permanent and random change in the conditional mean of the series. To describe this situation, we use Autoregressive Integrated Moving Average (ARIMA) models.

DETERMINISTIC VS STOCHASTIC TREND

They might appear similar since they both lead to growth over time, but they are quite different.

To see why, suppose that through some policy you got a bigger $Y_t$ because the noise $a_t$ is big. What will happen next period?

With a deterministic trend, $Y_{t+1} = c + \beta(t+1) + a_{t+1}$. The noise $a_t$ does not affect $Y_{t+1}$. Your stupendous policy had a one-period impact.

With a stochastic trend, $Y_{t+1} = c + Y_t + a_{t+1} = c + (c + Y_{t-1} + a_t) + a_{t+1}$. The noise $a_t$ affects $Y_{t+1}$. In fact, the policy will have a permanent impact.
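The one-period versus permanent impact can be made visible by feeding the same single shock into both models and looking at the difference from the unshocked path. This is an illustrative sketch; the function names, the parameter values, and the shock size of 5 at the third period are all hypothetical.

```python
import numpy as np

def deterministic_path(c, beta, a):
    """Y_t = c + beta*t + a_t for t = 1..n."""
    t = np.arange(1, len(a) + 1)
    return c + beta * t + a

def stochastic_path(c, y0, a):
    """Y_t = c + Y_{t-1} + a_t, written as Y_0 + t*c + cumulative shocks."""
    t = np.arange(1, len(a) + 1)
    return y0 + c * t + np.cumsum(a)

baseline = np.zeros(5)           # no shocks at all
shocked = baseline.copy()
shocked[2] = 5.0                 # one large shock in the third period

det_effect = deterministic_path(1.0, 0.5, shocked) - deterministic_path(1.0, 0.5, baseline)
sto_effect = stochastic_path(1.0, 0.0, shocked) - stochastic_path(1.0, 0.0, baseline)

print(det_effect)   # shock appears in one period only
print(sto_effect)   # shock persists in every later period
```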

DETERMINISTIC VS STOCHASTIC TREND

Conclusions:

When dealing with trending series, we are always interested in knowing whether the growth is a deterministic or stochastic trend.

There are also economic time series that do not grow over time (e.g., interest rates), but we will need to check if they have a behavior similar to stochastic trends ($\phi = 1$ instead of $|\phi| < 1$, while c = 0).

A deterministic trend refers to the long-term trend that is not affected by short-term fluctuations in the economy. Some of the occurrences in the economy are random and may have a permanent effect on the trend. Therefore the trend must contain a deterministic and a stochastic component.

AUTOREGRESSIVE INTEGRATED

MOVING AVERAGE (ARIMA) PROCESSES

Consider an ARIMA(p,d,q) process

$$\phi_p(B)(1-B)^d\,Y_t = \theta_0 + \theta_q(B)\,a_t,$$

where $\phi_p(B) = 1 - \phi_1 B - \cdots - \phi_p B^p$ and $\theta_q(B) = 1 - \theta_1 B - \cdots - \theta_q B^q$ share no common roots, and $a_t \sim WN(0,\sigma_a^2)$.

ARIMA MODELS

When d=0, $\theta_0$ is related to the mean of the process:

$$\theta_0 = \mu\,(1 - \phi_1 - \cdots - \phi_p).$$

When d>0, $\theta_0$ is a deterministic trend term.

Non-stationary in mean:

$$\phi_p(B)(1-B)\,Y_t = \theta_0 + \theta_q(B)\,a_t$$

Non-stationary in level and slope:

$$\phi_p(B)(1-B)^2\,Y_t = \theta_0 + \theta_q(B)\,a_t$$

RANDOM WALK PROCESS

A random walk is defined as a process where the current value of a variable is composed of the past value plus an error term defined as a white noise (an uncorrelated variable with zero mean and constant variance $\sigma_a^2$).

ARIMA(0,1,0) PROCESS

$$Y_t = Y_{t-1} + a_t \;\Rightarrow\; \Delta Y_t = (1-B)\,Y_t = a_t, \quad \text{where } a_t \sim WN(0,\sigma_a^2).$$

RANDOM WALK PROCESS

Behavior of stock market.

Brownian motion.

Movement of a drunken man.

It is a limiting process of AR(1).

RANDOM WALK PROCESS

The implication of a process of this type is that the best prediction of Y for next period is the current value, or in other words the process does not allow us to predict the change $(Y_t - Y_{t-1})$. That is, the change of Y is absolutely random.

It can be shown that the mean of a random walk process is constant but its variance is not. Therefore a random walk process is nonstationary, and its variance increases with t.

In practice, the presence of a random walk process makes the forecast process very simple since the forecast of every future value $Y_{t+s}$, s > 0, is simply $Y_t$.
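The claim about the mean and variance can be worked out in a few lines. The derivation below is a sketch under two assumptions that the slides do not state explicitly: the starting value $Y_0$ is treated as fixed, and the shocks $a_i$ are uncorrelated with common variance $\sigma_a^2$.

$$Y_t = Y_0 + \sum_{i=1}^{t} a_i \quad\Rightarrow\quad E(Y_t) = Y_0, \qquad Var(Y_t) = \sum_{i=1}^{t} Var(a_i) = t\,\sigma_a^2.$$

$$Cov(Y_t, Y_{t-k}) = (t-k)\,\sigma_a^2, \qquad Corr(Y_t, Y_{t-k}) = \frac{(t-k)\,\sigma_a^2}{\sqrt{t\,\sigma_a^2\,(t-k)\,\sigma_a^2}} = \sqrt{\frac{t-k}{t}}.$$

The variance grows linearly in t, and for t large relative to k the correlation is close to 1, which is why the sample ACF of a random walk decays so slowly.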


RANDOM WALK WITH DRIFT

Change in $Y_t$ is partially deterministic and partially stochastic:

$$\Delta Y_t = Y_t - Y_{t-1} = \theta_0 + a_t.$$

It can also be written as

$$Y_t = Y_0 + \underbrace{t\,\theta_0}_{\text{deterministic trend}} + \underbrace{\sum_{i=1}^{t} a_i}_{\text{stochastic trend}}.$$

Pure model of a trend (no stationary component).

RANDOM WALK WITH DRIFT

$$E(Y_t) = Y_0 + t\,\theta_0$$

After t periods, the cumulative change in $Y_t$ is $t\theta_0$.

$$E(Y_{t+s} \mid Y_t) = Y_t + s\,\theta_0 \quad (\text{not flat})$$

Each $a_i$ shock has a permanent effect on the mean of $Y_t$.

ARIMA(0,1,1) OR IMA(1,1) PROCESS

Consider a process

$$(1-B)\,Y_t = (1-\theta B)\,a_t, \quad \text{where } a_t \sim WN(0,\sigma_a^2).$$

Letting $W_t = (1-B)\,Y_t$,

$$W_t = (1-\theta B)\,a_t \quad \text{is stationary.}$$

ARIMA(0,1,1) OR IMA(1,1) PROCESS

Characterized by the sample ACF of the original series failing to die out and by the sample ACF of the first differenced series showing the pattern of MA(1).

If $|\theta| < 1$:

$$Y_t = \alpha \sum_{j=1}^{\infty} (1-\alpha)^{j-1}\,Y_{t-j} + a_t, \quad \text{where } \alpha = 1-\theta.$$

$$E(Y_t \mid Y_{t-1}, Y_{t-2}, \ldots) = \alpha \sum_{j=1}^{\infty} (1-\alpha)^{j-1}\,Y_{t-j}$$

The weights are exponentially decreasing: the conditional mean is a weighted MA of its past values.

ARIMA(0,1,1) OR IMA(1,1) PROCESS

$$E(Y_{t+1} \mid Y_t, Y_{t-1}, \ldots) = \alpha\,Y_t + (1-\alpha)\,E(Y_t \mid Y_{t-1}, Y_{t-2}, \ldots),$$

where $\alpha$ is the smoothing constant in the method of exponential smoothing.
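The updating rule for the one-step-ahead conditional mean is exactly the simple exponential smoothing recursion, which takes only a short loop to apply. In the sketch below the function name `exponential_smoothing_forecast` and the starting forecast `initial` are assumptions; in practice the infinite past is not available, so some initial value has to be chosen.

```python
def exponential_smoothing_forecast(y, alpha, initial):
    """Apply forecast_{t+1} = alpha * y_t + (1 - alpha) * forecast_t over the series.

    Returns the list of one-step-ahead forecasts; the last element is the
    forecast for the period after the final observation.
    """
    forecasts = []
    forecast = initial                      # assumed forecast of the first observation
    for value in y:
        forecast = alpha * value + (1 - alpha) * forecast
        forecasts.append(forecast)
    return forecasts

# Hypothetical data and smoothing constant alpha = 1 - theta = 0.5
path = exponential_smoothing_forecast([10.0, 20.0, 20.0], alpha=0.5, initial=10.0)
print(path)
```

A value of alpha close to 1 (theta near 0) makes the forecast track the latest observation, while alpha close to 0 spreads the weight over a long history.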

REMOVING THE TREND

Shocks to a stationary time series are temporary; over time, the series reverts to its long-run mean.

A series containing a trend will not revert to a long-run mean. The usual methods for eliminating the trend are detrending and differencing.

DETRENDING

Detrending is used to remove deterministic

trend.

Regress $Y_t$ on time and save the residuals.

Then, check whether the residuals are stationary.

DIFFERENCING

Differencing is used for removing the

stochastic trend.

The d-th difference of an ARIMA(p,d,q) model is stationary. A series containing unit roots can be made stationary by differencing.

ARIMA(p,d,q) has d unit roots: the series is integrated of order d, I(d), written $Y_t \sim I(d)$.

DIFFERENCING

Random Walk:

$$Y_t = Y_{t-1} + a_t \quad \text{(non-stationary)}$$

$$\Delta Y_t = a_t \quad \text{(stationary)}$$

DIFFERENCING

Differencing always makes us lose observations (one per difference).

1st regular difference: d=1

$$(1-B)\,Y_t = Y_t - Y_{t-1} = \Delta Y_t$$

2nd regular difference: d=2

$$(1-B)^2\,Y_t = (1 - 2B + B^2)\,Y_t = Y_t - 2Y_{t-1} + Y_{t-2} = \Delta^2 Y_t$$

$Y_t - Y_{t-2}$ is not the 2nd difference.

DIFFERENCING

$Y_t$   $\Delta Y_t$   $\Delta^2 Y_t$   $Y_t - Y_{t-2}$

3   *   *   *

8   8-3=5   *   *

5   5-8=-3   -3-5=-8   5-3=2

9   9-5=4   4-(-3)=7   9-8=1
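The small table above can be reproduced directly, which also shows the loss of one observation per difference and the distinction between the second difference and the lag-2 difference. A minimal sketch with NumPy, using the four values from the table:

```python
import numpy as np

y = np.array([3, 8, 5, 9])

first_diff = np.diff(y)          # (1 - B) Y_t: one observation lost
second_diff = np.diff(y, n=2)    # (1 - B)^2 Y_t: two observations lost
lag2_diff = y[2:] - y[:-2]       # Y_t - Y_{t-2}: NOT the second difference

print(first_diff)
print(second_diff)
print(lag2_diff)
```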

KPSS TEST

To test whether we have a deterministic trend versus a stochastic trend, we use the KPSS (Kwiatkowski, Phillips, Schmidt and Shin, 1992) test.

$$H_0: Y_t \sim I(0) \quad \text{(level or trend stationary)}$$

$$H_1: Y_t \sim I(1) \quad \text{(difference stationary)}$$

KPSS TEST

STEP 1: Regress $Y_t$ on a constant and trend and construct the OLS residuals $e = (e_1, e_2, \ldots, e_n)'$.

STEP 2: Obtain the partial sum of the residuals:

$$S_t = \sum_{i=1}^{t} e_i$$

STEP 3: Obtain the test statistic

$$KPSS = n^{-2} \sum_{t=1}^{n} \frac{S_t^2}{\hat{\sigma}^2},$$

where $\hat{\sigma}^2$ is the estimate of the long-run variance of the residuals.

KPSS TEST

STEP 4: Reject $H_0$ when KPSS is large, because that is evidence that the series wanders from its mean.

The asymptotic distribution of the test statistic uses the standard Brownian bridge.

It is the most powerful unit root test, but if there is a volatility shift it cannot catch this type of non-stationarity.
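Steps 1 to 3 translate into a short computation. The sketch below is illustrative rather than a reference implementation: the slides do not say how the long-run variance is estimated, so a Bartlett-weighted autocovariance sum with a user-chosen number of lags is assumed here, and all function names are hypothetical. Critical values are not computed; the statistic would be compared with tabulated ones.

```python
import numpy as np

def long_run_variance(e, lags):
    """Bartlett-weighted long-run variance of residuals e (an assumed estimator)."""
    e = np.asarray(e, dtype=float)
    n = len(e)
    s2 = np.sum(e * e) / n
    for k in range(1, lags + 1):
        gamma_k = np.sum(e[k:] * e[:-k]) / n            # lag-k autocovariance
        s2 += 2.0 * (1.0 - k / (lags + 1.0)) * gamma_k  # Bartlett weight
    return s2

def kpss_from_residuals(e, lags=0):
    """STEP 2 and STEP 3: partial sums and the KPSS statistic."""
    e = np.asarray(e, dtype=float)
    n = len(e)
    S = np.cumsum(e)                                    # S_t = e_1 + ... + e_t
    return np.sum(S ** 2) / (n ** 2 * long_run_variance(e, lags))

def kpss_trend_statistic(y, lags=0):
    """STEP 1: regress y on a constant and trend, then compute the statistic."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    X = np.column_stack([np.ones(n), np.arange(1, n + 1, dtype=float)])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return kpss_from_residuals(y - X @ coef, lags)

# Hypothetical use on a simulated random walk
rng = np.random.default_rng(42)
stat = kpss_trend_statistic(np.cumsum(rng.normal(size=200)), lags=4)
print(stat)
```

Separating `kpss_from_residuals` from the regression step keeps the statistic easy to check by hand on a tiny residual vector.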

PROBLEM

When an inappropriate method is used to eliminate the trend, we may create other problems like non-invertibility.

E.g., for the trend stationary process

$$\phi(B)\,Y_t = \alpha_0 + \alpha_1 t + \varepsilon_t,$$

where the roots of $\phi(B) = 0$ are outside the unit circle and $\varepsilon_t = \theta(B)\,a_t$.

PROBLEM

But if we misjudge the series as difference stationary, we take a difference, although detrending should actually be applied. Then, the first difference is

$$\phi(B)\,\Delta Y_t = \alpha_1 + (1-B)\,\varepsilon_t.$$

Now, we create a non-invertible unit root process in the MA component.
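The simplest special case makes the problem concrete. As an illustration only, assume no ARMA structure in the noise, so the trend stationary series is $Y_t = \alpha_0 + \alpha_1 t + a_t$ with $a_t \sim WN(0,\sigma_a^2)$. Differencing gives

$$\Delta Y_t = \alpha_1 + a_t - a_{t-1} = \alpha_1 + (1 - \theta B)\,a_t \quad \text{with } \theta = 1,$$

an MA(1) whose root lies on the unit circle, so it is not invertible. Its variance and lag-1 autocorrelation are

$$Var(\Delta Y_t) = 2\sigma_a^2, \qquad \rho_1 = \frac{-\theta}{1+\theta^2} = -\frac{1}{2}, \qquad \rho_k = 0 \text{ for } k \ge 2.$$

The differenced series has twice the noise variance of the original, and a lag-1 sample autocorrelation near -0.5 after differencing is a common sign of over-differencing.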

PROBLEM

To overcome this, look at the sample inverse autocorrelation function. If it has the ACF pattern of a non-stationary process (that is, slowly decaying behavior), this means that we over-differenced the series.

Go back and detrend the series instead of differencing.

There are also smoothing filters to eliminate the trend (Decomposition Methods).

NON-STATIONARITY IN VARIANCE

Stationarity in mean does not imply stationarity in variance.

Non-stationarity in mean implies non-stationarity in variance.

If the mean function is time dependent,

1. The variance, $Var(Y_t)$, is time dependent.

2. $Var(Y_t)$ is unbounded as $t \to \infty$.

3. Autocovariance and autocorrelation functions are also time dependent.

4. If t is large wrt $Y_0$, then $\rho_k \approx 1$.

VARIANCE STABILIZING

TRANSFORMATION

The variance of a non-stationary process changes as its level changes:

$$Var[Y_t] = c \cdot f(\mu_t)$$

for some positive constant c and a function f.

Find a function T so that the transformed series $T(Y_t)$ has a constant variance.

The Delta Method

VARIANCE STABILIZING

TRANSFORMATION

Generally, we use the power function

$$T(Y_t) = \frac{Y_t^{\lambda} - 1}{\lambda} \quad \text{(Box and Cox, 1964)}$$

λ      Transformation

-1.0   $1/Y_t$

-0.5   $1/\sqrt{Y_t}$

0.0    $\ln Y_t$

0.5    $\sqrt{Y_t}$

1.0    $Y_t$ (no transformation)
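The power transformation is easy to apply once λ is chosen. The following is a minimal sketch of the Box and Cox form given above, with the λ = 0 case handled as the natural logarithm (its limiting value); the function name `box_cox` is hypothetical. Note that the formula returns a shifted and scaled version of the entries in the table, for example $(\sqrt{Y_t} - 1)/0.5$ for λ = 0.5, which has the same variance-stabilizing effect as $\sqrt{Y_t}$.

```python
import numpy as np

def box_cox(y, lam):
    """Box-Cox power transformation T(y) = (y**lam - 1) / lam, with ln(y) at lam = 0."""
    y = np.asarray(y, dtype=float)
    if np.any(y <= 0):
        # The transformation is defined for positive series only
        raise ValueError("Box-Cox requires a strictly positive series")
    if lam == 0:
        return np.log(y)
    return (y ** lam - 1.0) / lam

series = np.array([1.0, 4.0, 9.0, 16.0])   # hypothetical positive series
for lam in (-1.0, -0.5, 0.0, 0.5, 1.0):
    print(lam, box_cox(series, lam))
```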

VARIANCE STABILIZING

TRANSFORMATION

A variance stabilizing transformation is only for positive series. If your series has negative values, then you need to add a positive constant to every value so that all the values in the series are positive. Then you can check whether a transformation is needed.

It should be performed before any other analysis such as differencing.

It not only stabilizes the variance but also improves the approximation of the distribution by the Normal distribution.
