Professional Documents

Culture Documents

Introduction To Neural Networks

Uploaded by

Deepti Chandra VermaOriginal Description:

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Introduction To Neural Networks

Uploaded by

Deepti Chandra VermaCopyright:

Available Formats

Introduction to Neural Networks

CS405

What are connectionist neural

networks?

Connectionism refers to a computer modeling

approach to computation that is loosely based upon

the architecture of the brain.

Many different models, but all include:

Multiple, individual nodes or units that operate at

the same time (in parallel)

A network that connects the nodes together

Information is stored in a distributed fashion among the

links that connect the nodes

Learning can occur with gradual changes in connection

strength

Neural Network History

History traces back to the 50s but became popular in the 80s

with work by Rumelhart, Hinton, and Mclelland

A General Framework for Parallel Distributed Processing in Parallel

Distributed Processing: Explorations in the Microstructure of

Cognition

Peaked in the 90s. Today:

Hundreds of variants

Less a model of the actual brain than a useful tool, but still some

debate

Numerous applications

Handwriting, face, speech recognition

Vehicles that drive themselves

Models of reading, sentence production, dreaming

Debate for philosophers and cognitive scientists

Can human consciousness or cognitive abilities be explained by a

connectionist model or does it require the manipulation of symbols?

Comparison of Brains and Traditional

Computers

200 billion neurons, 32

trillion synapses

Element size: 10

-6

m

Energy use: 25W

Processing speed: 100 Hz

Parallel, Distributed

Fault Tolerant

Learns: Yes

Intelligent/Conscious:

Usually

1 billion bytes RAM but

trillions of bytes on disk

Element size: 10

-9

m

Energy watt: 30-90W (CPU)

Processing speed: 10

9

Hz

Serial, Centralized

Generally not Fault Tolerant

Learns: Some

Intelligent/Conscious:

Generally No

Biological Inspiration

My brain: It's my second favorite organ.

- Woody Allen, from the movie Sleeper

Idea : To make the computer more robust, intelligent, and learn,

Lets model our computer software (and/or hardware) after the brain

Neurons in the Brain

Although heterogeneous, at a low level the

brain is composed of neurons

A neuron receives input from other neurons

(generally thousands) from its synapses

Inputs are approximately summed

When the input exceeds a threshold the neuron

sends an electrical spike that travels that

travels from the body, down the axon, to the

next neuron(s)

Learning in the Brain

Brains learn

Altering strength between neurons

Creating/deleting connections

Hebbs Postulate (Hebbian Learning)

When an axon of cell A is near enough to excite a cell B and repeatedly

or persistently takes part in firing it, some growth process or metabolic

change takes place in one or both cells such that A's efficiency, as one

of the cells firing B, is increased.

Long Term Potentiation (LTP)

Cellular basis for learning and memory

LTP is the long-lasting strengthening of the connection between two

nerve cells in response to stimulation

Discovered in many regions of the cortex

Perceptrons

Initial proposal of connectionist networks

Rosenblatt, 50s and 60s

Essentially a linear discriminant composed of

nodes, weights

I1

I2

I3

W1

W2

W3

O

'

> +

,

_

otherwise

I w

O

i

i i

: 0

0 : 1

I1

I2

I3

W1

W2

W3

O

or

1

Activation Function

Perceptron Example

2

1

.5

.3

=-1

2(0.5) + 1(0.3) + -1 = 0.3 , O=1

Learning Procedure:

Randomly assign weights (between 0-1)

Present inputs from training data

Get output O, nudge weights to gives results toward our

desired output T

Repeat; stop when no errors, or enough epochs completed

Perception Training

) ( ) ( ) 1 ( t w t w t w

i i i

+ +

i i

I O T t w ) ( ) (

Weights include Threshold. T=Desired, O=Actual output.

5 . 1 ) 2 )( 1 0 ( 5 . 0 ) 1 (

1

+ + t w

Example: T=0, O=1, W1=0.5, W2=0.3, I1=2, I2=1,Theta=-1

7 . 0 ) 1 )( 1 0 ( 3 . 0 ) 1 (

2

+ + t w

2 ) 1 )( 1 0 ( 1 ) 1 ( + + t w

If we present this input again, wed output 0 instead

How might you use a perceptron

network?

This (and other networks) are generally used to learn how to

make classifications

Say you have collected some data regarding the diagnosis of

patients with heart disease

Age, Sex, Chest Pain Type, Resting BPS, Cholesterol, ,

Diagnosis (<50% diameter narrowing, >50% diameter narrowing)

67,1,4,120,229,, 1

37,1,3,130,250, ,0

41,0,2,130,204, ,0

Train network to predict heart disease of new patient

Perceptrons

Can add learning rate to speed up the learning process; just

multiply in with delta computation

Essentially a linear discriminant

Perceptron theorem: If a linear discriminant exists that can

separate the classes without error, the training procedure is

guaranteed to find that line or plane.

Class1

Class2

1

0

1

0

XOR Problem: Not Linearly Separable!

Exclusive Or (XOR) Problem

Input: 0,0 Output: 0

Input: 0,1 Output: 1

Input: 1,0 Output: 1

Input: 1,1 Output: 0

We could however construct multiple layers of perceptrons to get around

this problem. A typical multi-layered system minimizes LMS Error,

LMS Learning

LMS = Least Mean Square learning Systems, more general than the

previous perceptron learning rule. The concept is to minimize the total

error, as measured over all training examples, P. O is the raw output,

as calculated by

( )

P

P P

O T LMS ce Dis

2

2

1

) ( tan

E.g. if we have two patterns and

T1=1, O1=0.8, T2=0, O2=0.5 then D=(0.5)[(1-0.8)

2

+(0-0.5)

2

]=.145

We want to minimize the LMS:

E

W

W(old)

W(new)

C-learning rate

+

i

i i

I w

LMS Gradient Descent

Using LMS, we want to minimize the error. We can do this by finding

the direction on the error surface that most rapidly reduces the error

rate; this is finding the slope of the error function by taking the

derivative. The approach is called gradient descent (similar to hill

climbing).

k

k

w

Error

c w

To compute how much to change weight for link k:

k

j

j k

w

O

O

Error

w

Error

Chain rule:

) (

) (

2

1

) (

2

1

2

2

j j

j

j j

j

P

P P

j

O T

O

O T

O

O T

O

Error

We can remove the sum since we are taking the partial derivative wrt Oj

( ) ) (

'

k k k

k

j

W I Function Activation f I

w

O

( ) ( ) Function Activation f I O T c w

k j j k

' ) (

) ( W I f O

j

Activation Function

To apply the LMS learning rule, also known

as the delta rule, we need a differentiable

activation function.

( ) ( ) Function Activation f O T cI w

j j k k

'

'

> +

otherwise

I w

O

i

i i

: 0

0 : 1

Old:

New:

+

i

i i

I w

e

O

1

1

LMS vs. Limiting Threshold

With the new sigmoidal function that is differentiable, we

can apply the delta rule toward learning.

Perceptron Method

Forced output to 0 or 1, while LMS uses the net output

Guaranteed to separate, if no error and is linearly separable

Otherwise it may not converge

Gradient Descent Method:

May oscillate and not converge

May converge to wrong answer

Will converge to some minimum even if the classes are not

linearly separable, unlike the earlier perceptron training method

Backpropagation Networks

Attributed to Rumelhart and McClelland, late 70s

To bypass the linear classification problem, we can

construct multilayer networks. Typically we have fully

connected, feedforward networks.

I1

I2

1

Hidden Layer

H1

H2

O1

O2

Input Layer Output Layer

W

i,j

W

j,k

1s - bias

j

j x j

H w

e

x O

,

1

1

) (

I3

1

i

i x i

I w

e

x H

,

1

1

) (

Backprop - Learning

Learning Procedure:

Randomly assign weights (between 0-1)

Present inputs from training data, propagate to outputs

Compute outputs O, adjust weights according to the delta

rule, backpropagating the errors. The weights will be

nudged closer so that the network learns to give the desired

output.

Repeat; stop when no errors, or enough epochs completed

Backprop - Modifying Weights

( ) ( )

,

_

+

sum

j j k k

e

f Function Activation f O T cI w

1

1

; '

We had computed:

For the Output unit k, f(sum)=O(k). For the output units, this is:

( ) ) 1 (

, k k k k j k j

O O O T cH w

( )( ) ) ( 1 )( ( sum f sum f O T cI w

j j k k

I H O

W

i,j

W

j,k

For the Hidden units (skipping some math), this is:

k j

k

k k k k i j j j i

w O O O T I H cH w

, ,

) 1 ( ) ( ) 1 (

Backprop

Very powerful - can learn any function, given enough hidden

units! With enough hidden units, we can generate any

function.

Have the same problems of Generalization vs.

Memorization. With too many units, we will tend to

memorize the input and not generalize well. Some schemes

exist to prune the neural network.

Networks require extensive training, many parameters to

fiddle with. Can be extremely slow to train. May also fall

into local minima.

Inherently parallel algorithm, ideal for multiprocessor

hardware.

Despite the cons, a very powerful algorithm that has seen

widespread successful deployment.

Backprop Demo

QwikNet

Learning XOR, Sin/Cos functions

Unsupervised Learning

We just discussed a form of supervised learning

A teacher tells the network what the correct output is

based on the input until the network learns the target

concept

We can also train networks where there is no teacher.

This is called unsupervised learning. The

network learns a prototype based on the distribution

of patterns in the training data. Such networks allow

us to:

Discover underlying structure of the data

Encode or compress the data

Transform the data

Unsupervised Learning Hopfield

Networks

A Hopfield network is a type of content-

addressable memory

Non-linear system with attractor points that

represent concepts

Given a fuzzy input the system converges to the

nearest attractor

Possibility to have spurious attractors that is a blend

of multiple stored patterns

Also possible to have chaotic patterns that never

converge

Standard Binary Hopfield Network

Recurrent; Every unit is connected to every other unit

Weights connecting units are symmetrical

w

ij

= w

ji

If the weighted sum of the inputs exceeds a threshold, its

output is 1 otherwise its output is -1

Units update themselves asynchronously as their inputs

change

B

C

A

D

w

AD

w

AB

w

AC

w

BC

w

BD

w

CD

Hopfield Memories

Setting the weights:

A pattern is a setting of on or off for each unit

Given a set of Q patterns to store

For every weight connecting units i and j:

This is a form of a Hebbian rule which makes the weight

strength proportional to the product of the firing rates of the

two interconnected units

p

j

Q

p

p

i ij

x x w

1

Hopfield Network Demo

http://www.cbu.edu/~pong/ai/hopfield/hopfieldappl

et.html

Properties

Settles into a minimal energy state

Storage capacity low, only 13% of number of units

Can retrieve information even in the presence of noisy

data, similar to associative memory of humans

Unsupervised Learning Self

Organizing Maps

Self-organizing maps (SOMs) are a data

visualization technique invented by Professor

Teuvo Kohonen

Also called Kohonen Networks, Competitive Learning,

Winner-Take-All Learning

Generally reduces the dimensions of data through the

use of self-organizing neural networks

Useful for data visualization; humans cannot visualize

high dimensional data so this is often a useful technique

to make sense of large data sets

Basic Winner Take All Network

Two layer network

Input units, output units, each input unit is connected to each

output unit

I1

I2

O1

O2

Input Layer

W

i,j

I3

Output Layer

Basic Algorithm

Initialize Map (randomly assign weights)

Loop over training examples

Assign input unit values according to the values in the current

example

Find the winner, i.e. the output unit that most closely

matches the input units, using some distance metric, e.g.

Modify weights on the winner to more closely match the input

( )

2

1

n

i

i ij

I W

For all output units j=1 to m

and input units i=1 to n

Find the one that minimizes:

) (

1 t t

i

t

W X c W

+

where c is a small positive learning constant

that usually decreases as the learning proceeds

Result of Algorithm

Initially, some output nodes will randomly be a

little closer to some particular type of input

These nodes become winners and the weights

move them even closer to the inputs

Over time nodes in the output become

representative prototypes for examples in the input

Note there is no supervised training here

Classification:

Given new input, the class is the output node that is the

winner

Typical Usage: 2D Feature Map

In typical usage the output nodes form a 2D map

organized in a grid-like fashion and we update weights in a

neighborhood around the winner

I1

I2

Input Layer

I3

Output Layers

O11 O12 O13 O14 O15

O21 O22 O23 O24 O25

O31 O32 O33 O34 O35

O41 O42 O43 O44 O45

O51 O52 O53 O54 O55

Modified Algorithm

Initialize Map (randomly assign weights)

Loop over training examples

Assign input unit values according to the values in the current

example

Find the winner, i.e. the output unit that most closely

matches the input units, using some distance metric, e.g.

Modify weights on the winner to more closely match the input

Modify weights in a neighborhood around the winner so

the neighbors on the 2D map also become closer to the

input

Over time this will tend to cluster similar items closer on the map

Updating the Neighborhood

Node O

44

is the winner

Color indicates scaling to update neighbors

Output Layers

O11 O12 O13 O14 O15

O21 O22 O23 O24 O25

O31 O32 O33 O34 O35

O41 O42 O43 O44 O45

O51 O52 O53 O54 O55

) (

1 t t

i

t

W X c W

+

c=1

c=0.75

c=0.5

Consider if O

42

is winner for

some other

input; fight

over claiming

O

43

,

O

33

, O

53

Selecting the Neighborhood

Typically, a Sombrero Function or Gaussian

function is used

Neighborhood size usually decreases over time to

allow initial jockeying for position and then

fine-tuning as algorithm proceeds

Color Example

http://davis.wpi.edu/~matt/courses/soms/ap

plet.html

Kohonen Network Examples

Document Map:

http://websom.hut.fi/websom/milliondemo/

html/root.html

Poverty Map

http://www.cis.hut.fi/rese

arch/som-

research/worldmap.html

SOM for Classification

A generated map can also be used for classification

Human can assign a class to a data point, or use the

strongest weight as the prototype for the data point

For a new test case, calculate the winning node and classify

it as the class it is closest to

Handwriting recognition example: http://fbim.fh-

regensburg.de/~saj39122/begrolu/kohonen.html

Psychological and Biological

Considerations of Neural Networks

Psychological

Neural network models learn, exhibit some behavior similar to

humans, based loosely on brains

Create their own algorithms instead of being explicitly programmed

Operate under noisy data

Fault tolerant and graceful degradation

Knowledge is distributed, yet still some localization

Lashleys search for engrams

Biological

Learning in the visual cortex shortly after birth seems to correlate

with the pattern discrimination that emerges from Kohonen Networks

Criticisms of the mechanism to update weights; mathematically

driven; feedforward supervised network unrealistic

Connectionism

Whats hard for neural networks? Activities beyond

recognition, e.g.:

Variable binding

Recursion

Reflection

Structured representations

Connectionist and Symbolic Models

The Central Paradox of Cognition (Smolensky et al., 1992):

"Formal theories of logical reasoning, grammar, and other higher

mental faculties compel us to think of the mind as a machine for rule-

based manipulation of highly structured arrays of symbols. What we

know of the brain compels us to think of human information

processing in terms of manipulation of a large unstructured set of

numbers, the activity levels of interconnected neurons. Finally, the full

richness of human behavior, both in everyday environments and in the

controlled environments of the psychological laboratory, seems to defy

rule-based description, displaying strong sensitivity to subtle statistical

factors in experience, as well as to structural properties of information.

To solve the Central Paradox of Cognition is to resolve these

contradictions with a unified theory of the organization of the mind, of

the brain, of behavior, and of the environment."

Possible Relationships?

Symbolic systems implemented via

connectionism

Possible to create hierarchies of networks with

subnetworks to implement symbolic systems

Hybrid model

System consists of two separate components;

low-level tasks via connectionism, high-level

tasks via symbols

Proposed Hierarchical Model

Jeff Hawkins

Founder: Palm Computing, Handspring

Deep interest in the brain all his life

Book: On Intelligence

Variety of neuroscience research as input

Includes his own ideas, theories, guesses

Increasingly accepted view of the brain

The Cortex

Hawkinss point of interest in the brain

Where the magic happens

Hierarchically-arranged in regions

Communication up the hierarchy

Regions classify patterns of their inputs

Regions output a named pattern up the hierarchy

Communication down the hierarchy

A high-level region has made a prediction

Alerts lower-level regions what to expect

Hawkins Quotes

The human cortex is particularly large and

therefore has a massive memory capacity.

It is constantly predicting what you will see,

hear and feel, mostly in ways you are

unconscious of. These predictions are our

thoughts, and when combined with sensory

inputs, they are our perceptions. I call this

view of the brain the memory-prediction

framework of intelligence.

Hawkins Quotes

Your brain constantly makes predictions

about the very fabric of the world we live

in, and it does so in a parallel fashion. It

will just as readily detect an odd texture, a

misshapen nose, or an unusual motion. It

isnt obvious how pervasive these mostly

unconscious predictions are, which is

perhaps why we missed their importance.

Hawkins Quotes

Your brain constantly makes predictions

about the very fabric of the world we live

in, and it does so in a parallel fashion. It

will just as readily detect an odd texture, a

misshapen nose, or an unusual motion. It

isnt obvious how pervasive these mostly

unconscious predictions are, which is

perhaps why we missed their importance.

Hawkins Quotes

Suppose when you are out, I sneak over to

your home and change something about

your door. It could be almost anything. I

could move the knob over by and inch,

change a round knob into a thumb latch, or

turn it from brass to chrome. When you

come home that day and attempt to open the

door, you will quickly detect that

something is wrong.

Prediction

Prediction means that the neurons involved

in sensing your door become active in

advance of them actually receiving sensory

input.

When the sensory input does arrive, it is

compared with what is expected.

Two way communication; classification up the

hierarchy, prediction down the hierarchy

Prediction

Prediction is not limited to patterns of low-level

sensory information like hearing and seeing

Mountcastles principle : we have lots of

different neurons, but they basically do the

same thing (particularly in the neocortex)

What is true of low-level sensory areas must be true

for all cortical areas. The human brain is more

intelligent than that of other animals because it can

make predictions about more abstract kinds of

patterns and longer temporal pattern sequences.

Visual Hierarchies

Lowest visual level inputs pixels

Second level recognizes edges, lines, etc

from known patterns of pixels

Third level recognizes shapes from known

patterns of edges, lines, etc

Fourth level recognizes objects from known

patterns of shapes

Layers

Not there yet

Many issues remain to be addressed by

Hawkins model

Missing lots of details on how his model could

be implemented in a computer

Creativity?

Evolution?

Planning?

Rest of the brain, not just neocortex?

Links and Examples

http://davis.wpi.edu/~matt/courses/soms/ap

plet.html

http://websom.hut.fi/websom/milliondemo/

html/root.html

http://www.cis.hut.fi/research/som-

research/worldmap.html

http://www.patol.com/java/TSP/index.html

You might also like

- Competitive Learning: Fundamentals and Applications for Reinforcement Learning through CompetitionFrom EverandCompetitive Learning: Fundamentals and Applications for Reinforcement Learning through CompetitionNo ratings yet

- Back-Propagation Algorithm ExplainedDocument24 pagesBack-Propagation Algorithm ExplainedMyilvahanan JothivelNo ratings yet

- Long Short Term Memory: Fundamentals and Applications for Sequence PredictionFrom EverandLong Short Term Memory: Fundamentals and Applications for Sequence PredictionNo ratings yet

- Part7.2 Artificial Neural NetworksDocument51 pagesPart7.2 Artificial Neural NetworksHarris Punki MwangiNo ratings yet

- Ann Mid1: Artificial Neural Networks With Biological Neural Network - SimilarityDocument13 pagesAnn Mid1: Artificial Neural Networks With Biological Neural Network - SimilaritySirishaNo ratings yet

- MachineLearning Part5Document77 pagesMachineLearning Part5Raj ChauhanNo ratings yet

- Neural Networks: - Genetic Algorithms - Genetic Programming - Behavior-Based SystemsDocument74 pagesNeural Networks: - Genetic Algorithms - Genetic Programming - Behavior-Based SystemsMehlakNo ratings yet

- Understanding Neural Networks from Biological Inspiration to Technical ImplementationDocument6 pagesUnderstanding Neural Networks from Biological Inspiration to Technical Implementationbebo fayezNo ratings yet

- Neural NetworksDocument119 pagesNeural Networksmohanapriya100% (1)

- Introduction To Artificial Neural Networks and PerceptronDocument59 pagesIntroduction To Artificial Neural Networks and PerceptronPrathik NarayanNo ratings yet

- Convolutional Neural NetworksDocument21 pagesConvolutional Neural NetworksHarshaNo ratings yet

- Artificial Neural Networks: System That Can Acquire, Store, and Utilize Experiential KnowledgeDocument40 pagesArtificial Neural Networks: System That Can Acquire, Store, and Utilize Experiential KnowledgeKiran Moy Mandal100% (1)

- Machine Learning: Lecture 4: Artificial Neural Networks (Based On Chapter 4 of Mitchell T.., Machine Learning, 1997)Document14 pagesMachine Learning: Lecture 4: Artificial Neural Networks (Based On Chapter 4 of Mitchell T.., Machine Learning, 1997)harutyunNo ratings yet

- Uni2 NN 2023Document52 pagesUni2 NN 2023SONY P J 2248440No ratings yet

- ANN & FUZZY Techniques (MEPS303B) : Q1. Short Questions (Attempt Any 5)Document5 pagesANN & FUZZY Techniques (MEPS303B) : Q1. Short Questions (Attempt Any 5)nadeemq_0786No ratings yet

- Unit - 4Document26 pagesUnit - 4UNISA SAKHANo ratings yet

- Ci - Perceptron Networks1Document43 pagesCi - Perceptron Networks1Bhavisha SutharNo ratings yet

- ML Unit-2Document141 pagesML Unit-26644 HaripriyaNo ratings yet

- Networks of Artificial Neurons Single Layer PerceptronsDocument16 pagesNetworks of Artificial Neurons Single Layer PerceptronsSurenderMalanNo ratings yet

- Intro To Neural Nets PDFDocument29 pagesIntro To Neural Nets PDFjohnconnorNo ratings yet

- Understanding Neural Networks From Scratch in Python and RDocument12 pagesUnderstanding Neural Networks From Scratch in Python and RKhương NguyễnNo ratings yet

- Applicable Artificial Intelligence: Introduction To Neural NetworksDocument36 pagesApplicable Artificial Intelligence: Introduction To Neural Networksmuhammed suhailNo ratings yet

- cs4811 ch11 Neural Networks PDFDocument32 pagescs4811 ch11 Neural Networks PDFSurya RoGanda SitungkirNo ratings yet

- Introduction to Artificial Neural Networks (ANN) in 40 CharactersDocument42 pagesIntroduction to Artificial Neural Networks (ANN) in 40 CharactersRezaBachtiar100% (1)

- Assign 1 Soft CompDocument12 pagesAssign 1 Soft Compsharmasunishka30No ratings yet

- Introduction To Neural NetworkDocument20 pagesIntroduction To Neural NetworkSal SaadNo ratings yet

- HimanshuDocument22 pagesHimanshuHarsh Patidar39No ratings yet

- Fundamentals of Neural NetworksDocument62 pagesFundamentals of Neural NetworksDivya Aseeja100% (2)

- An Example To Understand A Neural Network ModelDocument39 pagesAn Example To Understand A Neural Network ModelFriday JonesNo ratings yet

- Machine Learning NNDocument16 pagesMachine Learning NNMegha100% (1)

- Anthony Kuh - Neural Networks and Learning TheoryDocument72 pagesAnthony Kuh - Neural Networks and Learning TheoryTuhmaNo ratings yet

- IndhufilesDocument17 pagesIndhufilesPrabu ManavalanNo ratings yet

- Fundamentals of Artificial Neural NetworksDocument27 pagesFundamentals of Artificial Neural Networksbhaskar rao mNo ratings yet

- ML Unit-IvDocument19 pagesML Unit-IvYadavilli VinayNo ratings yet

- ShayakDocument6 pagesShayakShayak RayNo ratings yet

- ML Unit - VDocument46 pagesML Unit - VHamsiNo ratings yet

- Neural NetworksDocument27 pagesNeural NetworksDania DallahNo ratings yet

- MLP 2Document12 pagesMLP 2KALYANpwnNo ratings yet

- Understanding Neural Networks From ScratchDocument15 pagesUnderstanding Neural Networks From Scratchsurajdhunna100% (1)

- Kevin Swingler - Lecture 4: Multi-Layer PerceptronsDocument20 pagesKevin Swingler - Lecture 4: Multi-Layer PerceptronsRoots999No ratings yet

- Institute For Advanced Management Systems Research Department of Information Technologies Abo Akademi UniversityDocument41 pagesInstitute For Advanced Management Systems Research Department of Information Technologies Abo Akademi UniversityKarthikeyanNo ratings yet

- Tutorial 6 1. List The Relationships Between Biological and Artificial Networks. Biological ArtificialDocument3 pagesTutorial 6 1. List The Relationships Between Biological and Artificial Networks. Biological ArtificialPeter KongNo ratings yet

- Deep Learning Algorithms Report PDFDocument11 pagesDeep Learning Algorithms Report PDFrohillaanshul12No ratings yet

- Uni2 NNDLDocument21 pagesUni2 NNDLSONY P J 2248440No ratings yet

- Neural Networks NotesDocument22 pagesNeural Networks NoteszomukozaNo ratings yet

- Introduction To Deep LearningDocument48 pagesIntroduction To Deep LearningEshan JainNo ratings yet

- ML Unit-IvDocument18 pagesML Unit-IvSBNo ratings yet

- Machine Learning: Lecture 4: Artificial Neural Networks (Based On Chapter 4 of Mitchell T.., Machine Learning, 1997)Document14 pagesMachine Learning: Lecture 4: Artificial Neural Networks (Based On Chapter 4 of Mitchell T.., Machine Learning, 1997)dollsicecreamNo ratings yet

- Nns Are A Study of Parallel and Distributed Processing Systems (PDPS)Document46 pagesNns Are A Study of Parallel and Distributed Processing Systems (PDPS)harutyunNo ratings yet

- ECSE484 Intro v2Document67 pagesECSE484 Intro v2Zaid SulaimanNo ratings yet

- Business Intelligence & Data Mining-10Document39 pagesBusiness Intelligence & Data Mining-10binzidd007No ratings yet

- Why Artificial Neural Networks?: Experiment 1 AIM: To Study The Basic Concept of Artificial Neural NetworkDocument32 pagesWhy Artificial Neural Networks?: Experiment 1 AIM: To Study The Basic Concept of Artificial Neural NetworkmanjeetNo ratings yet

- BTP Project ReportDocument13 pagesBTP Project ReportVandhana RathodNo ratings yet

- Handwritten Digits Recognition Using Neural NetworksDocument13 pagesHandwritten Digits Recognition Using Neural NetworksVandhana RathodNo ratings yet

- Backpropagation With ExampleDocument42 pagesBackpropagation With ExamplejayNo ratings yet

- Artificial Neural NetworksDocument26 pagesArtificial Neural Networksbt2014No ratings yet

- ML Session 15 BackpropagationDocument30 pagesML Session 15 Backpropagationkr8665894No ratings yet

- Artificial Neural Network in 40 CharactersDocument8 pagesArtificial Neural Network in 40 CharactersMaryam FarisNo ratings yet

- Introduction To Neural Networks - Chapter1Document43 pagesIntroduction To Neural Networks - Chapter1wondi BETNo ratings yet

- Q: What Is ANN?: X H X H X H X X X HDocument9 pagesQ: What Is ANN?: X H X H X H X X X HMuhammad Ahsan AkhtarNo ratings yet

- Testing and Debugging: Deepti ChandraDocument81 pagesTesting and Debugging: Deepti ChandraDeepti Chandra VermaNo ratings yet

- Lisp TutorialDocument145 pagesLisp TutorialLê Quang Long0% (1)

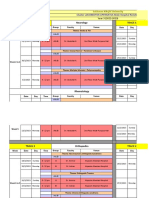

- E-Commerce Syllabus and FrameworkDocument91 pagesE-Commerce Syllabus and Frameworkpavanjammula100% (5)

- M. C. A (Fifth Semester) Examination, April-May 2019: Printed Pages-4Document4 pagesM. C. A (Fifth Semester) Examination, April-May 2019: Printed Pages-4Deepti Chandra VermaNo ratings yet

- UNIT 4: Network Security: E-Commerce PerspectiveDocument124 pagesUNIT 4: Network Security: E-Commerce PerspectiveDeepti Chandra VermaNo ratings yet

- 3 150916093649 Lva1 App6892 PDFDocument21 pages3 150916093649 Lva1 App6892 PDFDeepti Chandra VermaNo ratings yet

- 3 150916093649 Lva1 App6892 PDFDocument21 pages3 150916093649 Lva1 App6892 PDFDeepti Chandra VermaNo ratings yet

- Electronic Data Interchange 119329341512366 1Document67 pagesElectronic Data Interchange 119329341512366 1IshtiaqHamidSajalNo ratings yet

- 9 TcpipDocument52 pages9 TcpipDeepti Chandra VermaNo ratings yet

- 9 TcpipDocument52 pages9 TcpipDeepti Chandra VermaNo ratings yet

- Image RestorationDocument23 pagesImage RestorationDeepti Chandra VermaNo ratings yet

- Different Types of ResearchDocument2 pagesDifferent Types of ResearchDeepti Chandra VermaNo ratings yet

- Ischialgia 4yuli FixDocument20 pagesIschialgia 4yuli FixLindapratama SaifuddinNo ratings yet

- Dementia: Role of MRI: Updated VersionDocument30 pagesDementia: Role of MRI: Updated VersionAnonymous 61SwB8efNo ratings yet

- Self-Projection and The Brain: Randy L. Buckner and Daniel C. CarrollDocument9 pagesSelf-Projection and The Brain: Randy L. Buckner and Daniel C. CarrollJOHNATIELNo ratings yet

- Anatomy - Nervous System - Spinal Cord and Motor and Sensory PathwaysDocument43 pagesAnatomy - Nervous System - Spinal Cord and Motor and Sensory PathwaysYAMINIPRIYAN100% (1)

- Managing Phamtom PainDocument12 pagesManaging Phamtom PainjesusmonNo ratings yet

- Brazilian Journal of Physical TherapyDocument11 pagesBrazilian Journal of Physical TherapyilhamNo ratings yet

- Anatomy One Pager G&aDocument2 pagesAnatomy One Pager G&aJennaNo ratings yet

- 10 Science Notes 07 Control and Coordination 1Document8 pages10 Science Notes 07 Control and Coordination 1All Rounder HindustanNo ratings yet

- PainDocument20 pagesPainvinnu kalyanNo ratings yet

- A Complete Neurological Nursing Assessment GuideDocument57 pagesA Complete Neurological Nursing Assessment GuideSarithaRajeshNo ratings yet

- Coordination and Response BiologyDocument2 pagesCoordination and Response BiologyChrispin MsofeNo ratings yet

- Modul Sains SPM ObjektifDocument60 pagesModul Sains SPM ObjektifTheMovingFingerNo ratings yet

- Menieres DiseaseDocument43 pagesMenieres DiseaseChlodette Eizl M. Laurente100% (4)

- Case Report: Supevisor: Dr. Irma Tarida Listiawati, SP - KK By: Alif Ramadhan, S.KedDocument22 pagesCase Report: Supevisor: Dr. Irma Tarida Listiawati, SP - KK By: Alif Ramadhan, S.Kedpang_paangNo ratings yet

- Arterial Territories of The Human Brain Cerebral HemispheresDocument12 pagesArterial Territories of The Human Brain Cerebral HemispheresErika SanchezNo ratings yet

- Trace Decay Theory of ForgettingDocument3 pagesTrace Decay Theory of ForgettingJecel Biagan100% (2)

- Neural Control N Coordination-NotesDocument5 pagesNeural Control N Coordination-Notessudeep27krNo ratings yet

- Topografska anatomija moždane kore i limbičkih područjaDocument194 pagesTopografska anatomija moždane kore i limbičkih područjaGoran SimicNo ratings yet

- Nervous System Tumor Types and CharacteristicsDocument50 pagesNervous System Tumor Types and CharacteristicsNininghrNo ratings yet

- Fever PathophysiologyDocument9 pagesFever PathophysiologyNadya AzzahraNo ratings yet

- Otvori Glave PDFDocument1 pageOtvori Glave PDFAnnieNo ratings yet

- Dr. Diana Hylton, MD: Neurology - Female - Age 69Document13 pagesDr. Diana Hylton, MD: Neurology - Female - Age 69aroundtheclockNo ratings yet

- Sulaiman AlRajhi University Neurology & Orthopedics Hospital Rotation ScheduleDocument10 pagesSulaiman AlRajhi University Neurology & Orthopedics Hospital Rotation ScheduleAbdullah MelhimNo ratings yet

- RPMS DETAILED PRESENTATIONDocument59 pagesRPMS DETAILED PRESENTATIONmageshNo ratings yet

- Demyelinating DiseasesDocument15 pagesDemyelinating DiseasesMarikaniNo ratings yet

- Humans use receptors to help them respondDocument20 pagesHumans use receptors to help them respondshakeel shahulNo ratings yet

- Ieee AUTOMATIC NUMBER-PLATE RECOGNITION VNIS.1994.396858-NEURAL NETWORK APPROACHDocument3 pagesIeee AUTOMATIC NUMBER-PLATE RECOGNITION VNIS.1994.396858-NEURAL NETWORK APPROACHEdikan AkpanNo ratings yet

- Norris Claire White Paper Liftware PDFDocument14 pagesNorris Claire White Paper Liftware PDFmeysam NaaNo ratings yet

- Anat100 Tutorial 1Document2 pagesAnat100 Tutorial 1Teni LawalNo ratings yet

- Human Brain Facts by The NumbersDocument15 pagesHuman Brain Facts by The Numberswali4No ratings yet