Probability Theory: Much Inspired by The Presentation of Kren and Samuelsson

Uploaded by

duylinh65

Probability theory

Much inspired by the presentation of

Kren and Samuelsson

3 views of probability

Frequentist

Mathematical

Bayesian (knowledge-based)

Sample space

A universe of elementary outcomes. In

elementary treatments, we pretend that we

can come up with sets of equiprobable

outcomes (dice, coins, ...). Outcomes are

very small.

An event is a set of those outcomes. Events

are bigger than outcomes -- more

interesting.

Probability measure

Every event (=set of outcomes) is assigned

a probability, by a function we call a

probability measure.

The probability of every set is between 0

and 1, inclusive.

The probability of the whole set of

outcomes is 1.

If A and B are two events with no common

outcomes, then the probability of their

union is the sum of their probabilities.

Cards

Our universe of outcomes is single card

pulls.

Events: a red card (1/2); a jack (1/13);
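The card example can be checked by brute-force enumeration. This is only a sketch: it assumes a standard 52-card deck with every card equally likely, and the names `DECK` and `prob` are made up for illustration.

```python
from fractions import Fraction
from itertools import product

# Sample space: the 52 single-card pulls, assumed equally likely.
RANKS = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
SUITS = ["hearts", "diamonds", "clubs", "spades"]
DECK = set(product(RANKS, SUITS))

def prob(event):
    """Probability measure: the fraction of equiprobable outcomes in the event."""
    return Fraction(len(event), len(DECK))

# Events are just sets of outcomes.
red = {card for card in DECK if card[1] in ("hearts", "diamonds")}
jack = {card for card in DECK if card[0] == "J"}

print(prob(red))   # 1/2
print(prob(jack))  # 1/13
print(prob(DECK))  # 1  (the whole set of outcomes)
```

Using `Fraction` keeps the answers exact, so they can be compared directly with the values on the slide.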

Other things to remember

The probability that event P will not happen

(=event ~P will happen) is 1-prob(P).

Prob (null outcome) = 0.

p(A ∪ B) = p(A) + p(B) - p(A ∩ B).
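These three rules are easy to verify on a small sample space. The sketch below assumes one roll of a fair die; the events `even` and `at_least_five` are arbitrary choices for illustration.

```python
from fractions import Fraction

OUTCOMES = {1, 2, 3, 4, 5, 6}  # one roll of a fair die (assumed equiprobable)

def prob(event):
    """Fraction of the equiprobable outcomes that lie in the event."""
    return Fraction(len(event & OUTCOMES), len(OUTCOMES))

even = {2, 4, 6}
at_least_five = {5, 6}

# Complement rule: prob(~P) = 1 - prob(P)
print(prob(OUTCOMES - even), 1 - prob(even))  # 1/2 1/2

# The empty event has probability 0
print(prob(set()))  # 0

# Union rule: subtract the overlap so it is not counted twice
lhs = prob(even | at_least_five)
rhs = prob(even) + prob(at_least_five) - prob(even & at_least_five)
print(lhs, rhs)  # 2/3 2/3
```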

Independence (definition)

Two events A and B are independent if the probability of A ∩ B = probability of A times the probability of B (that is, p(A) * p(B)).
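A quick way to see the definition at work is to test pairs of card events. This sketch again assumes a standard, equiprobable 52-card deck; `independent` is a hypothetical helper name.

```python
from fractions import Fraction
from itertools import product

RANKS = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
SUITS = ["hearts", "diamonds", "clubs", "spades"]
DECK = set(product(RANKS, SUITS))

def prob(event):
    return Fraction(len(event), len(DECK))

def independent(a, b):
    """True when the probability of the intersection equals p(a) * p(b)."""
    return prob(a & b) == prob(a) * prob(b)

red = {card for card in DECK if card[1] in ("hearts", "diamonds")}
jack = {card for card in DECK if card[0] == "J"}
hearts = {card for card in DECK if card[1] == "hearts"}

print(independent(red, jack))    # True:  2/52 == 1/2 * 1/13
print(independent(red, hearts))  # False: 13/52 != 1/2 * 1/4
```

Knowing that a card is red tells you nothing about whether it is a jack, but it does change the odds that it is a heart.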

Conditional probability

This means: what's the probability of A if I

already know B is true?

p(A|B) = p(A and B) / p(B) = p(A ∩ B) / p(B)

Probability of A given B.

p(A) is the prior probability; p(A|B) is called

a posterior probability. Once you know B is

true, the universe you care about shrinks to

B.
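The "shrinking universe" idea can be sketched in a few lines. The example assumes a fair die; `cond_prob` is an illustrative name, not anything from the original slides.

```python
from fractions import Fraction

OUTCOMES = {1, 2, 3, 4, 5, 6}  # one roll of a fair die (assumed equiprobable)

def prob(event):
    return Fraction(len(event & OUTCOMES), len(OUTCOMES))

def cond_prob(a, b):
    """p(a|b) = p(a and b) / p(b); only meaningful when p(b) > 0."""
    return prob(a & b) / prob(b)

six = {6}
even = {2, 4, 6}

print(prob(six))             # 1/6  (prior)
print(cond_prob(six, even))  # 1/3  (posterior, once we know the roll is even)
```

Once we know the roll is even, only three outcomes remain in play, and a six is one of them.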

Bayes' rule

prob (A and B) = prob (B and A); so

prob (A |B) prob (B) = prob (B|A) prob (A)

-- just using the definition of prob (X|Y);

hence

$$\mathrm{prob}(A \mid B) = \frac{\mathrm{prob}(B \mid A)\,\mathrm{prob}(A)}{\mathrm{prob}(B)}$$

Bayes' rule as scientific reasoning

A hypothesis H which is supported by a set

of data D merits our belief to the degree

that:

1. We believed H before we learned about

D;

2. H predicts data D; and

3. D is unlikely.
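The three conditions correspond to the three factors in Bayes' rule: prob(H|D) = prob(D|H) prob(H) / prob(D). The sketch below uses made-up numbers and gets prob(D) from the law of total probability, which is an added assumption about how D can arise (either with H or without it).

```python
from fractions import Fraction

def posterior(prior_h, p_d_given_h, p_d_given_not_h):
    """Bayes' rule: p(H|D) = p(D|H) * p(H) / p(D)."""
    # p(D) summed over the two ways D can happen: with H, or without it.
    p_d = p_d_given_h * prior_h + p_d_given_not_h * (1 - prior_h)
    return p_d_given_h * prior_h / p_d

# Hypothetical numbers, chosen only for illustration.
prior = Fraction(1, 100)    # 1. how much we believed H beforehand
predicts = Fraction(9, 10)  # 2. how strongly H predicts D

# 3. the rarer D is when H is false, the more D supports H
print(posterior(prior, predicts, Fraction(1, 20)))   # 2/13
print(posterior(prior, predicts, Fraction(1, 200)))  # 20/31
```

Making D ten times less likely in the absence of H lifts the posterior from 2/13 to 20/31, which is condition 3 at work.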

A random variable

a.k.a. stochastic variable.

A random variable isn't a variable. It's a

function. It maps from the sample space to

the real numbers. This is a convenience: it is

our way of translating events (whatever

they are) to numbers.
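In code, the point that a random variable is a function becomes literal. This sketch assumes a sample space of two coin tosses; `num_heads` is an illustrative random variable.

```python
from itertools import product

# Sample space: every outcome of two coin tosses.
SAMPLE_SPACE = list(product("HT", repeat=2))

def num_heads(outcome):
    """A random variable: an ordinary function from outcomes to real numbers."""
    return outcome.count("H")

for outcome in SAMPLE_SPACE:
    print(outcome, "->", num_heads(outcome))
```

The outcomes themselves are tuples of letters; `num_heads` is what turns them into numbers we can average or plot.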

Distribution function

This is a function that takes a real number x as its input, finds all those outcomes in the sample space that map onto x or anything less than x, and returns their total probability: F(x) = p(X ≤ x).

For a die, F(0) = 0; F(1) = 1/6; F(2) = 1/3;

F(3) = 1/2; F(4) = 2/3; F(5) = 5/6; and F(6)

= F(7) = 1.
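The die values can be reproduced with a short sketch. It assumes a fair six-sided die whose random variable is simply the face value; `F` follows the slide's notation.

```python
from fractions import Fraction

FACES = [1, 2, 3, 4, 5, 6]  # fair die, each face with probability 1/6

def F(x):
    """Distribution function: total probability of the faces that are <= x."""
    return Fraction(sum(1 for face in FACES if face <= x), len(FACES))

print([str(F(x)) for x in range(0, 8)])
# ['0', '1/6', '1/3', '1/2', '2/3', '5/6', '1', '1']
```

F is defined for every real input, not just the faces: `F(2.5)` is still 1/3, because only the faces 1 and 2 lie at or below 2.5.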

discrete distribution function

discrete, continuous

If the set of values that the distribution

function takes on is finite, or countable,

then the random variable (which isn't a

variable, it's a function) is discrete; otherwise

it's continuous (also, it ought to be mostly

differentiable).

Distribution function aggregates

It's a little bit counterintuitive, in a way. What about a function P for a die that tells us that P(1) = 1/6, P(2) = 1/6, ..., P(6) = 1/6?

That's a frequency function, or probability

function. We'll use the letter f for this. For the

case of continuous variables, we don't want to

ask what the probability of "1/6" is, because the

answer is always 0...

Rather, we ask what's the probability that

the value is in the interval (a,b) -- that's OK.

So for continuous variables, we care about

the derivative of the distribution function at

a point (that's the derivative of an integral,

after all...). This is called a probability

density function. The probability that a

random variable has a value in a set A is the

integral of the p.d.f. over that set A.
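Here is a small numerical sketch of the relationship between a distribution function and its density. The particular variable is invented for illustration: it lives on [0, 1] with F(x) = x², so its density is f(x) = 2x. The `integrate` helper is a plain midpoint rule, not a library call.

```python
def cdf(x):
    """Distribution function of an illustrative variable on [0, 1]: F(x) = x**2."""
    if x < 0.0:
        return 0.0
    if x > 1.0:
        return 1.0
    return x * x

def pdf(x):
    """Its derivative, the probability density: f(x) = 2x on [0, 1], else 0."""
    return 2.0 * x if 0.0 <= x <= 1.0 else 0.0

def integrate(f, a, b, steps=10_000):
    """Midpoint-rule approximation of the integral of f over (a, b)."""
    width = (b - a) / steps
    return sum(f(a + (i + 0.5) * width) for i in range(steps)) * width

a, b = 0.2, 0.5
print(integrate(pdf, a, b))      # approximately 0.21
print(cdf(b) - cdf(a))           # approximately 0.21, the same probability
print(integrate(pdf, 0.3, 0.3))  # 0.0: a single point has probability zero
print(integrate(pdf, 0.0, 1.0))  # approximately 1
```

Integrating the density over an interval gives the same number as differencing the distribution function at its endpoints.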

Frequency function f

The sum of the values of the frequency

function f must add up to 1!

The integral of the probability density

function must be 1.

A set of non-negative numbers that adds up to 1 is called a distribution.

Means that have nothing to do

with meaning

The mean is the average; in everyday terms, we

add all the values and divide by the number of

items. The symbol is 'E', for 'expected' (why is the

mean expected? What else would you expect?)

Since the frequency function f tells you what fraction of the items have any particular value, the mean is

$$\sum_i x_i \, f(x_i)$$
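For a concrete case, the mean of a fair die is each face value weighted by its frequency. A minimal sketch, assuming each face has frequency 1/6:

```python
from fractions import Fraction

# Frequency function of a fair die: value -> probability.
f = {face: Fraction(1, 6) for face in range(1, 7)}

# The frequencies must add up to 1.
print(sum(f.values()))  # 1

# Mean: each value weighted by how often it occurs.
mean = sum(x * p for x, p in f.items())
print(mean)  # 7/2
```

The mean, 3.5, is not a value the die can ever show, which is one reason "expected" is a slightly odd name for it.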

Weight a moment...

The mean is the first moment; the second moment (taken about the mean) is the variance, which tells you how much the random variable jiggles. It's the sum of the squared differences from the mean, each weighted by f (we square those differences so they're positive). The square root of this is the standard deviation. (We don't divide by N here; that's inside the f-function, remember?)

$$\sum_i (x_i - \mu)^2 \, f(x_i)$$

where $\mu$ is the mean.
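The same fair-die example works for the variance. This sketch assumes frequency 1/6 per face and weights each squared difference from the mean by that frequency, so there is no separate division by N.

```python
import math
from fractions import Fraction

# Frequency function of a fair die: value -> probability.
f = {face: Fraction(1, 6) for face in range(1, 7)}

mean = sum(x * p for x, p in f.items())

# Variance: squared differences from the mean, weighted by f.
variance = sum((x - mean) ** 2 * p for x, p in f.items())

# Standard deviation: the square root of the variance.
std_dev = math.sqrt(variance)

print(variance)  # 35/12
print(std_dev)   # roughly 1.71
```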

Particular probability

distributions:

Binomial

Gaussian, also known as normal

Poisson

Binomial distribution

If we run an experiment n times

(independently: simultaneous or not, we

don't care), and we care only about how

many times altogether a particular outcome

occurs -- that's a binomial distribution, with

2 parameters: the probability p of that

outcome on a single trial, and n the number

of trials.

If you toss a coin 4 times, what's the

probability that you'll get 3 heads?

If you draw a card 5 times (with

replacement), what's the probability that

you'll get exactly 1 ace?

If you generate words randomly, what's the

probability that you'll have two the's in the

first 10 words?

In general, the answer is

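$$\binom{n}{k} p^k q^{n-k} = \frac{n!}{k!\,(n-k)!}\, p^k q^{n-k}$$

where k is the number of times the outcome occurs and q = 1 - p is the probability that it does not occur on a single trial.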
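The questions above can be answered with a direct transcription of the formula. This is a sketch: `binomial_pmf` is an illustrative name, the coin is assumed fair, the deck is assumed standard (4 aces in 52 cards), and the frequency of "the" (0.06) is a made-up figure, since the slides do not give one.

```python
from fractions import Fraction
from math import comb

def binomial_pmf(k, n, p):
    """Probability of exactly k occurrences in n independent trials."""
    q = 1 - p
    return comb(n, k) * p**k * q**(n - k)

# 3 heads in 4 tosses of a fair coin
print(binomial_pmf(3, 4, Fraction(1, 2)))   # 1/4

# exactly 1 ace in 5 draws with replacement (p = 4/52 = 1/13)
print(binomial_pmf(1, 5, Fraction(1, 13)))  # 103680/371293

# two the's in 10 random words, ASSUMING "the" has probability 0.06 per word
print(binomial_pmf(2, 10, 0.06))

# The probabilities over all possible k add up to 1, as a distribution must.
print(sum(binomial_pmf(k, 4, Fraction(1, 2)) for k in range(5)))  # 1
```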

Normal or Gaussian distribution

Start off with something simple, like this:

$$e^{-x^2}$$

That's symmetric around the y-axis (negative and positive x are treated the same way). If x = 0, then the value is 1, and it slides to 0 as you go off to infinity, either positive or negative.

Gaussian or normal distribution

Well, x's average can be something other than 0: it can be any old μ:

$$e^{-(x-\mu)^2}$$

And its variance (σ²) can be other than 1:

$$e^{-\frac{(x-\mu)^2}{2\sigma^2}}$$

And then normalize--

so that it all adds up (integrates, really) to 1,

we have to divide by a normalizing factor:

$$\frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(x-\mu)^2}{2\sigma^2}}$$
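As a sanity check on the normalizing factor, the density can be integrated numerically. This is a sketch: `normal_pdf` and `integrate` are illustrative helpers, the values of mu and sigma are arbitrary, and the integral is cut off at 10 standard deviations on each side, beyond which the contribution is negligible.

```python
import math

def normal_pdf(x, mu=0.0, sigma=1.0):
    """Gaussian density with mean mu and standard deviation sigma."""
    exponent = -((x - mu) ** 2) / (2 * sigma**2)
    return math.exp(exponent) / (sigma * math.sqrt(2 * math.pi))

def integrate(f, a, b, steps=20_000):
    """Midpoint-rule approximation of the integral of f over (a, b)."""
    width = (b - a) / steps
    return sum(f(a + (i + 0.5) * width) for i in range(steps)) * width

mu, sigma = 1.5, 2.0  # arbitrary illustrative parameters

# Symmetric around the mean: equal distances on either side give equal heights.
print(normal_pdf(mu + 1.0, mu, sigma), normal_pdf(mu - 1.0, mu, sigma))

# With the normalizing factor in place, the total area is (very nearly) 1.
total = integrate(lambda x: normal_pdf(x, mu, sigma), mu - 10 * sigma, mu + 10 * sigma)
print(total)
```

Dropping the `sigma * math.sqrt(2 * math.pi)` divisor leaves the same bell shape, but its area is no longer 1, so it is no longer a probability density.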

You might also like

- CHP 5Document63 pagesCHP 5its9918kNo ratings yet

- ECN-511 Random Variables 11Document106 pagesECN-511 Random Variables 11jaiswal.mohit27No ratings yet

- Probability DistributionDocument69 pagesProbability DistributionAwasthi Shivani75% (4)

- Probability PresentationDocument26 pagesProbability PresentationNada KamalNo ratings yet

- Introduction To Probability: Arindam ChatterjeeDocument21 pagesIntroduction To Probability: Arindam ChatterjeeJAYATI MAHESHWARINo ratings yet

- MATH RECITATION CRYPTOGRAPHY APPROXIMATIONSDocument4 pagesMATH RECITATION CRYPTOGRAPHY APPROXIMATIONSMichael YurkoNo ratings yet

- The Poisson DistributionDocument9 pagesThe Poisson Distributionsyedah1985No ratings yet

- Intro To Stats RecapDocument43 pagesIntro To Stats RecapAbhay ItaliyaNo ratings yet

- Statistical Methods in Quality ManagementDocument71 pagesStatistical Methods in Quality ManagementKurtNo ratings yet

- Random Variables: Petter Mostad 2005.09.19Document24 pagesRandom Variables: Petter Mostad 2005.09.19liteepanNo ratings yet

- BIOSTAT Random Variables & Probability DistributionDocument37 pagesBIOSTAT Random Variables & Probability DistributionAnonymous Xlpj86laNo ratings yet

- Imprecise Probability in Risk Analysis (PDFDrive) PDFDocument48 pagesImprecise Probability in Risk Analysis (PDFDrive) PDFBabak Esmailzadeh HakimiNo ratings yet

- OpenStax Statistics CH04 LectureSlides - IsuDocument48 pagesOpenStax Statistics CH04 LectureSlides - IsuAtiwag, Micha T.No ratings yet

- Unit 03 - Random Variables - 1 Per PageDocument47 pagesUnit 03 - Random Variables - 1 Per PageJonathan M.No ratings yet

- Distribution: Dr. H. Gladius Jennifer Associate Professor SPH, SrmistDocument23 pagesDistribution: Dr. H. Gladius Jennifer Associate Professor SPH, SrmistCharan KNo ratings yet

- Mathematics in Machine LearningDocument83 pagesMathematics in Machine LearningSubha OPNo ratings yet

- Evidence 2.1 2.3Document10 pagesEvidence 2.1 2.3alan ErivesNo ratings yet

- s2 Revision NotesDocument5 pagess2 Revision NotesAlex KingstonNo ratings yet

- A (Very) Brief Review of Statistical Inference: 1 Some PreliminariesDocument9 pagesA (Very) Brief Review of Statistical Inference: 1 Some PreliminarieswhatisnameNo ratings yet

- Probability and DistributionDocument43 pagesProbability and DistributionANKUR ARYANo ratings yet

- ProbabilityDocument24 pagesProbabilityMahatma NarendranitiNo ratings yet

- Statistical Analysis in GeneticsDocument21 pagesStatistical Analysis in GeneticsWONG JIE MIN MoeNo ratings yet

- Distribution of Random Variables Discrete Random VariablesDocument22 pagesDistribution of Random Variables Discrete Random VariablesAbdallahNo ratings yet

- LECTURE 02-Probability IE 3373 - ALDocument44 pagesLECTURE 02-Probability IE 3373 - ALMahmoud AbdelazizNo ratings yet

- Lec 07Document51 pagesLec 07Tùng ĐàoNo ratings yet

- Unit 4Document45 pagesUnit 4Viji MNo ratings yet

- Instructions For Chapter 5-By Dr. Guru-Gharana The Binomial Distribution Random VariableDocument10 pagesInstructions For Chapter 5-By Dr. Guru-Gharana The Binomial Distribution Random Variablevacca777No ratings yet

- Statistical Methods in Quality ManagementDocument71 pagesStatistical Methods in Quality ManagementKurtNo ratings yet

- 5 Probability DistributionsDocument88 pages5 Probability Distributionsnatiphbasha2015No ratings yet

- L5 Probability & Probability DistributionDocument44 pagesL5 Probability & Probability DistributionASHENAFI LEMESANo ratings yet

- Christian Notes For Exam PDocument9 pagesChristian Notes For Exam Proy_getty100% (1)

- Expected Value:) P (X X X EDocument28 pagesExpected Value:) P (X X X EQuyên Nguyễn HảiNo ratings yet

- BPT-Probability-binomia Distribution, Poisson Distribution, Normal Distribution and Chi Square TestDocument41 pagesBPT-Probability-binomia Distribution, Poisson Distribution, Normal Distribution and Chi Square TestAjju NagarNo ratings yet

- Lecture 3 Probability DistributionDocument25 pagesLecture 3 Probability Distributionlondindlovu410No ratings yet

- Part IA - Probability: Based On Lectures by R. WeberDocument78 pagesPart IA - Probability: Based On Lectures by R. WeberFVRNo ratings yet

- BiostDocument73 pagesBiostDheressaaNo ratings yet

- RV and DistributionsDocument81 pagesRV and DistributionsSai Mohnish MuralidharanNo ratings yet

- Lec 02 Bayesian Decision Theoryv 2024Document143 pagesLec 02 Bayesian Decision Theoryv 2024wstybhNo ratings yet

- Dealing With Uncertainty P (X - E) : Probability Theory The Foundation of StatisticsDocument34 pagesDealing With Uncertainty P (X - E) : Probability Theory The Foundation of StatisticsChittaranjan PaniNo ratings yet

- ProbablityDocument312 pagesProbablityernest104100% (1)

- MODULE 1 - Random Variables and Probability DistributionsDocument12 pagesMODULE 1 - Random Variables and Probability DistributionsJimkenneth RanesNo ratings yet

- Chapter 3 - Special Probability DistributionsDocument45 pagesChapter 3 - Special Probability Distributionsjared demissieNo ratings yet

- Probability Distributions Explained: Elementary Probability Theory, Rules, Bayes Theorem & MoreDocument47 pagesProbability Distributions Explained: Elementary Probability Theory, Rules, Bayes Theorem & MorePrudhvi raj Panga creationsNo ratings yet

- ProbabilityDistributions 61Document61 pagesProbabilityDistributions 61Rmro Chefo LuigiNo ratings yet

- Week4 BAMDocument28 pagesWeek4 BAMrajaayyappan317No ratings yet

- Discrete Probability DistributionsDocument46 pagesDiscrete Probability DistributionsGaurav KhalaseNo ratings yet

- ProbablityDocument310 pagesProbablityPriyaprasad PandaNo ratings yet

- Prob StatDocument46 pagesProb StatShinyDharNo ratings yet

- Probability Distributions in 40 CharactersDocument24 pagesProbability Distributions in 40 CharactersVikas YadavNo ratings yet

- Random Variables and Probability DistributionsDocument45 pagesRandom Variables and Probability DistributionsChidvi ReddyNo ratings yet

- Practical Data Science: Basic Concepts of ProbabilityDocument5 pagesPractical Data Science: Basic Concepts of ProbabilityRioja Anna MilcaNo ratings yet

- Probability Lecture Notes: 1 DefinitionsDocument8 pagesProbability Lecture Notes: 1 Definitionstalha khalidNo ratings yet

- Mathematics Promotional Exam Cheat SheetDocument8 pagesMathematics Promotional Exam Cheat SheetAlan AwNo ratings yet

- Tài liệu 5Document19 pagesTài liệu 5Nguyễn Thị Diễm HươngNo ratings yet

- Module 4 - v1Document84 pagesModule 4 - v1Shubham PathakNo ratings yet

- Quantitative TechniquesDocument31 pagesQuantitative TechniquesYashasvini ReddyNo ratings yet

- Radically Elementary Probability Theory. (AM-117), Volume 117From EverandRadically Elementary Probability Theory. (AM-117), Volume 117Rating: 4 out of 5 stars4/5 (2)

- Proceedings O - Sedgewick Robert Et Al PDFDocument268 pagesProceedings O - Sedgewick Robert Et Al PDFduylinh65No ratings yet

- Toanhoc Va Nhung Suy Luan Co Li PDFDocument260 pagesToanhoc Va Nhung Suy Luan Co Li PDFPham Thi Thanh ThuyNo ratings yet

- Implementing Quicksort ProgramsDocument11 pagesImplementing Quicksort Programsravg10No ratings yet

- SangtaotoanhocDocument410 pagesSangtaotoanhocduylinh65100% (1)

- ThocokhitoanhocDocument240 pagesThocokhitoanhocduylinh65No ratings yet

- Gardner, Martin - The Colossal Book of MathematicsDocument742 pagesGardner, Martin - The Colossal Book of MathematicsArael SidhNo ratings yet

- Giai Mot Bai Toan Nhu The Nao PDFDocument152 pagesGiai Mot Bai Toan Nhu The Nao PDFPham Thi Thanh ThuyNo ratings yet

- Advanced CalculusDocument592 pagesAdvanced Calculusjosemarcelod7088No ratings yet

- Programming Pearls PDFDocument195 pagesProgramming Pearls PDFduylinh65100% (2)

- 03report TaoDocument6 pages03report Taoduylinh65No ratings yet

- Toanhoc Va Nhung Suy Luan Co Li PDFDocument260 pagesToanhoc Va Nhung Suy Luan Co Li PDFPham Thi Thanh ThuyNo ratings yet

- 1sedgewick Robert Implementing Quicksort ProgramsDocument11 pages1sedgewick Robert Implementing Quicksort Programsduylinh65No ratings yet

- Trilogy 1Document7 pagesTrilogy 1duylinh65No ratings yet

- 03report TaoDocument6 pages03report Taoduylinh65No ratings yet

- What Is Good Mathematics - Terence TaoDocument11 pagesWhat Is Good Mathematics - Terence Taoduylinh65No ratings yet

- Tao An Epsilon of RoomDocument689 pagesTao An Epsilon of RoomMichael Raba100% (1)

- Tao T On Spectral Methods For Volterra Integral Equations AnDocument13 pagesTao T On Spectral Methods For Volterra Integral Equations Anduylinh65No ratings yet

- Carleman Bao Quan YuanDocument4 pagesCarleman Bao Quan Yuanduylinh65No ratings yet

- Is There Something That Bothers or Preoccupies You?Document13 pagesIs There Something That Bothers or Preoccupies You?duylinh65No ratings yet

- College Level Math Study GuideDocument22 pagesCollege Level Math Study GuideNoel Sanchez100% (1)

- On A Strengthened Hardy-Hilbert Inequality - Bicheng YangDocument14 pagesOn A Strengthened Hardy-Hilbert Inequality - Bicheng Yangduylinh65No ratings yet

- Ron Mueck Amazing Hyperrealism Sculpture PDFDocument49 pagesRon Mueck Amazing Hyperrealism Sculpture PDFbadbrad1975No ratings yet

- Reference(s) and instructions for candidatesDocument7 pagesReference(s) and instructions for candidatesduylinh65No ratings yet

- Terence Tao WikiDocument6 pagesTerence Tao Wikiduylinh65No ratings yet

- 2012-2013 Honors Geometry A ReviewDocument16 pages2012-2013 Honors Geometry A Reviewduylinh65No ratings yet

- Edexcel GCE: 6665/01 Core Mathematics C3 Advanced LevelDocument7 pagesEdexcel GCE: 6665/01 Core Mathematics C3 Advanced Levelduylinh65No ratings yet

- Price Tag: at Least $300,000,000Document13 pagesPrice Tag: at Least $300,000,000orizontasNo ratings yet

- Accessing MySQL From PHPDocument32 pagesAccessing MySQL From PHPduylinh65No ratings yet

- The Carleman'S Inequality For Negative Power Number: Nguyen Thanh Long, Nguyen Vu Duy LinhDocument7 pagesThe Carleman'S Inequality For Negative Power Number: Nguyen Thanh Long, Nguyen Vu Duy Linhduylinh65No ratings yet

- Translation Table Annex VII of The CLP Regulation GodalaDocument37 pagesTranslation Table Annex VII of The CLP Regulation GodalaRiccardo CozzaNo ratings yet

- Concentrations WorksheetDocument3 pagesConcentrations WorksheetMaquisig MasangcayNo ratings yet

- Cmos Electronic PDFDocument356 pagesCmos Electronic PDFJustin WilliamsNo ratings yet

- Roses Amend 12Document477 pagesRoses Amend 12Koert OosterhuisNo ratings yet

- Introduction and Basic Concepts: (Iii) Classification of Optimization ProblemsDocument19 pagesIntroduction and Basic Concepts: (Iii) Classification of Optimization Problemsaviraj2006No ratings yet

- Ch3 Work and EnergyDocument25 pagesCh3 Work and EnergyahmadskhanNo ratings yet

- Viscoelastic Modeling of Flexible Pavement With Abaqus PDFDocument143 pagesViscoelastic Modeling of Flexible Pavement With Abaqus PDFcabrel TokamNo ratings yet

- Stefan-Boltzmann Law Experiment ResultsDocument21 pagesStefan-Boltzmann Law Experiment ResultsBenjamin LukeNo ratings yet

- Dubbel-Handbook of Mechanical EngineeringDocument918 pagesDubbel-Handbook of Mechanical EngineeringJuan Manuel Domínguez93% (27)

- Book - Residual Life Assessment and MicrostructureDocument36 pagesBook - Residual Life Assessment and MicrostructureHamdani Nurdin100% (2)

- 7 4 Inverse of MatrixDocument13 pages7 4 Inverse of MatrixEbookcrazeNo ratings yet

- TALAT Lecture 1201: Introduction To Aluminium As An Engineering MaterialDocument22 pagesTALAT Lecture 1201: Introduction To Aluminium As An Engineering MaterialCORE MaterialsNo ratings yet

- Chapter 8 - Center of Mass and Linear MomentumDocument21 pagesChapter 8 - Center of Mass and Linear MomentumAnagha GhoshNo ratings yet

- Roger BakerDocument327 pagesRoger BakerfelipeplatziNo ratings yet

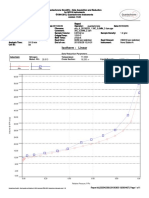

- GraphIsotherm Linear STTN - A - 20150226 - 1 30C - 0,5MM - 3 JamDocument1 pageGraphIsotherm Linear STTN - A - 20150226 - 1 30C - 0,5MM - 3 JamYunus HidayatNo ratings yet

- Lifting AnalysisDocument7 pagesLifting AnalysissaharuiNo ratings yet

- DB Concept NehasishDocument23 pagesDB Concept NehasishNehasish SahuNo ratings yet

- Cantilever Discussion and ResultDocument12 pagesCantilever Discussion and ResultYewHang SooNo ratings yet

- Dynamic Behavior of Chemical ProcessesDocument29 pagesDynamic Behavior of Chemical ProcessesDaniel GarcíaNo ratings yet

- Reflectarray AntennaDocument27 pagesReflectarray AntennaVISHNU UNNIKRISHNANNo ratings yet

- Finite Element Primer for Solving Diffusion ProblemsDocument26 pagesFinite Element Primer for Solving Diffusion Problemsted_kordNo ratings yet

- (Arfken) Mathematical Methods For Physicists 7th SOLUCIONARIO PDFDocument525 pages(Arfken) Mathematical Methods For Physicists 7th SOLUCIONARIO PDFJulian Montero100% (3)

- Chapter 6 Continuous Probability DistributionsDocument10 pagesChapter 6 Continuous Probability DistributionsAnastasiaNo ratings yet

- Motion in A Straight Line: Imp. September - 2012Document3 pagesMotion in A Straight Line: Imp. September - 2012nitin finoldNo ratings yet

- Qualification of Innovative Floating Substructures For 10MW Wind Turbines and Water Depths Greater Than 50mDocument41 pagesQualification of Innovative Floating Substructures For 10MW Wind Turbines and Water Depths Greater Than 50mjuho jungNo ratings yet

- Astavarga Tables of JupiterDocument4 pagesAstavarga Tables of JupiterSam SungNo ratings yet

- Lyapunov Stability AnalysisDocument17 pagesLyapunov Stability AnalysisumeshgangwarNo ratings yet

- Systematic Approach To Planning Monitoring Program Using Geotechnical InstrumentationDocument19 pagesSystematic Approach To Planning Monitoring Program Using Geotechnical InstrumentationKristina LanggunaNo ratings yet

- Camera CalibrationDocument39 pagesCamera CalibrationyokeshNo ratings yet